Many sources provide an implementation of a spin lock in CUDA:

- https://devtalk.nvidia.com/default/topic/1014009/try-to-use-lock-and-unlock-in-cuda/

- Cuda Mutex, why deadlock?

- How to implement Critical Section in cuda?

- Implementing a critical section in CUDA

- https://wlandau.github.io/gpu/lectures/cudac-atomics/cudac-atomics.pdf.

They follow the same pattern:

1. LOCK: wait until the value of lock can be atomically changed from 0 to 1

2. Critical section: perform the critical operations

3. UNLOCK: release the lock by setting its value back to 0
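The three steps can be sketched as follows. This is a minimal sketch, not a reference implementation: it assumes that only thread 0 of each block contends for the lock (so no two threads of the same warp compete for it), and the names `g_lock`, `g_counter`, `lock_acquire`, `lock_release` and `run_increment` are hypothetical. The two `__threadfence()` calls are a conservative choice; whether they are strictly required is exactly what the questions below ask.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical lock word: 0 = free, 1 = taken.
__device__ int g_lock = 0;
// Hypothetical data protected by g_lock (updated non-atomically on purpose).
__device__ int g_counter = 0;

// Step 1 (LOCK): spin until this thread changes *lock from 0 to 1.
__device__ void lock_acquire(int *lock) {
    while (atomicCAS(lock, 0, 1) != 0) {}
    __threadfence();  // conservative: order step-2 accesses after the acquire
}

// Step 3 (UNLOCK): order step-2 writes first, then set the lock back to 0.
__device__ void lock_release(int *lock) {
    __threadfence();
    atomicExch(lock, 0);
}

__global__ void increment_kernel() {
    // One contender per block, so threads of the same warp never compete.
    if (threadIdx.x == 0) {
        lock_acquire(&g_lock);
        // Step 2 (critical section): volatile forces real memory accesses.
        volatile int *counter = &g_counter;
        *counter = *counter + 1;
        lock_release(&g_lock);
    }
}

// Host helper: launch `blocks` blocks and return the final counter value.
int run_increment(int blocks) {
    int zero = 0;
    cudaMemcpyToSymbol(g_lock, &zero, sizeof(int));
    cudaMemcpyToSymbol(g_counter, &zero, sizeof(int));
    increment_kernel<<<blocks, 32>>>();
    cudaDeviceSynchronize();
    int result = 0;
    cudaMemcpyFromSymbol(&result, g_counter, sizeof(int));
    return result;
}

int main() {
    printf("counter = %d\n", run_increment(1000));  // prints "counter = 1000"
    return 0;
}
```

Each block's thread 0 increments the counter exactly once, so if the lock provides mutual exclusion the final value equals the number of blocks.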

Let's assume that there is no warp divergence or, in other words, that threads of the same warp never compete for the lock (we use locks only for inter-warp synchronization, not intra-warp synchronization).

What is the right way to implement step 1?

Some answers propose using atomicCAS, while others use atomicExch. Are the two equivalent?

while (0 != (atomicCAS(&lock, 0, 1))) {}

while (atomicExch(&lock, 1) != 0) {}
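For a lock word that only ever holds 0 or 1, the two loops appear to behave the same: both exit exactly when the returned old value was 0, and in that case both have stored 1. When the lock is already taken, atomicCAS leaves it untouched, whereas atomicExch overwrites 1 with 1, which does not change the state but is still a write. atomicCAS is the more general tool if the lock word ever holds more than two states (for example, an owner id). The sketch below (hypothetical names `acquire_cas`, `acquire_exch`, `count_kernel`, `run`) runs the same one-contender-per-block counter with each variant; it is a correctness check, not a performance comparison.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

__device__ int g_lock = 0;     // hypothetical lock word: 0 = free, 1 = taken
__device__ int g_counter = 0;  // hypothetical protected data

// Stores 1 only if the lock currently holds 0.
__device__ void acquire_cas(int *lock) {
    while (atomicCAS(lock, 0, 1) != 0) {}
}

// Always stores 1; if the old value was already 1, the state is unchanged.
__device__ void acquire_exch(int *lock) {
    while (atomicExch(lock, 1) != 0) {}
}

template <bool UseCas>
__global__ void count_kernel() {
    if (threadIdx.x != 0) return;  // one contender per block
    if (UseCas) {
        acquire_cas(&g_lock);
    } else {
        acquire_exch(&g_lock);
    }
    __threadfence();
    volatile int *counter = &g_counter;
    *counter = *counter + 1;  // critical section
    __threadfence();
    atomicExch(&g_lock, 0);   // release
}

// Host helper: run the kernel with the chosen acquire variant.
template <bool UseCas>
int run(int blocks) {
    int zero = 0;
    cudaMemcpyToSymbol(g_lock, &zero, sizeof(int));
    cudaMemcpyToSymbol(g_counter, &zero, sizeof(int));
    count_kernel<UseCas><<<blocks, 32>>>();
    cudaDeviceSynchronize();
    int result = 0;
    cudaMemcpyFromSymbol(&result, g_counter, sizeof(int));
    return result;
}

int main() {
    printf("atomicCAS:  %d\n", run<true>(500));
    printf("atomicExch: %d\n", run<false>(500));
    return 0;
}
```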

What is the right way to implement step 3?

Almost all sources use atomicExch for this:

atomicExch(&lock, 0);

One user proposed an alternative (Implementing a critical section in CUDA) that also makes sense, but it did not work for them (so it probably leads to undefined behavior in CUDA):

lock = 0;

It seems that for a general spin lock on a CPU this is valid: https://stackoverflow.com/a/7007893/8044236. Why can't we use it in CUDA?
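A bare `lock = 0;` lets the compiler keep `lock` in a register, delay or merge the store, or move it ahead of the critical-section writes, and by itself it says nothing about when those writes become visible to other SMs. On x86, for example, an ordinary store already has release semantics at the hardware level; the GPU memory model is weaker. The sketch below compares the usual atomicExch release with a volatile store preceded by `__threadfence()`. My understanding (an assumption based on the PTX memory model, where a fence followed by a volatile store can act as a release) is that the second form is also valid, but the atomicExch form is what most sources use. The helper names are hypothetical.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

__device__ int g_lock = 0;     // hypothetical lock word
__device__ int g_counter = 0;  // hypothetical protected data

__device__ void acquire(int *lock) {
    while (atomicCAS(lock, 0, 1) != 0) {}
    __threadfence();
}

// Release used by most sources: fence, then atomically store 0.
__device__ void release_atomic(int *lock) {
    __threadfence();
    atomicExch(lock, 0);
}

// Release with a plain store: volatile keeps the compiler from caching,
// eliding or merging the store, and the fence orders the critical-section
// writes before it. A bare `*lock = 0;` has neither property.
__device__ void release_store(int *lock) {
    __threadfence();
    *(volatile int *)lock = 0;
}

template <bool UseAtomic>
__global__ void count_kernel() {
    if (threadIdx.x != 0) return;  // one contender per block
    acquire(&g_lock);
    volatile int *counter = &g_counter;
    *counter = *counter + 1;       // critical section
    if (UseAtomic) {
        release_atomic(&g_lock);
    } else {
        release_store(&g_lock);
    }
}

// Host helper: run the kernel with the chosen release variant.
template <bool UseAtomic>
int run(int blocks) {
    int zero = 0;
    cudaMemcpyToSymbol(g_lock, &zero, sizeof(int));
    cudaMemcpyToSymbol(g_counter, &zero, sizeof(int));
    count_kernel<UseAtomic><<<blocks, 32>>>();
    cudaDeviceSynchronize();
    int result = 0;
    cudaMemcpyFromSymbol(&result, g_counter, sizeof(int));
    return result;
}

int main() {
    printf("atomicExch release:     %d\n", run<true>(500));
    printf("volatile store release: %d\n", run<false>(500));
    return 0;
}
```

A test that passes does not prove the plain-store variant is correct in general; it only shows that it does not obviously break in this setting.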

Do we have to use a memory fence and the volatile qualifier for memory accesses in step 2?

The CUDA documentation on atomic functions (https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html#atomic-functions) says that they do not guarantee any ordering:

Atomic functions do not act as memory fences and do not imply synchronization or ordering constraints for memory operations

Does this mean that we have to use a memory fence at the end of the critical section (step 2) to ensure that changes made inside it become visible to other threads before unlocking (step 3)?
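As a minimal sketch of where fences would go if they are needed, assuming the protected data lives in global memory and is accessed through a volatile pointer: one `__threadfence()` right after acquiring (so step-2 reads are ordered after the lock is held) and one right before releasing (so step-2 writes are ordered before the unlock). The struct `Account`, its two fields and the helper `run_update` are hypothetical; the fields are updated together so that a lost update would show up in either final value.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical data with two fields that must be updated together.
struct Account {
    int count;
    int sum;
};

__device__ int g_lock = 0;
__device__ Account g_account = {0, 0};

__global__ void update_kernel() {
    if (threadIdx.x != 0) return;  // one contender per block

    // (1) LOCK
    while (atomicCAS(&g_lock, 0, 1) != 0) {}
    __threadfence();  // order step-2 accesses after the acquire

    // (2) critical section: volatile so both fields are really loaded and stored
    volatile Account *acc = &g_account;
    acc->count = acc->count + 1;
    acc->sum = acc->sum + (int)blockIdx.x;

    __threadfence();  // order step-2 writes before the release
    // (3) UNLOCK
    atomicExch(&g_lock, 0);
}

// Host helper: true if both fields match the expected totals.
bool run_update(int blocks) {
    int unlocked = 0;
    Account zero = {0, 0};
    cudaMemcpyToSymbol(g_lock, &unlocked, sizeof(int));
    cudaMemcpyToSymbol(g_account, &zero, sizeof(Account));
    update_kernel<<<blocks, 32>>>();
    cudaDeviceSynchronize();
    Account result;
    cudaMemcpyFromSymbol(&result, g_account, sizeof(Account));
    return result.count == blocks && result.sum == blocks * (blocks - 1) / 2;
}

int main() {
    printf("consistent: %s\n", run_update(1000) ? "yes" : "no");
    return 0;
}
```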

Does CUDA guarantee that other threads will ever see the changes made by a thread's atomic operations in steps 1 and 3?

This is explicitly not guaranteed for memory fences (https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html#memory-fence-functions):

Memory fence functions only affect the ordering of memory operations by a thread; they do not ensure that these memory operations are visible to other threads (like __syncthreads() does for threads within a block (see Synchronization Functions)).

Is it then also not guaranteed for atomic operations? If so, all spin lock implementations in CUDA rely on undefined behavior.

How can we implement a reliable spin lock in the presence of warp divergence?

Now, assuming the questions above have been answered, let's drop the assumption that there is no warp divergence. Is it possible to implement a spin lock in that case?

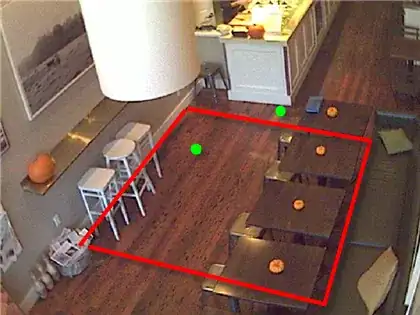

The main issue (deadlock) is represented at slide 30 of https://wlandau.github.io/gpu/lectures/cudac-atomics/cudac-atomics.pdf:

Is the only option to replace the while loop in step 1 with an if and enclose all three steps in a single while loop, as proposed, for example, in Thread/warp local lock in cuda or CUDA, mutex and atomicCAS()?
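The "if inside a single while loop" pattern can be sketched as follows, this time letting every thread of every block contend for the lock, including threads of the same warp. The idea is that the thread that wins the atomicCAS runs the critical section and releases the lock inside the same branch, before the warp reconverges at the end of the loop body; in the naive version, the losing threads of the warp keep spinning while the winner, which has left the loop, may never be scheduled to reach the release. This is a sketch under assumptions: as far as I know, on pre-Volta GPUs this relies on how the compiler lays out the divergent branch rather than on a documented guarantee, and on Volta and later (compute capability 7.0+) independent thread scheduling is intended to make even the naive while loop free of this deadlock. All names are hypothetical.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

__device__ int g_lock = 0;     // hypothetical lock word
__device__ int g_counter = 0;  // hypothetical protected data

__global__ void increment_all_threads_kernel() {
    bool done = false;
    while (!done) {
        // Single attempt per iteration; no inner spin loop.
        if (atomicCAS(&g_lock, 0, 1) == 0) {
            __threadfence();
            volatile int *counter = &g_counter;
            *counter = *counter + 1;  // critical section
            __threadfence();
            atomicExch(&g_lock, 0);   // release before leaving the branch
            done = true;
        }
        // Threads that lost this round fall through and retry.
    }
}

// Host helper: every thread increments the counter once under the lock.
int run_all_threads(int blocks, int threads) {
    int zero = 0;
    cudaMemcpyToSymbol(g_lock, &zero, sizeof(int));
    cudaMemcpyToSymbol(g_counter, &zero, sizeof(int));
    increment_all_threads_kernel<<<blocks, threads>>>();
    cudaDeviceSynchronize();
    int result = 0;
    cudaMemcpyFromSymbol(&result, g_counter, sizeof(int));
    return result;
}

int main() {
    printf("counter = %d\n", run_all_threads(64, 128));
    return 0;
}
```

If the lock works, the counter should equal blocks × threads (8192 for 64 × 128).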