I think thebluephantom's answer is right.

I was in the same situation as you until today, and I had also only found answers saying that Spark's cache() does not work within a single query.

My own Spark job, which runs a single query, also did not seem to be caching anything.

Because of that, I also doubted his answer at first.

But he showed evidence, the green box in the DAG, that the cache is used even when he executes a single query.

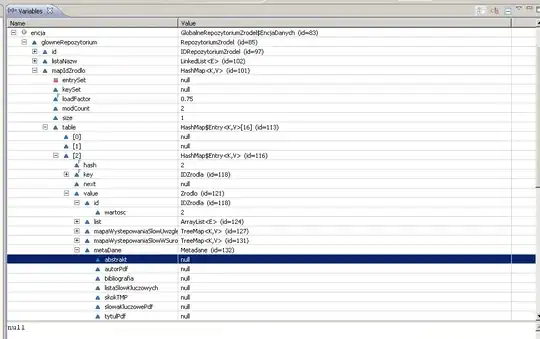

So I tested the three cases below with a DataFrame (not an RDD), and the results suggest he is right.

The execution plan also changes: it becomes simpler and uses InMemoryRelation, as shown below.

- without cache

- using cache

- using cache with calling unpersist before action

without cache

example

val A = spark.read.format().load()

val B = A.where(cond1).select(columns1)

val C = A.where(cond2).select(columns2)

val D = B.join(C)

D.save()

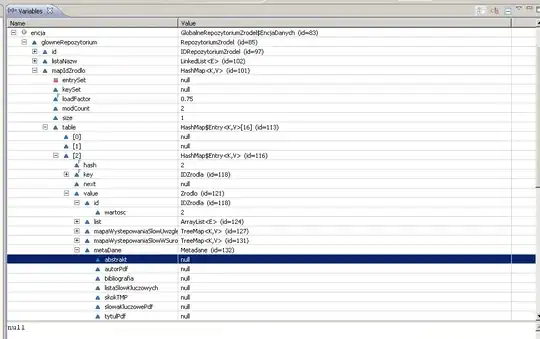

DAG for my case

My actual job is a bit more complicated than the example.

The DAG is messy even though the job does nothing complicated.

You can also see that the scan occurs 4 times.

with cache

example

val A = spark.read.format().load().cache() // the cache will be used

val B = A.where(cond1).select(columns1)

val C = A.where(cond2).select(columns2)

val D = B.join(C)

D.save()

This caches A, even within a single query.

In the DAG you can see InMemoryTableScan being read twice.
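If you prefer to check this from code instead of the Spark UI, here is a minimal sketch. It assumes a local SparkSession and uses `spark.range` as a hypothetical stand-in for `spark.read.format().load()`; the filter conditions and column names are made up for illustration. It counts the InMemoryRelation nodes in the plan that Spark builds after substituting cached data.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.execution.columnar.InMemoryRelation

val spark = SparkSession.builder()
  .master("local[*]")
  .appName("cache-check")
  .getOrCreate()
import spark.implicits._

// Hypothetical stand-in for spark.read.format().load()
val A = spark.range(0, 1000).toDF().cache()

// Made-up conditions and column names, just to get two branches from A
val B = A.where($"id" % 2 === 0).select($"id".as("even_id"))
val C = A.where($"id" % 3 === 0).select($"id".as("third_id"))
val D = B.join(C, $"even_id" === $"third_id")

// withCachedData is the logical plan after Spark has swapped in cached fragments
val cachedScans = D.queryExecution.withCachedData
  .collect { case r: InMemoryRelation => r }
  .size
println(s"InMemoryRelation nodes in the plan: $cachedScans")

D.count()      // the action; A is materialized in the cache here
A.unpersist()  // release the cache only after the action
```

`D.explain()` prints the same information in readable form, if you just want to eyeball the plan.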

DAG for my case

with cache and unpersist before action

val A = spark.read.format().load().cache()

val B = A.where(cond1).select(columns1)

val C = A.where(cond2).select(columns2)

/* I thought A would not be needed anymore */

A.unpersist()

val D = B.join(C)

D.save()

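This code does not cache the A DataFrame. cache() is lazy: it only marks A for caching, and nothing is stored until an action runs. Here the mark is removed by unpersist() before the action (D.save()) starts.

So the result is exactly the same as the first case (without cache).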
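This can also be checked from code. The sketch below is an assumption-laden illustration, not my actual job: `spark.range` stands in for the real source, and the conditions and column names are invented. It looks at `storageLevel` before and after the early `unpersist()`, then counts InMemoryRelation nodes in D's plan.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.execution.columnar.InMemoryRelation
import org.apache.spark.storage.StorageLevel

val spark = SparkSession.builder()
  .master("local[*]")
  .appName("early-unpersist")
  .getOrCreate()
import spark.implicits._

// Hypothetical stand-in for spark.read.format().load()
val A = spark.range(0, 1000).toDF().cache()

// cache() registers A immediately; the data itself is only stored on the first action
val markedAfterCache = A.storageLevel != StorageLevel.NONE

val B = A.where($"id" % 2 === 0).select($"id".as("even_id"))
val C = A.where($"id" % 3 === 0).select($"id".as("third_id"))

// Called too early: no action has run yet, so nothing was ever cached
A.unpersist()
val stillMarked = A.storageLevel != StorageLevel.NONE

val D = B.join(C, $"even_id" === $"third_id")

// D's plan is resolved against the cache only now, when A is no longer registered
val cachedScans = D.queryExecution.withCachedData
  .collect { case r: InMemoryRelation => r }
  .size
println(s"marked after cache(): $markedAfterCache, after unpersist(): $stillMarked")
println(s"InMemoryRelation nodes in the plan: $cachedScans")

D.count()  // runs against the source directly, like the case without cache
```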

The important thing is that unpersist() must be called after the action (after D.save()).

But when I asked some people at my company, many of them wrote code like case 3 and did not know about this.

I think that is why many people mistakenly believe that cache does not work within a single query.

cache and unpersist should be used like this:

val A = spark.read.format().load().cache()

val B = A.where(cond1).select(columns1)

val C = A.where(cond2).select(columns2)

val D = B.join(C)

D.save()

/* unpersist must be after action */

A.unpersist()

This gives exactly the same result as case 2 (with cache), with unpersist called after D.save().
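One way to make the ordering hard to get wrong is to wrap it in a small helper. `withCache` below is a hypothetical helper I made up for illustration, not part of Spark: it caches the DataFrame, runs whatever you pass in (which should include the action), and only then unpersists, even if the body throws. The data and conditions are again invented stand-ins.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical helper (not a Spark API): cache, run the body, then always unpersist
def withCache[T](df: DataFrame)(body: DataFrame => T): T = {
  val cached = df.cache()
  try body(cached)
  finally cached.unpersist()
}

val spark = SparkSession.builder()
  .master("local[*]")
  .appName("with-cache")
  .getOrCreate()
import spark.implicits._

// spark.range is a stand-in for spark.read.format().load()
val matched = withCache(spark.range(0, 1000).toDF()) { a =>
  val b = a.where($"id" % 2 === 0).select($"id".as("even_id"))
  val c = a.where($"id" % 3 === 0).select($"id".as("third_id"))
  // The action must run inside the block, while the cache is still registered
  b.join(c, $"even_id" === $"third_id").count()
}
println(s"matched rows: $matched")
```

The catch is that the body has to trigger the action itself. If it only returns a lazy DataFrame and the action runs outside the block, you are back to case 3.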

So I suggest trying cache as in thebluephantom's answer.

If I have gotten anything wrong, please point it out.

Thanks to thebluephantom for solving my problem.