I'm quite new to Spark. I've installed the pyspark library in a PyCharm venv and written the code below:

    # Imports
    from pyspark.sql import SparkSession

    # Create SparkSession
    spark = SparkSession.builder \
        .appName('DataFrame') \
        .master('local[*]') \
        .getOrCreate()

    spark.conf.set("spark.sql.shuffle.partitions", 5)

    path = "file_path"
    df = spark.read.format("avro").load(path)

Everything seems to be okay, but when I try to read an Avro file I get this message:

pyspark.sql.utils.AnalysisException: 'Failed to find data source: avro. Avro is built-in but external data source module since Spark 2.4. Please deploy the application as per the deployment section of "Apache Avro Data Source Guide".;'

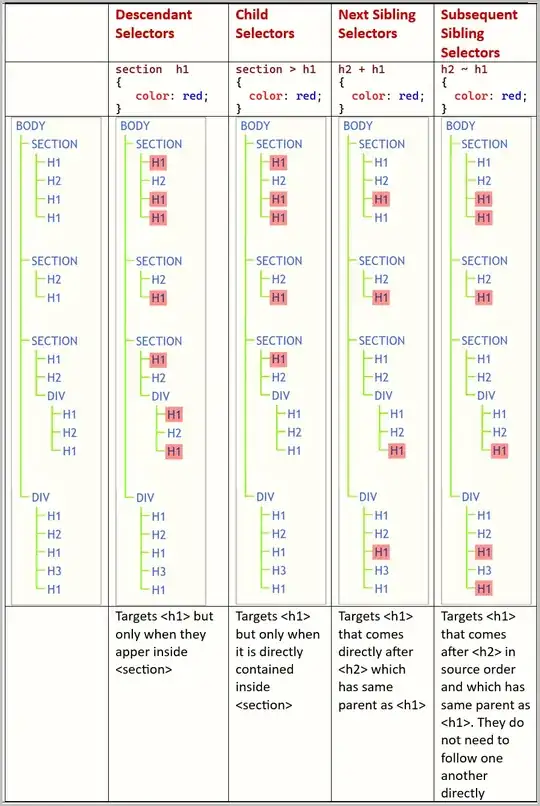

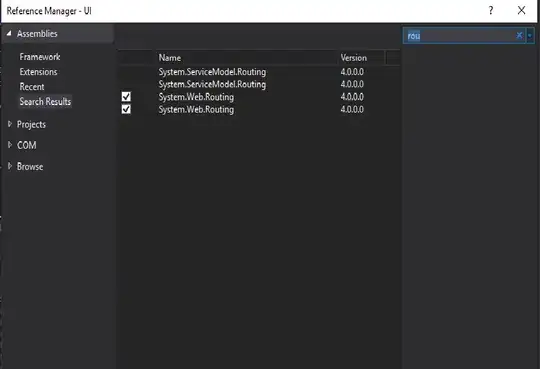

When I go to this page: https://spark.apache.org/docs/latest/sql-data-sources-avro.html there is something like this:

I have no idea how to implement this. Do I need to download something in PyCharm, or do I have to find external files to modify?

Thank you for help!

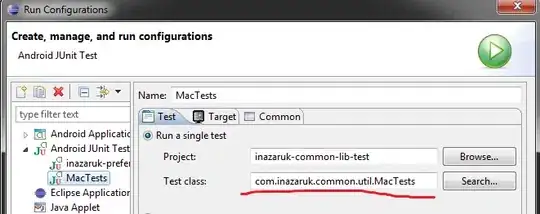

Update (2019-12-06): Because I'm using Anaconda, I opened the Anaconda prompt and ran this command:

pyspark --packages com.databricks:spark-avro_2.11:4.0.0

It downloaded some modules, but when I went back to PyCharm and ran the script, the same error appeared.
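For reference, here is a minimal sketch of what the guide's deployment section seems to ask for when the script is started from an IDE instead of through `pyspark --packages`. As far as I understand, packages passed on the command line only apply to the shell session that command starts, so a script run from PyCharm would have to request the module itself through the `spark.jars.packages` setting. The helper names `avro_package` and `build_session` are hypothetical, and the default versions (Spark 2.4.4, Scala 2.11) are assumptions that would need to match the installed Spark build.

```python
def avro_package(spark_version, scala_version="2.11"):
    """Build the Maven coordinate of the spark-avro module (hypothetical helper)."""
    return "org.apache.spark:spark-avro_{}:{}".format(scala_version, spark_version)


def build_session(spark_version="2.4.4", scala_version="2.11"):
    """Create a SparkSession that requests the spark-avro module at startup.

    The default versions are assumptions, not values taken from my setup.
    """
    # Imported lazily so avro_package() can be used without pyspark installed.
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .appName("DataFrame")
        .master("local[*]")
        # Has to be set before the session is created, otherwise it is ignored.
        .config("spark.jars.packages", avro_package(spark_version, scala_version))
        .getOrCreate()
    )
```

With this in place, `spark = build_session()` followed by `spark.read.format("avro").load(path)` would be the equivalent of the original script. Note that the coordinate here is `org.apache.spark:spark-avro`, the one named in the guide, and not the `com.databricks` package used in the update above.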