I'm trying to scrape data from Google Translate for educational purposes.

Here is the code:

    from urllib.request import Request, urlopen

    from bs4 import BeautifulSoup

    # https://translate.google.com/#view=home&op=translate&sl=en&tl=en&text=hello
    # tlid-transliteration-content transliteration-content full


    class Phonetizer:
        def __init__(self, sentence: str, language_: str = 'en'):
            self.words = sentence.split()
            self.language = language_

        def get_phoname(self):
            for word in self.words:
                print(word)
                url = "https://translate.google.com/#view=home&op=translate&sl=" + self.language + "&tl=" + self.language + "&text=" + word
                print(url)
                req = Request(url, headers={'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:71.0) Gecko/20100101 Firefox/71.0'})
                webpage = urlopen(req).read()
                # Save the raw response so it can be inspected by hand.
                with open("debug.html", "w+") as f:
                    f.write(webpage.decode("utf-8"))
                # print(webpage)
                bsoup = BeautifulSoup(webpage, 'html.parser')
                phonems = bsoup.findAll("div", {"class": "tlid-transliteration-content transliteration-content full"})
                print(phonems)
                # break

The problem is that the HTML it gives me contains no element with the CSS class `tlid-transliteration-content transliteration-content full`.
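
To double-check that claim without relying on BeautifulSoup, here is a minimal sketch using only the standard library. It counts `<div>` elements whose `class` attribute contains all three class tokens. `ClassFinder`, `count_matches`, and the two sample strings are hypothetical names and data made up for illustration; in practice the text of `debug.html` would be passed in instead.

```python
from html.parser import HTMLParser

# Class tokens as seen in the browser inspector.
TARGET_CLASSES = {"tlid-transliteration-content", "transliteration-content", "full"}


class ClassFinder(HTMLParser):
    """Counts <div> start tags whose class attribute contains every wanted token."""

    def __init__(self, wanted):
        super().__init__()
        self.wanted = set(wanted)
        self.matches = 0

    def handle_starttag(self, tag, attrs):
        if tag != "div":
            return
        # A valueless attribute is reported as None, hence the `or ""`.
        classes = set((dict(attrs).get("class") or "").split())
        if self.wanted <= classes:
            self.matches += 1


def count_matches(html_text, wanted=TARGET_CLASSES):
    finder = ClassFinder(wanted)
    finder.feed(html_text)
    return finder.matches


# Hypothetical stand-ins: what the inspector shows vs. what a raw download might look like.
rendered = '<div class="tlid-transliteration-content transliteration-content full">x</div>'
raw = '<div class="frame"><script>/* page is built by JavaScript */</script></div>'

print(count_matches(rendered))
print(count_matches(raw))
```

Running `count_matches(open("debug.html").read())` on the saved file should show whether the element is really missing from the downloaded HTML or just not matched by the BeautifulSoup query.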

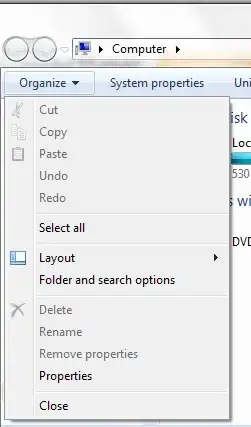

Using the browser's inspector, however, I found that the phonemes are inside this CSS class (see the screenshot).

I saved the HTML and looked at it: no `tlid-transliteration-content transliteration-content full` element is present, and the page does not look like the normal Google Translate page; it is incomplete. I have heard that Google blocks crawlers, bots, and spiders, and that its systems can detect them easily, so I added a User-Agent header, but I still can't access the whole page.

How can I access the whole page and read all the data from the Google Translate page?
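
One possible explanation worth checking, offered as a sketch rather than a confirmed diagnosis: everything after `#` in a URL is a fragment, and a fragment is handled on the client side and is not sent to the server in the HTTP request. The snippet below uses only the standard library to show what `urllib` would actually ask the server for, given a URL of the same shape as the one built above. `url`, `parts`, and `req` are just illustrative names.

```python
from urllib.parse import urlsplit
from urllib.request import Request

# Same URL shape as in the code above.
url = "https://translate.google.com/#view=home&op=translate&sl=en&tl=en&text=hello"

parts = urlsplit(url)
print("path:    ", repr(parts.path))      # what the server is asked for
print("query:   ", repr(parts.query))     # empty, since nothing precedes the '#'
print("fragment:", repr(parts.fragment))  # stays on the client side

# Request.selector is the path that ends up on the HTTP request line.
req = Request(url)
print("selector:", repr(req.selector))
```

If this reading is right, the server only ever sees a request for `/`, so the language and text parameters never reach it, and the transliteration is presumably filled in later by JavaScript running in the browser.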

Want to contribute to this project?

I have also tried the code below:

    from requests_html import AsyncHTMLSession

    asession = AsyncHTMLSession()
    lang = "en"
    word = "hello"
    url = "https://translate.google.com/#view=home&op=translate&sl=" + lang + "&tl=" + lang + "&text=" + word


    async def get_url():
        r = await asession.get(url)
        print(r)
        return r


    results = asession.run(get_url)
    for result in results:
        print(result.html.url)
        print(result.html.find('#tlid-transliteration-content'))
        print(result.html.find('#tlid-transliteration-content transliteration-content full'))

So far, it gives me nothing.