I'm running an Amazon EC2 (Ubuntu) instance that writes out a JSON file daily. I'm now trying to copy this JSON file to Amazon S3 so that I can eventually download it to my local machine. Following the instructions here (reading in a file from ubuntu (AWS EC2) on local machine?), I'm using boto3 to copy the JSON from the Ubuntu instance to S3:

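    import boto3

    print("This script uploads the SF events JSON to s3")

    # Access keys of the IAM user (redacted)
    ACCESS_ID = 'xxxxxxxx'
    ACCESS_KEY = 'xxxxxxx'

    # S3 resource that signs every request with the IAM user's keys
    s3 = boto3.resource('s3',
                        aws_access_key_id=ACCESS_ID,
                        aws_secret_access_key=ACCESS_KEY)


    def upload_file_to_s3(s3_path, local_path):
        # "s3://mybucket/sf_events.json".split('/') -> ['s3:', '', 'mybucket', 'sf_events.json']
        bucket = s3_path.split('/')[2]
        print(bucket)
        # Everything after the bucket name is the object key
        file_path = '/'.join(s3_path.split('/')[3:])
        print(file_path)
        # Object.upload_file() returns None on success and raises
        # boto3.exceptions.S3UploadFailedError on failure
        response = s3.Object(bucket, file_path).upload_file(local_path)
        print(response)


    s3_path = "s3://mybucket/sf_events.json"
    local_path = "/home/ubuntu/bandsintown/sf_events.json"

    upload_file_to_s3(s3_path, local_path)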
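
The `upload_file_to_s3` function recovers the bucket and key by splitting the URI on `/`, which works for this path. A slightly sturdier version of the same parsing is sketched here with the standard library's `urllib.parse.urlparse`. The helper name `parse_s3_uri` is hypothetical; boto3 itself simply takes the bucket and key as separate arguments.

```python
from urllib.parse import urlparse


def parse_s3_uri(s3_uri):
    """Split an 's3://bucket/key' URI into (bucket, key).

    Hypothetical helper for illustration only; it is not part of boto3.
    """
    parsed = urlparse(s3_uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an s3:// URI: {s3_uri!r}")
    # netloc holds the bucket; the path starts with '/', which S3 keys should not
    return parsed.netloc, parsed.path.lstrip("/")


bucket, key = parse_s3_uri("s3://mybucket/sf_events.json")
print(bucket, key)  # mybucket sf_events.json
```

The returned pair can be passed straight to `s3.Object(bucket, key)`.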

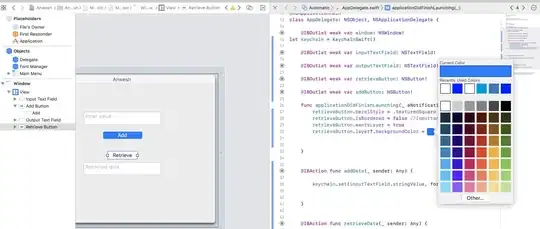

The credentials I'm using here come from a new user I created in AWS Identity and Access Management (IAM); screenshot attached.
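
For an IAM user's keys to upload anything, that user needs a policy allowing `s3:PutObject` on objects in the bucket. Below is a minimal sketch of such a policy document, built as a Python dict and printed as JSON (for example, to paste into the IAM console's JSON policy editor). `mybucket` comes from the script; restricting the policy to `s3:PutObject` alone is an assumption about what this particular upload needs.

```python
import json


def put_object_policy(bucket):
    """Return a minimal IAM policy document allowing uploads into `bucket`.

    Sketch only: grants s3:PutObject on every key in the bucket and nothing else.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowUploadsToBucket",
                "Effect": "Allow",
                "Action": "s3:PutObject",
                # Object-level actions are checked against object ARNs, hence the /*
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


print(json.dumps(put_object_policy("mybucket"), indent=2))
```

The trailing `/*` matters: `arn:aws:s3:::mybucket` names the bucket itself, while `PutObject` is evaluated against object ARNs such as `arn:aws:s3:::mybucket/sf_events.json`.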

However, when I run this script, I get the following error:

    boto3.exceptions.S3UploadFailedError: Failed to upload /home/ubuntu/bandsintown/sf_events.json to mybucket/sf_events.json: An error occurred (AccessDenied) when calling the PutObject operation: Access Denied
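
One way to narrow down an `AccessDenied` like this is to ask AWS STS which identity the requests are actually signed with, using `GetCallerIdentity` (which requires no IAM permissions). The function name below is hypothetical, and the `session` parameter is injectable only so the sketch can be exercised without AWS.

```python
def describe_caller(session=None):
    """Return the ARN of the identity that boto3 signs requests with.

    'arn:aws:iam::<account>:user/<name>' means IAM user keys are in use;
    'arn:aws:sts::<account>:assumed-role/<role>/<session>' means a role,
    such as an EC2 instance profile, is in use.
    """
    if session is None:
        import boto3  # imported lazily so the function can be tested offline
        # Default session: credentials come from boto3's usual lookup chain
        session = boto3.session.Session()
    sts = session.client("sts")
    # GetCallerIdentity needs no IAM permissions, so it works even when S3 denies access
    return sts.get_caller_identity()["Arn"]
```

To check the keys the script uses, pass a session built from them, e.g. `describe_caller(boto3.session.Session(aws_access_key_id=ACCESS_ID, aws_secret_access_key=ACCESS_KEY))`.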

I've also tried attaching an IAM role to the EC2 instance and giving that role full S3 permissions, but still no luck (see image below).
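
A likely reason the role made no difference: credentials passed directly to `boto3.resource()` or `boto3.client()` come first in boto3's credential lookup, so the script keeps signing with the IAM user's keys even after a role is attached. boto3 only falls back to the EC2 instance metadata service (where an attached role's credentials come from) when nothing earlier in the chain, such as environment variables or `~/.aws/credentials`, supplies keys. Below is a sketch of the upload with no keys in the code; the function name is hypothetical, and the optional `s3_client` argument exists only so it can be tested without AWS.

```python
def upload_with_default_credentials(local_path, bucket, key, s3_client=None):
    """Upload local_path to s3://bucket/key without hardcoding access keys.

    With no keys passed, boto3 walks its default credential chain
    (environment variables, ~/.aws files, ..., and last the EC2 instance
    metadata service), so a role attached to the instance can be used.
    """
    if s3_client is None:
        import boto3  # imported lazily so the sketch can be tested offline
        # No aws_access_key_id / aws_secret_access_key here, on purpose
        s3_client = boto3.client("s3")
    # upload_file(Filename, Bucket, Key) returns None on success and raises
    # boto3.exceptions.S3UploadFailedError on failure
    s3_client.upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"
```

On the instance this would be called as `upload_with_default_credentials("/home/ubuntu/bandsintown/sf_events.json", "mybucket", "sf_events.json")`. If `aws configure` was ever run on the instance, the keys it wrote to `~/.aws/credentials` would still take precedence over the role.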

It appears to be a permissions issue. How might I begin to solve this? Do I need the AWS CLI? I'm also reading in the boto3 documentation that I may need an `aws_session_token` parameter in my script.
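
On the `aws_session_token` question: a session token is only part of temporary credentials issued by AWS STS (for example, when assuming a role). Long-term access keys created for an IAM user, like the ones in the script, consist of just a key ID and a secret. AWS access key IDs beginning with `AKIA` are long-term keys and those beginning with `ASIA` are temporary. The hypothetical helper below uses that prefix to decide whether a token is required.

```python
def credential_kwargs(access_key_id, secret_access_key, session_token=None):
    """Build credential keyword arguments for boto3.client()/boto3.resource().

    Hypothetical helper: a session token is added only when one is given,
    and temporary (ASIA...) keys without a token are rejected up front.
    """
    kwargs = {
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
    }
    if session_token:
        kwargs["aws_session_token"] = session_token
    elif access_key_id.startswith("ASIA"):
        # Temporary STS credentials are unusable without their session token
        raise ValueError("temporary (ASIA...) credentials need a session token")
    # Long-term IAM user keys (AKIA...) need no token
    return kwargs
```

Usage would look like `s3 = boto3.resource('s3', **credential_kwargs(ACCESS_ID, ACCESS_KEY))`. boto3 also does not need the AWS CLI installed; the CLI's `aws configure` is just one convenient way to write the `~/.aws/credentials` file that boto3 reads.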