Ex-post remark: see the benchmarked Vass' proposed performance edge on the hosted eco-system, would be great to reproduce this on multi-locale silicon so as to prove it universal for larger scales...

Performance?

Benchmark! ... always, no exceptions, no excuse - surprises not seldom -ccflags -O3

This is what makes chapel so great. Thanks a lot the Chapel team for developing and improving such great computing tool for the HPC over more than the last decade.

With a full love in true-[PARALLEL] efforts, the performance is always a result of both the design practices and underlying system hardware, never a just syntax-constructor granted "bonus".

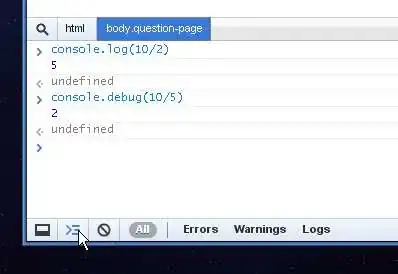

Using a TiO.run online IDE for demo-test, the results for a single locale silicon are these:

TiO.run platform uses 1 numLocales,

having 2 physical CPU-cores accessible (numPU-s)

with 2 maxTaskPar parallelism limit

For grid{1,2,3}[ 128, 128] the tested forall sum-product took 3.124 [us] incl. fillRandom()-ops

For grid{1,2,3}[ 128, 128] the tested forall sum-product took 2.183 [us] excl. fillRandom()-ops

For grid{1,2,3}[ 128, 128] the Vass-proposed sum-product took 1.925 [us] excl. fillRandom()-ops

For grid{1,2,3}[ 256, 256] the tested forall sum-product took 28.593 [us] incl. fillRandom()-ops

For grid{1,2,3}[ 256, 256] the tested forall sum-product took 25.254 [us] excl. fillRandom()-ops

For grid{1,2,3}[ 256, 256] the Vass-proposed sum-product took 21.493 [us] excl. fillRandom()-ops

For grid{1,2,3}[1024,1024] the tested forall sum-product took 2.658.560 [us] incl. fillRandom()-ops

For grid{1,2,3}[1024,1024] the tested forall sum-product took 2.604.783 [us] excl. fillRandom()-ops

For grid{1,2,3}[1024,1024] the Vass-proposed sum-product took 2.103.592 [us] excl. fillRandom()-ops

For grid{1,2,3}[2048,2048] the tested forall sum-product took 27.137.060 [us] incl. fillRandom()-ops

For grid{1,2,3}[2048,2048] the tested forall sum-product took 26.945.871 [us] excl. fillRandom()-ops

For grid{1,2,3}[2048,2048] the Vass-proposed sum-product took 25.351.754 [us] excl. fillRandom()-ops

For grid{1,2,3}[2176,2176] the tested forall sum-product took 45.561.399 [us] incl. fillRandom()-ops

For grid{1,2,3}[2176,2176] the tested forall sum-product took 45.375.282 [us] excl. fillRandom()-ops

For grid{1,2,3}[2176,2176] the Vass-proposed sum-product took 41.304.391 [us] excl. fillRandom()-ops

--fast --ccflags -O3

For grid{1,2,3}[2176,2176] the tested forall sum-product took 39.680.133 [us] incl. fillRandom()-ops

For grid{1,2,3}[2176,2176] the tested forall sum-product took 39.494.035 [us] excl. fillRandom()-ops

For grid{1,2,3}[2176,2176] the Vass-proposed sum-product took 44.611.009 [us] excl. fillRandom()-ops

RESULTS:

On a single-locale ( single, virtualised processor, 2 cores, running all public time-constrained ( < 60 [s] ) time-shared public workloads online IDE ) device the still sub-optimal ( Vass' approach being further about 20% faster ) yields for grid scales 2048x2048 about ~ 4.17 x faster results than the Nvidia Jetson-hosted computing. This performance edge seems to further grow larger for larger memory layouts, as the scope of the memory-I/O grows and CPU-L1/L2/L3-cache pre-fetching will (ought follow the ~ O(n^3) scaling) further improve the CPU-based NUMA platforms' performance edge.

Would be great to see the achievable performance and ~ O(n^3) scaling obedience as being executed on multi-locale devices and native Cray NUMA cluster platforms.

use Random, Time, IO.FormattedIO;

var t1 : Timer;

var t2 : Timer;

t1.start(); //----------------------------------------------------------------[1]

config const size = 2048;

var grid1 : [1..size, 1..size] real;

var grid2 : [1..size, 1..size] real;

var grid3 : [1..size, 1..size] real;

fillRandom(grid1);

fillRandom(grid2);

t2.start(); //====================================[2]

forall i in 1..size {

forall j in 1..size {

forall k in 1..size {

grid3[i,j] += grid1[i,k] * grid2[k,j];

}

}

}

t2.stop(); //=====================================[2]

t1.stop(); //-----------------------------------------------------------------[1]

writef( "For grid{1,2,3}[%4i,%4i] the tested forall sum-product took %12i [us] incl. fillRandom()-ops\n",

size,

size,

t1.elapsed( TimeUnits.microseconds )

);

writef( "For grid{1,2,3}[%4i,%4i] the tested forall sum-product took %12i [us] excl. fillRandom()-ops\n",

size,

size,

t2.elapsed( TimeUnits.microseconds )

);

///////////////////////////////////////////////////////////////////////////////////

t1.clear();

t2.clear();

const D3 = {1..size, 1..size, 1..size};

t2.start(); //====================================[3]

forall (i,j,k) in D3 do

grid3[i,j] += grid1[i,k] * grid2[k,j];

t2.stop(); //=====================================[3]

writef( "For grid{1,2,3}[%4i,%4i] the Vass-proposed sum-product took %12i [us] excl. fillRandom()-ops\n",

size,

size,

t2.elapsed( TimeUnits.microseconds )

);

//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\//\\

// TiO.run platform uses 1 numLocales, having 2 physical CPU-cores accessible (numPU-s) with 2 maxTaskPar parallelism limit

writef( "TiO.run platform uses %3i numLocales, having %3i physical CPU-cores accessible (numPU-s) with %3i maxTaskPar parallelism limit\n",

numLocales,

here.numPUs( false, true ),

here.maxTaskPar

);