I tried to build a multiclass classification model using LightGBM. After training the model, I scraped some data online and fed it into my model for prediction.

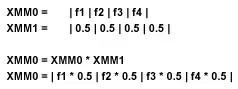

However, the result seems weird to me. I thought each inner array of my prediction holds the probability for each class (I have 4 classes), so each row should add up to 1. The result for x-test (the data I used for validation) looks right, but the result for the data I scraped does not: its rows don't add up to 1.
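To make the check concrete, here is a minimal sketch of how the row sums can be verified, assuming the prediction is a nested array with one row per sample and one column per class. The helper name `rows_sum_to_one` and the numbers are made up for illustration; they are not my actual output.

```python
import math

def rows_sum_to_one(pred, tol=1e-6):
    """Return one boolean per row: True if that row's values sum to 1 within tol."""
    return [math.isclose(sum(row), 1.0, abs_tol=tol) for row in pred]

# Made-up values for illustration only (4 classes per row).
example_pred = [
    [0.1, 0.2, 0.3, 0.4],  # a valid probability row
    [0.5, 0.4, 0.3, 0.2],  # sums to 1.4, so not a probability row
]

print(rows_sum_to_one(example_pred))  # prints [True, False]
```

The same function should also work on a NumPy array, since it only iterates over the rows.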

In this post, multiclass-classification-with-lightgbm, the prediction result didn't add up to 1 either!

The two dataframes look the same to me, and I am using exactly the same model! Can someone please tell me how to interpret the result, or what I have done wrong?