I have a disparity image created with a calibrated stereo camera pair and OpenCV. It looks good, and my calibration data is good.

I need to calculate the real-world distance at a pixel.

From other questions on Stack Overflow, I see that the approach is:

`depth = baseline * focal / disparity`
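The same relation for a rectified stereo pair, written out with units (the symbol names are chosen here for illustration). The inverse form gives the disparity a point at a known depth should produce:

$$
Z = \frac{f_x \, B}{d}, \qquad d = \frac{f_x \, B}{Z}
$$

Here $f_x$ is the focal length in pixels, $B$ is the baseline (for example in meters), $d$ is the disparity in pixels (the horizontal shift between matched pixels in the rectified images), and $Z$ is the depth in the same unit as $B$.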

I read the value at the clicked pixel with a mouse callback:

```cpp
setMouseCallback("disparity", onMouse, &disp);

static void onMouse(int event, int x, int y, int flags, void* param)
{
    cv::Mat &xyz = *((cv::Mat*)param); //cast and deref the param (the disparity Mat)

    if (event == cv::EVENT_LBUTTONDOWN)
    {
        // 8-bit value of the disparity image at the clicked pixel
        unsigned int val = xyz.at<uchar>(y, x);

        // depth = focal * baseline / disparity
        double depth = (camera_matrixL.at<float>(0, 0)*T.at<float>(0, 0)) / val;

        cout << "x= " << x << " y= " << y << " val= " << val << " distance: " << depth << endl;
    }
}
```

I click on a point that I have measured to be 3 meters away from the stereo camera, and I get:

```
val= 31 distance: 0.590693
```

The Mat values range from 0 to 255, and its type is 0, i.e. `CV_8UC1`.

The stereo baseline is 0.0643654 m.

The focal length (fx) is 284.493 pixels.

I have also tried the following (from the question "OpenCV - compute real distance from disparity map"):

```cpp
float fMaxDistance = static_cast<float>((1. / T.at<float>(0, 0) * camera_matrixL.at<float>(0, 0)));

//outputDisparityValue is single 16-bit value from disparityMap
float fDisparity = val / (float)cv::StereoMatcher::DISP_SCALE;

float fDistance = fMaxDistance / fDisparity;
```

This gives `val= 31 distance: 2281.27`, which is closer to the truth if we assume the units are millimeters, but it is still incorrect.

Which of these approaches is correct? And where am I going wrong?

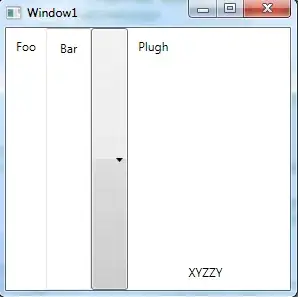

Left image, right image, and depth map. (EDIT: this depth map is from a different pair of images.)

EDIT: Based on an answer, I am trying this:

```cpp
std::vector<Eigen::Vector4d> pointcloud;

float fx = 284.492615;
float fy = 285.683197;
float cx = 424;// 425.807709;
float cy = 400;// 395.494293;

// Hand-built reprojection matrix
cv::Mat Q = cv::Mat(4,4, CV_32F);
Q.at<float>(0, 0) = 1.0;
Q.at<float>(0, 1) = 0.0;
Q.at<float>(0, 2) = 0.0;
Q.at<float>(0, 3) = -cx; //cx
Q.at<float>(1, 0) = 0.0;
Q.at<float>(1, 1) = 1.0;
Q.at<float>(1, 2) = 0.0;
Q.at<float>(1, 3) = -cy; //cy
Q.at<float>(2, 0) = 0.0;
Q.at<float>(2, 1) = 0.0;
Q.at<float>(2, 2) = 0.0;
Q.at<float>(2, 3) = -fx; //Focal
Q.at<float>(3, 0) = 0.0;
Q.at<float>(3, 1) = 0.0;
Q.at<float>(3, 2) = -1.0 / 6; //1.0/BaseLine
Q.at<float>(3, 3) = 0.0; //cx - cx'

// Reproject every pixel of the disparity image to (X, Y, Z)
cv::Mat XYZcv(depth_image.size(), CV_32FC3);
reprojectImageTo3D(depth_image, XYZcv, Q, false, CV_32F);

// Copy into the point cloud, storing the left-image intensity as the 4th component
for (int y = 0; y < XYZcv.rows; y++)
{
    for (int x = 0; x < XYZcv.cols; x++)
    {
        cv::Point3f pointOcv = XYZcv.at<cv::Point3f>(y, x);
        Eigen::Vector4d pointEigen(0, 0, 0, left.at<uchar>(y, x) / 255.0);
        pointEigen[0] = pointOcv.x;
        pointEigen[1] = pointOcv.y;
        pointEigen[2] = pointOcv.z;
        pointcloud.push_back(pointEigen);
    }
}
```

That gives me a point cloud.