I have this query on the order lines table. It's a fairly large table. I am trying to get the quantity shipped by item in the last 365 days. The query works, but it is very slow to return results. Should I use a function-based index for this? I've read a bit about them, but haven't worked with them much at all.

How can I make this query faster?

```sql
select OOL.INVENTORY_ITEM_ID
      ,SUM(nvl(OOL.shipped_QUANTITY,0)) shipped_QUANTITY_Last_365
from oe_order_lines_all OOL
where ool.actual_shipment_date>=trunc(sysdate)-365
and cancelled_flag='N'
and fulfilled_flag='Y'
group by ool.inventory_item_id;
```

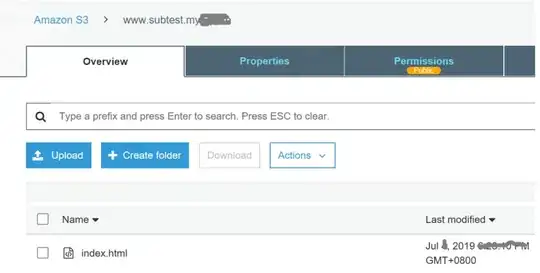

Explain plan:

Stats are up to date; we regather them once a week.
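The plan output itself is not reproduced here. As a sketch, one way to capture an Oracle execution plan as plain text (so it can be pasted into a question) is `EXPLAIN PLAN` followed by `DBMS_XPLAN.DISPLAY`. This assumes a `PLAN_TABLE` is available to the session, which is the default in modern Oracle releases.

```sql
-- Store the optimizer's estimated plan for the query in PLAN_TABLE
EXPLAIN PLAN FOR
select OOL.INVENTORY_ITEM_ID
      ,SUM(nvl(OOL.shipped_QUANTITY,0)) shipped_QUANTITY_Last_365
from oe_order_lines_all OOL
where ool.actual_shipment_date>=trunc(sysdate)-365
and cancelled_flag='N'
and fulfilled_flag='Y'
group by ool.inventory_item_id;

-- Format the most recently explained plan as text
SELECT * FROM TABLE(DBMS_XPLAN.DISPLAY);
```

`EXPLAIN PLAN` shows only the optimizer's estimate. To see actual row counts per step, the query can instead be run with the `/*+ GATHER_PLAN_STATISTICS */` hint and the plan read back with `DBMS_XPLAN.DISPLAY_CURSOR(NULL, NULL, 'ALLSTATS LAST')`.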

The query takes 30+ minutes to finish.

UPDATE

After adding this index:

The explain plan shows the query is using index now:

The query runs faster, but not 'fast': it completes in about 6 minutes.

UPDATE2

I created a covering index as suggested by Matthew and Gordon:

The query now completes in less than 1 second.
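The exact index is not shown above, so the following is only a hypothetical sketch of what a covering index for this query can look like; the index name and column order are illustrative assumptions, not necessarily what was created. The idea is that every column the query reads (the two flags, the shipment date, the item and the shipped quantity) lives in the index, so Oracle can answer the query from the index alone instead of visiting the large table once per matching row.

```sql
-- Hypothetical covering index: name and column order are illustrative only.
-- Every column the query reads is in the index, so Oracle can answer the
-- query from the index alone without visiting oe_order_lines_all.
CREATE INDEX xx_ool_ship_cov_n1 ON oe_order_lines_all (
  cancelled_flag,        -- equality predicate (= 'N')
  fulfilled_flag,        -- equality predicate (= 'Y')
  actual_shipment_date,  -- range predicate (>= trunc(sysdate)-365)
  inventory_item_id,     -- GROUP BY column
  shipped_quantity       -- column summed in the SELECT list
);
```

With an index like this in place, the plan would typically show an `INDEX RANGE SCAN` (or `INDEX FAST FULL SCAN`) with no `TABLE ACCESS BY INDEX ROWID` step. Putting the equality columns first lets the date range be scanned within the single `('N', 'Y')` prefix; other column orders can also work.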

I still wonder whether a function-based index would also have been a viable solution, and why or why not, but I don't have time to experiment with it right now.
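On the function-based index question: in the original query the function is applied to `SYSDATE` (`trunc(sysdate)-365`), not to the column, so `actual_shipment_date` can be compared directly against an ordinary B-tree index and a function-based index adds nothing there. Likewise, `nvl()` appears only inside the `SUM` in the select list, so indexing the raw `shipped_quantity` column is enough. A function-based index matters only when the predicate wraps the column itself, as in the hypothetical variant below (the `TRUNC()` around the column and the index name are made up for illustration).

```sql
-- Hypothetical variant: the predicate wraps the column in TRUNC(), so a plain
-- index on actual_shipment_date could not be used for a range scan.
-- A function-based index on the same expression makes it usable again.
CREATE INDEX xx_ool_trunc_ship_dt_n1
  ON oe_order_lines_all (TRUNC(actual_shipment_date));

select OOL.INVENTORY_ITEM_ID
      ,SUM(nvl(OOL.shipped_QUANTITY,0)) shipped_QUANTITY_Last_365
from oe_order_lines_all OOL
where TRUNC(ool.actual_shipment_date)>=trunc(sysdate)-365
and cancelled_flag='N'
and fulfilled_flag='Y'
group by ool.inventory_item_id;
```

On its own, such an index still lacks the other columns the query reads, so it would not give the table-free access that a covering index does; the expression can, however, be one column of a composite index.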