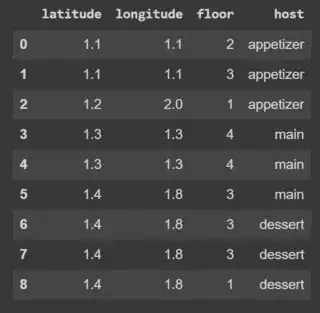

I have a long dataframe of (about 400) people who participate in an event. You see the first 9 rows below. This event is about cooking at home. As a host, people come to your place and you will cook either appetizer, main dish or dessert for your guests. The location of each host is given by the latitude, longitude and the floor:

d = {'latitude': [1.1, 1.1,1.2,1.3,1.3,1.4,1.4,1.4,1.4],

'longitude': [1.1, 1.1, 2, 1.3, 1.3, 1.8, 1.8, 1.8, 1.8],

'floor': [2,3,1,4,4,3,3,3,1],

'host': ['appetizer', 'appetizer', 'appetizer', 'main', 'main', 'main', 'dessert', 'dessert', 'dessert']}

df = pd.DataFrame(data=d)

The length of the df is always a multiple of 9. For example 27. The dataframe columns are already sorted by size, meaning that people who live close together (share same spatial coordinates) are closer in index value.

Goal:

- I want to divide the df in clusters of 9 people. (This is why length of df is multiple of 9).

- In each cluster I want 3 people to prepare the appetizer, main dish and dessert, respectively.

This itself is simple, as you can already see in my dataframe. The challenge comes with the constraint.

Constraint:

- People who share the same dish (appetizer, main, dessert) should ideally not live at the same spatial coordinates (lat., lon., floor). Of course this is not always possible, then this constraint can be neglected.

In the example index 0, 1, 3 are correct since all live at different places. However, index 3 and 4 live together as well as index 6 and 7. Therefore, the choice of setting the hosts values like it is, was not smart.

Any idea how to set the column "host" so that the constraint is fulfilled?

EDIT 3:

import pandas as pd

from math import radians, sin, cos, atan2, sqrt

from random import random

def distance(lat1, lon1, lat2, lon2):

R = 6373.0 # approximate radius of earth in km

lat1 = radians(lat1)

lon1 = radians(lon1)

lat2 = radians(lat2)

lon2 = radians(lon2)

dlon = lon2 - lon1

dlat = lat2 - lat1

a = sin(dlat / 2)**2 + cos(lat1) * cos(lat2) * sin(dlon / 2)**2

c = 2 * atan2(sqrt(a), sqrt(1 - a))

distance = (R * c)

return distance # in unit 'kilometers'

d = {

'latitude': [1.1,1.2,1.1, 1.3,1.3,1.4,1.4,1.4,1.4],

'longitude': [1.1, 2, 1.1, 1.3, 1.3, 1.8, 1.8, 1.8, 1.8],

'floor': [3,1,2,2,4,3,2,1,4],

'id': [1,2,3,4,5,6,7,8,9]

}

df = pd.DataFrame(data=d)

df.set_index('id', drop=False, inplace=True)

df['host'] = 'None'

df['group'] = 'None'

max_distance = 85

# emptyGroupList = QUERY: "SELECT people WHERE group = None ORDER BY lat,long, floor"

emptyGroupList = df.loc[df['host'] == 'None'].sort_values(['latitude', 'longitude', 'floor'], ascending=True)

print(emptyGroupList)

group = []

groupNumber = 0

for index, person in df.iterrows():

if len(group) == 3:

for group_person in group:

df.at[group_person['id'], 'group'] = groupNumber

groupNumber += 1

group = []

if len(group) == 0:

group.append(person)

else:

for group_person in group:

#print(group_person['floor'])

dist = distance(person['latitude'], person['longitude'], group_person['latitude'], group_person['longitude'])

if group_person['floor'] == 1:

dist=dist+1

elif group_person['floor'] == 2:

dist=dist+2

elif group_person['floor'] == 3:

dist=dist+3

elif group_person['floor'] == 4:

dist=dist+4

else:

dist=dist+5

if 0 < dist <= max_distance:

group.append(person)

print(df)

- Note, that I added the line group=[ ] to your code-example!

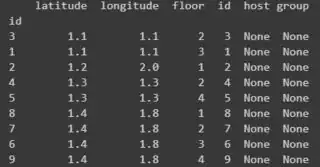

This is how the data looks at the start:

After applying the algorithm the first time:

- This looks wrong since index 4 and 5 share same location. It is strange, because we actually say in the code, that the distance should be larger 0.

- Applying the for-loop multiple times does not effect all person in the group. That means, at the end, people are without a group. My goal is, that every person gets a group (appertizer, main, dessert).

- Maybe it is better to wrapp everything into a function and apply it multiple times until condition is satisfied? But before doing that, issue (1) and (2) need to be solved.

- You said there are 2 ways to include a new person to a group. I do not have any preference. The simples way is the best way.