I'm writing a shader for Unity and I don't understand what is happening here. I have a sphere with a material attached, and the material uses a shader. The shader is very simple: it generates some simplex noise, then uses that as the color of the sphere.

Shader code:

```hlsl
Shader "SimplexTest"
{
    Properties
    {
        _SimplexScale("Simplex Scale", Vector) = (4.0,4.0,4.0,1.0)
    }
    SubShader
    {
        Tags { "RenderType"="Opaque" "Queue"="Geometry" }
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag

            #include "UnityCG.cginc"
            #include "SimplexNoise3D.hlsl"

            float3 _SimplexScale;

            struct appdata
            {
                float4 vertex : POSITION;
            };

            struct v2f
            {
                float4 position : SV_POSITION;
                float4 color : COLOR0;
            };

            v2f vert (appdata v)
            {
                v2f o;
                float4 worldPosition = mul(unity_ObjectToWorld, v.vertex);
                o.position = UnityObjectToClipPos(v.vertex);
                // .xyz matches the float3 scale (avoids an implicit truncation warning)
                float small = snoise(_SimplexScale * worldPosition.xyz);
                // o.color = small * 10; // Non-Saturated (A) Version
                o.color = saturate(small * 10); // Saturated (B) Version
                return o;
            }

            fixed4 frag (v2f i) : SV_Target
            {
                return i.color;
            }
            ENDCG
        }
    }
}
```

The simplex noise function (`snoise`, included from `SimplexNoise3D.hlsl`) is from Keijiro's GitHub.

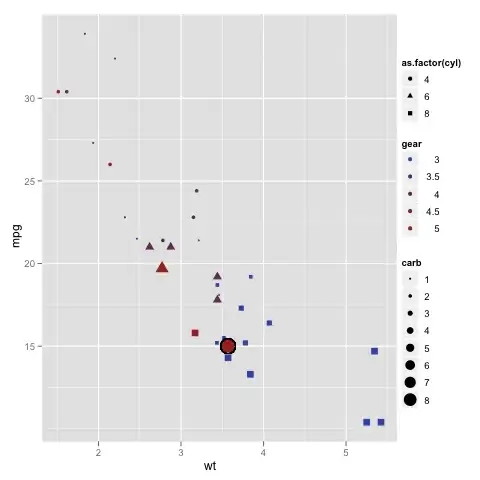

The non-saturated version (Version A) looks as expected: multiplying the simplex noise by 10 produces a series of white and black splotches. The saturated version (Version B) should, in theory, just clamp the white splotches at 1 and the black ones at 0. Instead, it seems to create a whole new gradient, and I don't know why.
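To check the math outside the GPU, here is a rough CPU-side sketch of the two versions along one triangle edge. It assumes that the `v2f.color` value written in `vert` is interpolated between vertices before `frag` sees it (modeled here as a plain linear `lerp`, a simplification), and that the final color is clamped to [0, 1] by the render target. The helper names (`saturate`, `lerp`, `version_a`, `version_b`) and the vertex values are hypothetical and only for illustration.

```python
# Rough CPU-side model of the two shader versions along one triangle edge.
# Assumptions (hypothetical, for illustration only):
#   - the vertex-stage color is linearly interpolated across the edge;
#   - the final color is clamped to [0, 1] by the render target.


def saturate(x: float) -> float:
    """Clamp to [0, 1], like HLSL's saturate()."""
    return min(max(x, 0.0), 1.0)


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation, standing in for interpolation of a v2f field."""
    return a + (b - a) * t


def version_a(v0: float, v1: float, t: float) -> float:
    # Version A: the raw values (noise * 10) are interpolated, and the
    # clamp only happens at the end (assumed render-target clamp).
    return saturate(lerp(v0, v1, t))


def version_b(v0: float, v1: float, t: float) -> float:
    # Version B: saturate() runs per vertex, so only the clamped
    # endpoint values are interpolated across the edge.
    return lerp(saturate(v0), saturate(v1), t)


# Hypothetical vertex values: noise of -0.3 and 0.3 after the * 10 in vert().
v0, v1 = -3.0, 3.0
for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"t={t:.2f}  A={version_a(v0, v1, t):.2f}  B={version_b(v0, v1, t):.2f}")
```

Under these assumptions, Version A only ramps from 0 to 1 between t = 0.5 and t ≈ 0.67 (a sharp edge), while Version B ramps across the entire edge, because the values being interpolated are already clamped to 0 and 1 at the vertices.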

I assume I'm missing some key assumption, but it isn't clear to me what that would be, since the math seems correct.