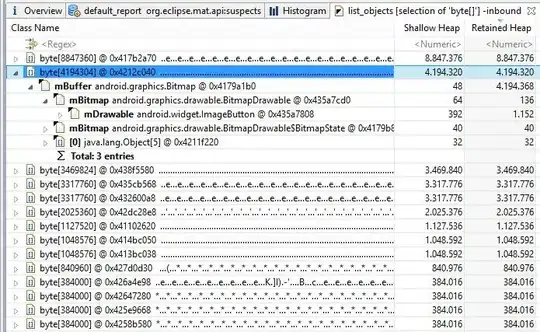

First, by default TF allocates most, if not all, of the available GPU memory when it starts. This actually lets TF use memory more effectively. To change this behavior, set the environment variable `TF_FORCE_GPU_ALLOW_GROWTH=true` (e.g. `export TF_FORCE_GPU_ALLOW_GROWTH=true`). More options are available here.
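If you would rather do this from inside a Python script, here is a minimal sketch. It assumes TensorFlow 2.x, where `tf.config.list_physical_devices` and `tf.config.experimental.set_memory_growth` exist. The helper name `enable_gpu_memory_growth` is hypothetical. The environment variable only takes effect if it is set before TF initializes the GPU, so call the helper before TensorFlow is imported anywhere else. The `find_spec` guard just lets the sketch run on machines without TensorFlow.

```python
import importlib.util
import os


def enable_gpu_memory_growth():
    """Ask TF to grow GPU memory on demand instead of grabbing it all upfront."""
    # Must be set before TensorFlow initializes the GPU.
    os.environ["TF_FORCE_GPU_ALLOW_GROWTH"] = "true"

    # Skip the TF-specific part if TensorFlow is not installed.
    if importlib.util.find_spec("tensorflow") is None:
        return []

    import tensorflow as tf

    # Per-device equivalent of the environment variable (TF 2.x API).
    gpus = tf.config.list_physical_devices("GPU")
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
    return gpus
```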

Even once you've done that, nvidia-smi will still report inflated memory usage. That is because nvidia-smi reports the memory TF has allocated, while the profiler reports the peak memory actually in use.

TF uses BFC (best-fit with coalescing) as its memory allocator. Whenever TF runs out of, say, 4 GB of memory, it allocates twice that amount, 8 GB. The next time, it tries to allocate 16 GB. So the program might use only 9 GB at peak, yet nvidia-smi would report the 16 GB allocation. Also, BFC is not the only thing that allocates GPU memory in TensorFlow (the CUDA context itself takes some), so actual usage can be 9 GB plus a bit more.
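To make the gap between reserved and used memory concrete, here is a toy Python model of the doubling behavior described above. It is a simplification, not TF's actual BFC code. The real allocator grows in regions with its own sizing rules, so exact numbers will differ. The function name `simulate_doubling` and the 4 GB starting size are illustrative assumptions.

```python
def simulate_doubling(deltas_gb, initial_gb=4):
    """Toy model of a grow-by-doubling allocator (not TensorFlow's real BFC).

    deltas_gb: allocations (+) and frees (-) in GB, applied in order.
    Returns (peak_in_use, reserved) in GB.
    """
    reserved = 0  # roughly what nvidia-smi would show
    in_use = 0    # what the program actually holds right now
    peak = 0      # roughly what a profiler reports as peak usage
    for delta in deltas_gb:
        in_use += delta
        peak = max(peak, in_use)
        # Out of reserved memory: reserve initial_gb first, then double.
        while in_use > reserved:
            reserved = initial_gb if reserved == 0 else reserved * 2
        # In this model, frees never shrink the reservation.
    return peak, reserved
```

For example, `simulate_doubling([3, 3, 3, -6])` returns `(9, 16)`: the program peaks at 9 GB, but 16 GB stays reserved, which matches the scenario above.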

One more comment: TensorFlow's native tools for reporting memory usage have not been particularly precise in the past. So I would venture that the profiler may actually somewhat underestimate peak memory usage.

Here is some info on memory management https://github.com/miglopst/cs263_spring2018/wiki/Memory-management-for-tensorflow

A somewhat more advanced tool for checking memory usage: https://github.com/yaroslavvb/memory_util