I searched existing questions to see whether anyone had encountered this, but found nothing relevant, so here is my question:

My code runs fine as plain Python, but after I compile it with Cython it exits between the first process and the second.

My code looks like:

    import dummyC as dummy
    import numpy as np
    import math
    import logging
    import time
    import concurrent.futures as cf
    from multiprocessing import freeze_support

    # Named start_time (not start) so the start() function below does not overwrite it
    start_time = time.time()

    format = "%(asctime)s: %(message)s"
    logging.basicConfig(format=format, level=logging.INFO, datefmt="%H:%M:%S")
    logging.info("Here we go!")

    #path1="Y:/data/remotesensing/satellite/VIIRS/L1B/Pilot Areas/Fire/Observation/VNP02IMG.A2012164.1742.001.2017287025318.nc"
    #path2="Y:/data/remotesensing/satellite/VIIRS/L1B/Pilot Areas/Fire/Geolocation/VNP03IMG.A2012164.1742.001.2017287005326.nc"
    path1 = "Y:/data/remotesensing/satellite/VIIRS/L1B/Pilot Areas/Fire/Observation/VNP02IMG.A2012152.1000.001.2017287002855.nc"
    path2 = "Y:/data/remotesensing/satellite/VIIRS/L1B/Pilot Areas/Fire/Geolocation/VNP03IMG.A2012152.1000.001.2017286230211.nc"

    fd = dummy.fireData(path1, path2)

    def thread_me(arr):
        # The algorithm here is proprietary, but also not the issue as it works pre-Cython
        x = 1  # Dummy code that produces the same issue

    def start():
        if __name__ == '__main__':
            freeze_support()
            # Note: `lines` and `pix` are not defined in this excerpt
            arg = [[0,lines//4,0,pix//4],[0,lines//4,pix//4,pix//2],[0,lines//4,pix//2,(pix//4+pix//2)],[0,lines//4,(pix//4+pix//2),pix],
                   [lines//4,lines//2,0,pix//4],[lines//4,lines//2,pix//4,pix//2],[lines//4,lines//2,pix//2,(pix//4+pix//2)],[lines//4,lines//2,(pix//4+pix//2),pix],
                   [lines//2,(lines//2+lines//4),0,pix//4],[lines//2,(lines//2+lines//4),pix//4,pix//2],[lines//2,(lines//2+lines//4),pix//2,(pix//4+pix//2)],[lines//2,(lines//2+lines//4),(pix//4+pix//2),pix],
                   [(lines//2+lines//4),lines,0,pix//4],[(lines//2+lines//4),lines,pix//4,pix//2],[(lines//2+lines//4),lines,pix//2,(pix//4+pix//2)],[(lines//2+lines//4),lines,(pix//4+pix//2),pix]]

            with cf.ProcessPoolExecutor(max_workers=4) as executor:
                output = executor.map(thread_me, arg)
                executor.shutdown(wait=True)

            output = np.array(list(output))
            numFire = 0
            file = open("NoFirePoints.txt", "w")
            for i in range(len(output)):
                for j in range(len(output[i])):
                    numFire += 1
                    print(output[i][j][0], output[i][j][1], output[i][j][2])
                    tempStr = ' '.join([str(elem) for elem in output[i][j]])
                    tempStr = tempStr + '\n'
                    file.write(tempStr)
            print(fd.firePoints, "Fire points")
            logging.info("%d fires detected" % (numFire))
            file.close()
            # Elapsed time in minutes
            print(str((time.time() - start_time) / 60))

    start()
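
For readability: the sixteen hand-written entries in `arg` form a 4x4 grid of tiles, each of the form `[line_start, line_end, pix_start, pix_end]`. Below is a minimal sketch that should build the same list, assuming `lines` and `pix` are the image dimensions. `make_tiles` is a hypothetical helper name used only for illustration; it is not part of my actual program.

```python
def make_tiles(lines, pix):
    """Build the 4x4 grid of [line_start, line_end, pix_start, pix_end] bounds.

    Hypothetical helper: uses the same integer-division edges as the
    hand-written `arg` list, in the same row-major order.
    """
    line_edges = [0, lines // 4, lines // 2, lines // 2 + lines // 4, lines]
    pix_edges = [0, pix // 4, pix // 2, pix // 4 + pix // 2, pix]
    return [
        [line_edges[r], line_edges[r + 1], pix_edges[c], pix_edges[c + 1]]
        for r in range(4)   # rows of tiles (line ranges)
        for c in range(4)   # columns of tiles (pixel ranges)
    ]
```

With this, `arg = make_tiles(lines, pix)` would replace the literal list; the behaviour of the pool itself is unchanged.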

I then call start() from another program so that I can compile this one. Any ideas as to why it exits after the first iteration?

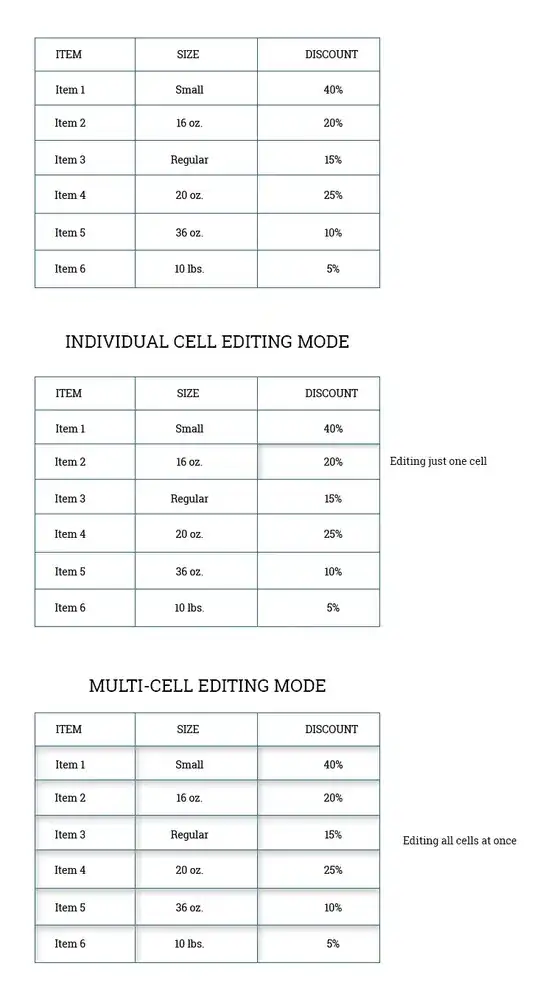

Image of what my execution looks like:

Note that the "Here we go" is a trace, and will be removed as soon as I figure out why it won't execute further. Each message is from a process starting. Also that Untitled0.py is the program running the compiled version and FireDetection.py is the uncompiled version
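
One detail that may or may not matter: because `start()` is called from another program, the module is imported rather than run as a script, and an imported module's `__name__` is its module name rather than `'__main__'`. The sketch below shows that general behaviour with a hypothetical pure-Python stand-in module called `fire_module`. It is not my compiled extension, so it only illustrates the import behaviour and may not be the cause of my problem.

```python
import importlib.util
import pathlib
import tempfile

# Stand-in module source (hypothetical): the guard sits inside the function,
# mirroring where it is placed in my code.
MODULE_SOURCE = '''
def start():
    if __name__ == '__main__':
        return "guard passed"
    return "guard skipped, __name__ is " + repr(__name__)
'''

def call_start_from_imported_module():
    """Write the stand-in module to a temp dir, import it, and call start()."""
    with tempfile.TemporaryDirectory() as tmp:
        path = pathlib.Path(tmp) / "fire_module.py"
        path.write_text(MODULE_SOURCE)
        spec = importlib.util.spec_from_file_location("fire_module", path)
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)  # runs the module body, like an import
        return module.start()

result = call_start_from_imported_module()
print(result)
```

If this also applies to the compiled module, the body of `start()` would be skipped when called from the launcher, and the guard would belong in the launcher script instead.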

Thanks in advance for your help!