An ULTRA pipeline is always running, which enables it to consume documents continuously from external sources without increasing latency.

One solution I've tried is stopping the instance and invoking it again using the REST Post Snap; however, it has been unsuccessful so far.

This, however, defeats the purpose of an ULTRA task.

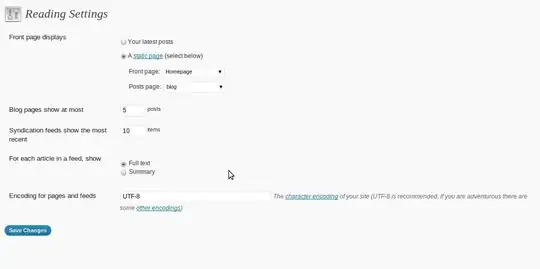

You can get the snap-wise execution timings from the dashboard. Please refer to the following screenshot.

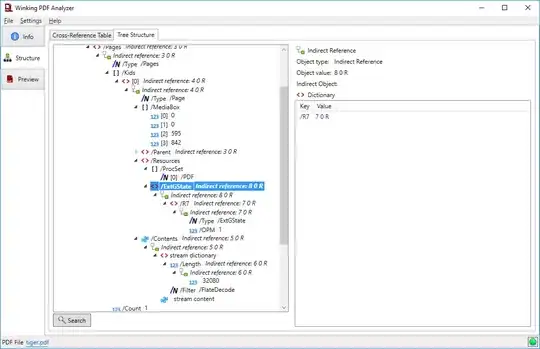

Another thing you can get from the dashboard is the logs for a particular run of the ULTRA.

To display details about the Pipeline Execution Runtime Logs for each Task, click the icon in the status column.

Note: Logs keep rolling to backup based on the number of pipelines and the size configured in the snaplex properties.


Custom logging for capturing timings

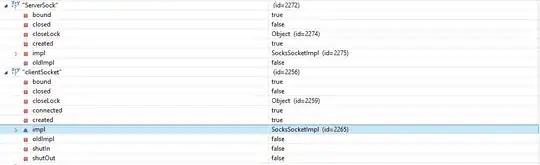

But if you want the timings in a log from which you can generate a report, you have to add that logging to your ULTRA pipeline logic (or consume the SnapLogic logging APIs, similar to what you have done to enable/disable your ULTRA task).

We have a similar requirement: we capture the timings of each snap execution and dump them in a file, which we can then read and process as and when required. This gives us more control over what we log and how the data looks. The only drawback is that it also adds a lot of complexity to the code.

A few points to remember regarding this approach:

- Do not use a File Writer snap in your ULTRA pipeline as you might lose lineage information and cause the pipeline to fail

- Create a simple lightweight common pipeline that will do the logging and then use a Pipeline Execute snap in your ULTRA pipeline to utilize this common pipeline

- Do not reuse the same execution of the logging pipeline

- Pass the log information to this pipeline as a pipeline parameter as opposed to passing it directly to an open input view; do not keep the input and output of the common pipeline open

- Also, make sure you capture the pipeline parameter in the common pipeline so that you can view the captured parameter in the dashboard
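Once the timings are in a file, generating a report is mostly a matter of reading the file back and aggregating. Here is a minimal sketch of that step in Python, run outside SnapLogic. The file layout is purely an assumption for illustration: one JSON object per line, with hypothetical `snap` and `duration_ms` fields. Adjust the field names to whatever your logging pipeline actually writes.

```python
import json
from collections import defaultdict
from statistics import mean


def summarize_timings(lines):
    """Aggregate per-snap durations from JSON Lines log records.

    Assumes each non-empty line is a JSON object with the hypothetical
    fields "snap" (name) and "duration_ms" (number).
    """
    durations = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        durations[record["snap"]].append(record["duration_ms"])
    return {
        snap: {"count": len(values), "avg_ms": mean(values), "max_ms": max(values)}
        for snap, values in durations.items()
    }


# Hypothetical sample records standing in for the contents of the log file.
sample_log = [
    '{"snap": "Mapper", "duration_ms": 12}',
    '{"snap": "REST Post", "duration_ms": 240}',
    '{"snap": "Mapper", "duration_ms": 18}',
]

report = summarize_timings(sample_log)
for snap, stats in sorted(report.items()):
    print(f"{snap}: n={stats['count']} avg={stats['avg_ms']:.1f} ms max={stats['max_ms']} ms")
```

To run it against a real file, pass an open file handle instead of the sample list, for example `summarize_timings(open("timings.log"))`, where the file name is again just a placeholder.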