For a kernel density estimate (KDE), `scipy.stats.gaussian_kde` fits a smooth density function to the data by summing a Gaussian kernel centered on each data point.
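A minimal sketch of what `gaussian_kde` returns, assuming standard-normal sample data generated with NumPy's `default_rng` (the seed and sample size are arbitrary): the result is a callable object that can be evaluated on any grid, and the area under it is one.

```python
import numpy as np
import scipy.stats as stats

rng = np.random.default_rng(0)          # arbitrary seed for reproducibility
data = rng.standard_normal(10_000)      # example data (an assumption for this sketch)

kde = stats.gaussian_kde(data)          # bandwidth chosen by Scott's rule by default
x = np.linspace(-4, 4, 9)
density = kde(x)                        # evaluate the estimated density on a grid

# the estimate is a probability density, so its total area is (numerically) one
area = kde.integrate_box_1d(-20, 20)
print(density.round(3))
print(round(area, 4))
```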

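To draw just a Gaussian normal curve, there is `scipy.stats.norm`. Calling `stats.norm.pdf(x, loc=mean, scale=std)` shifts and stretches the standard curve to match the data. This equals `stats.norm.pdf((x - mean) / std) / std`: subtracting the mean and dividing by the standard deviation adapts the position and width, and the final division by the standard deviation keeps the area equal to one.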
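A quick numerical check, with arbitrary values for the mean and standard deviation, that passing `loc` and `scale` gives the same curve as standardizing `x` and dividing by the standard deviation. Leaving out that last division multiplies the area under the curve by the standard deviation.

```python
import numpy as np
import scipy.stats as stats

mean, std = 0.3, 1.7                    # arbitrary example parameters
x = np.linspace(-8, 8, 2001)
dx = x[1] - x[0]

pdf_loc_scale = stats.norm.pdf(x, loc=mean, scale=std)
pdf_manual = stats.norm.pdf((x - mean) / std) / std   # the same curve, written out by hand
pdf_unscaled = stats.norm.pdf((x - mean) / std)       # missing the 1/std factor

# Riemann-sum approximations of the area under each curve
area_scaled = np.sum(pdf_loc_scale) * dx
area_unscaled = np.sum(pdf_unscaled) * dx

print(np.allclose(pdf_loc_scale, pdf_manual))   # True
print(round(area_scaled, 3), round(area_unscaled, 3))
```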

Both curves are drawn so that the area under the curve is one. To match a histogram of raw counts, multiply them by the number of data points times the bin width. Alternatively, leave the curves unscaled and normalize the histogram instead with `hist(..., density=True)`.
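The two scalings can be compared with `np.histogram`, which, like `hist`, accepts `density=True`. The sample data here is an assumption; the 40 bins over (-4, 4) match the demo code. One subtlety: `density=True` normalizes by the samples that fall inside `range`, so `len(data) * binwidth` matches it only when no samples lie outside the range.

```python
import numpy as np

rng = np.random.default_rng(1)          # arbitrary seed
data = rng.standard_normal(10_000)      # example data (an assumption for this sketch)

bins, lo, hi = 40, -4, 4
binwidth = (hi - lo) / bins

counts, edges = np.histogram(data, bins=bins, range=(lo, hi))
density, _ = np.histogram(data, bins=bins, range=(lo, hi), density=True)

# density=True divides the counts by (samples inside the range) * bin width
print(np.allclose(density, counts / (counts.sum() * binwidth)))   # True

# the density-normalized histogram has unit area, like the pdf and KDE curves
print(np.sum(density * np.diff(edges)))
```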

In the demo code the data is deliberately distorted to illustrate the difference between the KDE and the Gaussian normal.
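For reference, the undistorted Box-Muller transform draws its first input from `uniform(0, 1)`. The sketch below contrasts that with the distorted draw used in the demo, using `default_rng` and a fixed seed (both assumptions). Because the distorted input never exceeds 0.8, the radius never drops below `sqrt(-2*log(0.8))` (about 0.67), and the spread of the samples is larger than one.

```python
import numpy as np

def box_muller(u1, u2):
    """Box-Muller transform; with u1, u2 uniform on (0, 1] it yields two N(0, 1) samples.
    Also returns the radius r for inspection."""
    r = np.sqrt(-2 * np.log(u1))
    theta = 2 * np.pi * u2
    return r * np.cos(theta), r * np.sin(theta), r

rng = np.random.default_rng(2)          # arbitrary seed
N = 100_000

# correct input: 1 - random() is uniform on (0, 1], which avoids log(0)
z1_ok, z2_ok, r_ok = box_muller(1 - rng.random(N), rng.random(N))

# distorted input as in the demo: uniform on (0, 0.4) or (0, 0.8), never above 0.8
u1_bad = rng.integers(1, 3, N) * rng.uniform(0, 0.4, N)
z1_bad, z2_bad, r_bad = box_muller(u1_bad, rng.random(N))

print(round(z1_ok.std(), 2), round(z1_bad.std(), 2))   # roughly 1.0 vs roughly 1.25
print(r_bad.min() >= np.sqrt(-2 * np.log(0.8)))         # True
```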

```python
import numpy as np
import matplotlib.pyplot as plt
import scipy.stats as stats

x = np.linspace(-4, 4, 1000)
N = 10000

# Box-Muller transform with a deliberately distorted first input:
# a correct version would draw z1 from uniform(0, 1)
z1 = np.random.randint(1, 3, N) * np.random.uniform(0, .4, N)
z2 = np.random.uniform(0, 1, N)
R_sq = -2 * np.log(z1)
theta = 2 * np.pi * z2
z1 = np.sqrt(R_sq) * np.cos(theta)
z2 = np.sqrt(R_sq) * np.sin(theta)

fig = plt.figure(figsize=(12, 4))
for ind_subplot, zi, col in zip((1, 2), (z1, z2), ('crimson', 'dodgerblue')):
    ax = fig.add_subplot(1, 2, ind_subplot)
    ax.hist(zi, bins=40, range=(-4, 4), color=col, label='histogram')
    ax.set_xlabel("z" + str(ind_subplot))
    ax.set_ylabel("frequency")

    # scale the unit-area curves to counts: number of samples times bin width
    binwidth = 8 / 40
    scale_factor = len(zi) * binwidth

    # KDE of the data shown in this subplot (zi, not always z1)
    gaussian_kde_zi = stats.gaussian_kde(zi)
    ax.plot(x, gaussian_kde_zi(x) * scale_factor, color='springgreen', linewidth=3, label='kde')

    # normal curve with the sample mean and standard deviation;
    # loc/scale also divides by std, so the area stays one
    std_zi = np.std(zi)
    mean_zi = np.mean(zi)
    ax.plot(x, stats.norm.pdf(x, loc=mean_zi, scale=std_zi) * scale_factor,
            color='black', linewidth=2, label='normal')

    ax.legend()

plt.show()
```

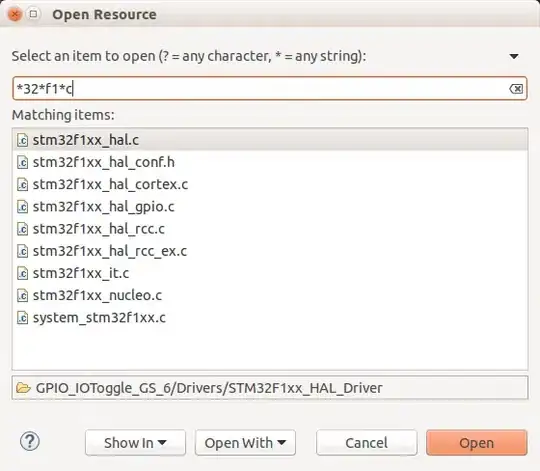

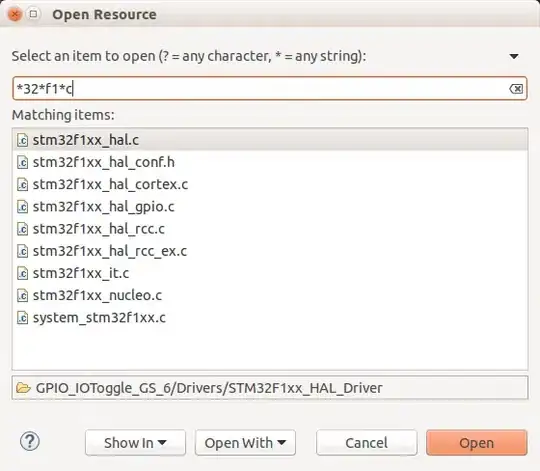

With the original, undistorted values, z1 and z2 closely follow a normal distribution, so the black line (the Gaussian normal fitted to the data) and the green line (the KDE) nearly coincide.

The code above first calculates the actual mean and standard deviation of the data. If you want to compare against a perfect Gaussian normal, use the curve with mean zero and standard deviation one instead. For undistorted data, you'll see it is almost identical on the plot.
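A sketch of that comparison for undistorted Box-Muller data, generated here with `default_rng` and a fixed seed (assumptions for the example). The fitted curve uses the sample mean and standard deviation, the reference curve uses mean zero and standard deviation one, and the largest vertical gap between them is small.

```python
import numpy as np
import scipy.stats as stats

rng = np.random.default_rng(3)          # arbitrary seed
N = 10_000

# undistorted Box-Muller samples, which should follow N(0, 1)
r = np.sqrt(-2 * np.log(1 - rng.random(N)))
z = r * np.cos(2 * np.pi * rng.random(N))

x = np.linspace(-4, 4, 1000)
fitted = stats.norm.pdf(x, loc=z.mean(), scale=z.std())   # normal with the data's mean and std
standard = stats.norm.pdf(x)                               # perfect standard normal

max_gap = np.max(np.abs(fitted - standard))
print(f"largest difference between the curves: {max_gap:.4f}")
```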