We have 10 Kafka machines running Kafka version 1.X.

This Kafka cluster is part of HDP version 2.6.5.

We noticed the following message in /var/log/kafka/server.log:

ERROR Error while accepting connection {kafka.network.Acceptor}

java.io.IOException: Too many open files

We also saw:

Broker 21 stopped fetcher for partition ...................... because they are in the failed log dir /kafka/kafka-logs {kafka.server.ReplicaManager}

and

WARN Received a PartitionLeaderEpoch assignment for an epoch < latestEpoch. This implies messages have arrived out of order. New: {epoch:0, offset:2227488}, Current: {epoch:2, offset:261} for Partition: cars-list-75 {kafka.server.epoch.LeaderEpochFileCache}

So, regarding this issue:

ERROR Error while accepting connection {kafka.network.Acceptor}

java.io.IOException: Too many open files

How can we increase the maximum number of open files in order to avoid this issue?

Update:
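For context, this is roughly how the limit that a running broker process actually has can be compared with the number of file descriptors it currently holds. It is only a minimal sketch, assuming a Linux host with /proc available and enough permissions to read the broker's /proc entries; the function names are our own, and the broker PID has to be supplied by whoever runs it.

```python
import os


def parse_max_open_files(limits_text):
    """Return (soft, hard) from the 'Max open files' row of /proc/<pid>/limits.

    Values are returned as strings because a limit may be 'unlimited'.
    Returns None if the row is not present.
    """
    label = "Max open files"
    for line in limits_text.splitlines():
        if line.startswith(label):
            # Remaining columns: soft limit, hard limit, units
            fields = line[len(label):].split()
            return fields[0], fields[1]
    return None


def open_fd_report(pid):
    """Return ((soft, hard), descriptors_in_use) for the given process ID."""
    with open(f"/proc/{pid}/limits") as f:
        limits = parse_max_open_files(f.read())
    # Each entry in /proc/<pid>/fd is one open file descriptor
    in_use = len(os.listdir(f"/proc/{pid}/fd"))
    return limits, in_use
```

Calling `open_fd_report(pid)` with the Kafka broker's PID would show whether the number of descriptors in use is close to the soft limit, which is what we would expect when "Too many open files" appears.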

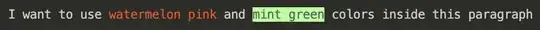

In Ambari, we saw the parameter shown in the screenshot under Kafka --> Config.

Is this the parameter that we should increase?