I'm using a GPU on Google Colab to run some deep learning code.

I got 70% of the way through training, but now I keep getting the following error:

RuntimeError: CUDA out of memory. Tried to allocate 2.56 GiB (GPU 0; 15.90 GiB total capacity; 10.38 GiB already allocated; 1.83 GiB free; 2.99 GiB cached)
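To make the numbers in that message easier to compare, here is a minimal sketch that pulls them out with regular expressions and checks the requested amount against the reported free amount. The helper name `parse_oom` is hypothetical, and the patterns assume exactly the message format shown above.

```python
import re

# The error message exactly as it was reported.
ERROR = (
    "RuntimeError: CUDA out of memory. Tried to allocate 2.56 GiB "
    "(GPU 0; 15.90 GiB total capacity; 10.38 GiB already allocated; "
    "1.83 GiB free; 2.99 GiB cached)"
)


def parse_oom(message):
    """Extract the GiB figures from an out-of-memory message (hypothetical helper)."""
    patterns = {
        "requested": r"Tried to allocate ([\d.]+) GiB",
        "total": r"([\d.]+) GiB total capacity",
        "allocated": r"([\d.]+) GiB already allocated",
        "free": r"([\d.]+) GiB free",
        "cached": r"([\d.]+) GiB cached",
    }
    return {
        name: float(re.search(pattern, message).group(1))
        for name, pattern in patterns.items()
    }


stats = parse_oom(ERROR)

# The allocation fails because the request is larger than what is reported free.
shortfall = stats["requested"] - stats["free"]
print(f"requested {stats['requested']:.2f} GiB, free {stats['free']:.2f} GiB")
print(f"short by {shortfall:.2f} GiB")
```

As far as I can tell, every figure in the message refers to the GPU (`GPU 0`), not to system RAM or disk.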

I'm trying to understand what this means. Is it talking about RAM? If so, shouldn't the code just run the same as it has been doing? When I try to restart it, the memory error appears immediately. Why would it use more RAM when I start it today than it did when I started it yesterday or the day before?

Or is this message about hard disk space? I could understand that, because the code saves things as it goes, so hard disk usage would be cumulative.

Any help would be much appreciated.

So if it's just the GPU running out of memory, could someone explain why the error message says 10.38 GiB is already allocated? How can there be memory already allocated when I have only just started running something? Could it be in use by someone else? Do I just need to wait and try again later?

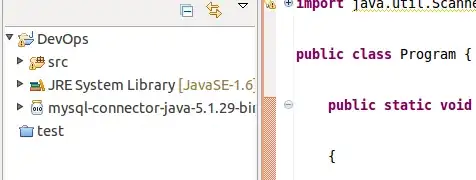

Here is a screenshot of the GPU usage when I run the code, just before it runs out of memory:

I found this post in which people seem to be having similar problems. When I run the code suggested on that thread, I see:

Gen RAM Free: 12.6 GB | Proc size: 188.8 MB

GPU RAM Free: 16280MB | Used: 0MB | Util 0% | Total 16280MB

which seems to suggest there is 16 GB of GPU RAM free.
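For comparison, the same kind of figures can be read from inside the training process itself. This is only a sketch, and it assumes the training code uses PyTorch (the wording of the error message suggests so, but that is my assumption). The function name `gpu_memory_report` is hypothetical; the `torch.cuda` calls are the ones I know of for querying memory held by the current process.

```python
def gpu_memory_report(device=0):
    """Return this process's GPU memory figures in GiB, or None if unavailable.

    Hypothetical helper; assumes PyTorch is the framework in use.
    """
    try:
        import torch
    except ImportError:
        return None  # PyTorch is not installed in this environment
    if not torch.cuda.is_available():
        return None  # no CUDA device is visible to PyTorch

    gib = 1024 ** 3
    return {
        # Total memory on the card.
        "total": torch.cuda.get_device_properties(device).total_memory / gib,
        # Memory currently occupied by tensors in this process.
        "allocated": torch.cuda.memory_allocated(device) / gib,
        # Memory held by PyTorch's caching allocator in this process.
        "reserved": torch.cuda.memory_reserved(device) / gib,
    }


report = gpu_memory_report()
if report is None:
    print("No CUDA device visible to PyTorch")
else:
    for name, value in report.items():
        print(f"{name}: {value:.2f} GiB")
```

Running this in a fresh session and again just before the failing step should show whether the allocated figure comes from my own process, which would explain why a fresh check reports 0MB used while the error reports 10.38 GiB already allocated.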

I'm confused.