[{'complete': True, 'volume': 116, 'time': '2020-01-17T19:15:00.000000000Z', 'mid': {'o': '1.10916', 'h': '1.10917', 'l': '1.10906', 'c': '1.10912'}}, {'complete': True, 'volume': 136, 'time': '2020-01-17T19:30:00.000000000Z', 'mid': {'o': '1.10914', 'h': '1.10922', 'l': '1.10908', 'c': '1.10919'}}, {'complete': True, 'volume': 223, 'time': '2020-01-17T19:45:00.000000000Z', 'mid': {'o': '1.10920', 'h': '1.10946', 'l': '1.10920', 'c': '1.10930'}}, {'complete': True, 'volume': 203, 'time': '2020-01-17T20:00:00.000000000Z', 'mid': {'o': '1.10930', 'h': '1.10931', 'l': '1.10919', 'c': '1.10928'}}, {'complete': True, 'volume': 87, 'time': '2020-01-17T20:15:00.000000000Z', 'mid': {'o': '1.10926', 'h': '1.10934', 'l': '1.10922', 'c': '1.10926'}}, {'complete': True, 'volume': 102, 'time': '2020-01-17T20:30:00.000000000Z', 'mid': {'o': '1.10926', 'h': '1.10928', 'l': '1.10913', 'c': '1.10920'}}, {'complete': True, 'volume': 277, 'time': '2020-01-17T20:45:00.000000000Z', 'mid': {'o': '1.10918', 'h': '1.10929', 'l': '1.10913', 'c': '1.10928'}}, {'complete': True, 'volume': 103, 'time': '2020-01-17T21:00:00.000000000Z', 'mid': {'o': '1.10927', 'h': '1.10929', 'l': '1.10920', 'c': '1.10924'}}, {'complete': True, 'volume': 54, 'time': '2020-01-17T21:15:00.000000000Z', 'mid': {'o': '1.10926', 'h': '1.10926', 'l': '1.10910', 'c': '1.10912'}}, {'complete': False, 'volume': 15, 'time': '2020-01-17T21:30:00.000000000Z', 'mid': {'o': '1.10913', 'h': '1.10918', 'l': '1.10912', 'c': '1.10913'}}]

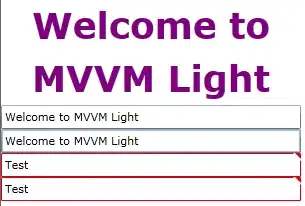

I am trying to extract the "time" and "mid" values from every entry in this list. Each 'mid' is itself a dictionary with the keys 'o', 'h', 'l', and 'c'. Is there any way to combine 'time' and these four values into a single DataFrame?
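
One possible approach, sketched under a few assumptions: the list is held in a variable called `candles` (a name made up here for illustration), pandas is the target library, and only the first two entries are reproduced to keep the example short. Each entry is flattened into a single dict holding 'time' plus the four 'mid' keys, and the resulting list of flat dicts is passed to `pd.DataFrame`.

```python
import pandas as pd

# Two entries copied from the list above; the full list works the same way.
# `candles` is a hypothetical variable name, not one from the original post.
candles = [
    {'complete': True, 'volume': 116, 'time': '2020-01-17T19:15:00.000000000Z',
     'mid': {'o': '1.10916', 'h': '1.10917', 'l': '1.10906', 'c': '1.10912'}},
    {'complete': True, 'volume': 136, 'time': '2020-01-17T19:30:00.000000000Z',
     'mid': {'o': '1.10914', 'h': '1.10922', 'l': '1.10908', 'c': '1.10919'}},
]

# Flatten each entry: keep 'time' and unpack the nested 'mid' dict beside it.
rows = [{'time': c['time'], **c['mid']} for c in candles]

# One row per entry, with columns time, o, h, l, c.
df = pd.DataFrame(rows)

# The prices arrive as strings, so convert them to floats.
price_cols = ['o', 'h', 'l', 'c']
df[price_cols] = df[price_cols].astype(float)

# Optionally parse the timestamps into timezone-aware datetimes.
df['time'] = pd.to_datetime(df['time'])

print(df)
```

If the other fields ('complete', 'volume') are wanted as well, `pd.json_normalize(candles)` might be a shorter route; it flattens the nested dictionary into columns named 'mid.o', 'mid.h', and so on.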