I have hundreds of Excel files with hundreds of tables.

I want to use python to automatically detect these tables and convert them to pandas dataframes.

Some key facts about these tables:

- These tables are not named.

- The positioning of these tables are also not known

- One sheet could have multiple tables (usually separated by a few blank lines )

- there could be annotations

- a table will always have a cluster of cells with values and rows and column labels

- the column labels might be on 1 row or 2 rows (think of nested headers in pandas df)

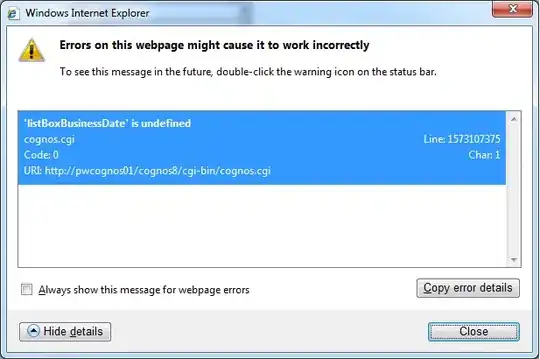

Here is an example of what the table look like.

Here is my proposed solution (I would like your input on this):

- start from the bottom right hand corner of a given sheet in excel (so working from bottom up)

- search for a cluster of cells with values in it. Say you find 4 cells (2 above and 2 below) with numbers in it. This would indicate that you have landed on a table

- you would then figure out the dimensions of this table, so the top left and bottom right, this would be the values of the df

- you would then figure out how many levels there are in the columns/header and extract the labels

- then you would figure out how many levels there are in the rows/index and extract the labels

- then move to the next table and repeat

What library to use?

- xlrd: very fast but does not read cell formatting for .xlsx files. It does provide cell format information for .xls files but not .xlsx. We are focused on .xlsx.

- openpyxl: very slow, uses tons of memory because it loads everything into memory. It does provide cell formatting information.

During my research I across these two questions which are similar but the solutions proposed are VBA based, whereas I need a purely python solution.