I am trying to scrape intraday prices for a company from this website: Enel Intraday

When the website pulls the data, it splits it into a few hundred pages, which makes it very time-consuming to retrieve. Using insomnia.rest (for the first time), I have been experimenting with the GET URL and trying to find the actual JavaScript function that returns the table values, but without success.

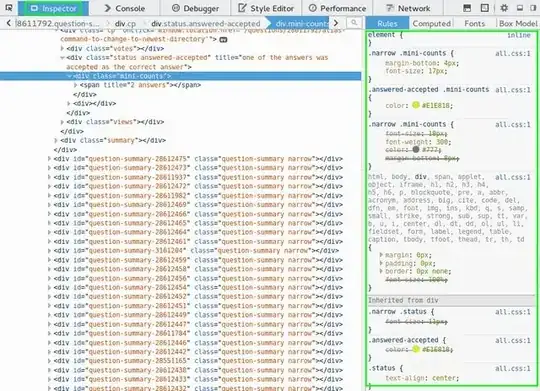

Having inspected the search button, I found that the JS function is called "searchIntraday" and that it uses a form called "intraday_form" as input.

I am basically trying to get the following data in one call rather than having to go through every page. A full day would look like this:

| Time | Last Trade Price | Var % | Last Volume | Type |
|---|---|---|---|---|
| 5:40:49 PM | 7.855 | -2.88 | 570 | AT |
| 5:38:17 PM | 7.855 | -2.88 | 300 | AT |
| 5:37:10 PM | 7.855 | -2.88 | 290 | AT |
| 5:36:06 PM | 7.855 | -2.88 | 850 | AT |
| 5:35:56 PM | 7.855 | -2.88 | 14,508,309 | UT |
| 5:29:59 PM | 7.872 | -2.67 | 260 | AT |
| 5:29:59 PM | 7.871 | -2.68 | 4,300 | AT |
| 5:29:59 PM | 7.872 | -2.67 | 439 | AT |
| 5:29:59 PM | 7.872 | -2.67 | 3,575 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 1,000 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 1,000 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 1,000 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 4,000 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 300 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 2,000 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 200 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 400 | AT |
| 5:29:59 PM | 7.87 | -2.7 | 500 | AT |
| 5:29:59 PM | 7.872 | -2.67 | 1,812 | AT |
| 5:29:59 PM | 7.872 | -2.67 | 5,000 | AT |
| ... | ... | ... | ... | ... |
| 9:00:07 AM | 8.1 | 0.15 | 933,945 | UT |

For that day, this means iterating from page 1 to page 1017!
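To make the brute-force approach concrete, here is a minimal Python sketch of the page-by-page loop I would like to avoid. It only covers parsing the rows in the layout shown above and collecting them across pages. `fetch_page` is a hypothetical placeholder for whatever request returns the rows of one page; I have not found the real endpoint or its parameters, so nothing here reflects the site's actual API.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Callable, Iterable, List


@dataclass
class Trade:
    when: time
    price: float
    var_pct: float
    volume: int
    kind: str  # the "Type" column, e.g. "AT" or "UT"


def parse_row(line: str) -> Trade:
    """Parse one row such as '5:35:56 PM 7.855 -2.88 14,508,309 UT'."""
    clock, ampm, price, var_pct, volume, kind = line.split()
    return Trade(
        # %p relies on an English/C locale for the AM/PM marker
        when=datetime.strptime(f"{clock} {ampm}", "%I:%M:%S %p").time(),
        price=float(price),
        var_pct=float(var_pct),
        volume=int(volume.replace(",", "")),  # drop thousands separators
        kind=kind,
    )


def collect_all_pages(
    fetch_page: Callable[[int], Iterable[str]],  # hypothetical: page number -> row strings
    last_page: int,
) -> List[Trade]:
    """Walk pages 1..last_page in order and parse every row."""
    trades: List[Trade] = []
    for page in range(1, last_page + 1):
        trades.extend(parse_row(row) for row in fetch_page(page))
    return trades
```

With 1017 pages this means 1017 separate requests for a single day, which is exactly why I am looking for a way to get everything in one call.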

I looked at the page below for help: