I'm trying to perform 2D image convolution with a 3x3 horizontal kernel that looks like this:

```python
import numpy as np

horizontal = np.array([
    [0, 0, 0],
    [-1, 0, 1],
    [0, 0, 0],
])
```
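For reference, here is a minimal sketch of what this kernel computes when it is slid over an image without flipping it. That is strictly cross-correlation, and it is what the loop below does; true convolution would flip the kernel, which for this antisymmetric kernel only flips the sign. Each output pixel is the right neighbour minus the left neighbour, so unlike a blur the result can be negative. The `patch` values and the helper `correlate_center_row` are made up for illustration.

```python
import numpy as np

horizontal = np.array([
    [0, 0, 0],
    [-1, 0, 1],
    [0, 0, 0],
])

# Made-up 3x5 grayscale patch: a dark-to-bright step, then bright-to-dark.
patch = np.array([
    [10, 10, 200, 200, 10],
    [10, 10, 200, 200, 10],
    [10, 10, 200, 200, 10],
])

def correlate_center_row(kernel, img):
    """Apply a 3x3 kernel (no flip) at each valid pixel of the middle row."""
    out = []
    for j in range(1, img.shape[1] - 1):
        window = img[0:3, j - 1:j + 2]  # 3x3 neighbourhood centred on (1, j)
        out.append(int(np.sum(kernel * window)))
    return out

print(correlate_center_row(horizontal, patch))  # [190, 190, -190]
```

The rising edge gives +190 and the falling edge gives -190. A negative value like this never occurs with a kernel whose weights are all non-negative.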

I'm using the convolution function below. It loops over the image, skipping a border as wide as the kernel (k_h rows and k_w columns on each side), and at each pixel multiplies the kernel with the neighbouring pixels and sums the products:

```python
import numpy as np

def perform_convolution(k_h, k_w, img_h, img_w, kernel, picture):
    # k_w = kernel width, k_h = kernel height
    # img_h = image height, img_w = image width
    conv = np.zeros(picture.shape)
    # Skip a border of k_h rows and k_w columns on each side
    for i in range(k_h, img_h - k_h):
        for j in range(k_w, img_w - k_w):
            tot = 0
            # Multiply each kernel weight by the matching pixel and sum
            for m in range(k_h):
                for n in range(k_w):
                    tot = tot + kernel[m][n] * picture[i - k_h + m][j - k_w + n]
            conv[i][j] = tot
    return conv
```
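To cross-check the loop, the same arithmetic can be written with NumPy slicing: one shifted slice per kernel entry, accumulated into the output. As written, the loop's window for output pixel (i, j) covers rows i - k_h to i - 1 and columns j - k_w to j - 1, so it ends just above and to the left of (i, j) rather than being centred on it. The sketch below reproduces that indexing exactly. `perform_convolution_sliced` is a hypothetical helper, and the random test image is made up.

```python
import numpy as np

def perform_convolution(k_h, k_w, img_h, img_w, kernel, picture):
    # The loop from the question, reformatted but otherwise unchanged.
    conv = np.zeros(picture.shape)
    for i in range(k_h, img_h - k_h):
        for j in range(k_w, img_w - k_w):
            tot = 0
            for m in range(k_h):
                for n in range(k_w):
                    tot = tot + kernel[m][n] * picture[i - k_h + m][j - k_w + n]
            conv[i][j] = tot
    return conv

def perform_convolution_sliced(kernel, picture):
    """Same sums as the loop, computed one shifted slice per kernel entry."""
    k_h, k_w = kernel.shape
    img_h, img_w = picture.shape[:2]
    out_h, out_w = img_h - 2 * k_h, img_w - 2 * k_w
    conv = np.zeros(picture.shape)
    for m in range(k_h):
        for n in range(k_w):
            # For i in [k_h, img_h - k_h), row i - k_h + m runs over m .. m + out_h - 1.
            conv[k_h:img_h - k_h, k_w:img_w - k_w] += (
                kernel[m, n] * picture[m:m + out_h, n:n + out_w]
            )
    return conv

horizontal = np.array([[0, 0, 0], [-1, 0, 1], [0, 0, 0]])
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(8, 9), dtype=np.uint8)  # made-up test image

loop_result = perform_convolution(3, 3, 8, 9, horizontal, img)
sliced_result = perform_convolution_sliced(horizontal, img)
print(np.array_equal(loop_result, sliced_result))  # True
```

Both versions produce integer-valued float64 arrays, so they can be compared exactly. The sliced version also runs much faster on full-size images.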

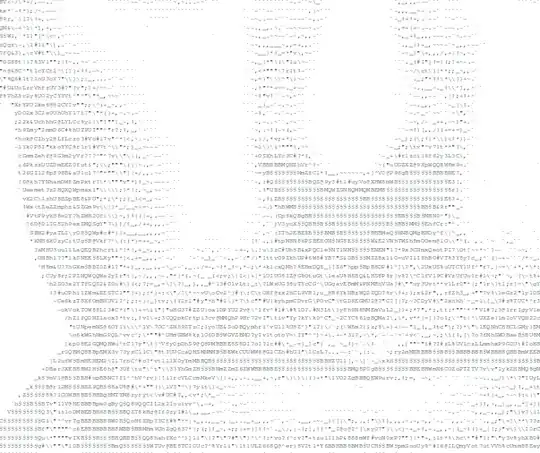

But the output I get is completely weird, as shown below:

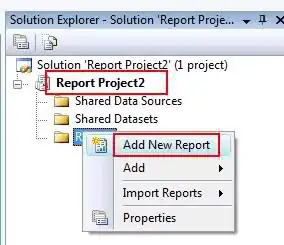

Alternatively, when I use the kernel from PIL, I get a proper blurred image like this:

Can anyone help me figure out where I'm going wrong?

I have tried the same function with box kernels and it works fine, but I can't figure out why the output with this kernel is so weird.
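The question doesn't show how `conv` is turned back into an image for display, so what follows is an assumption to check, not a diagnosis. One real difference between the two kernels is their output range. A normalized box kernel (non-negative weights summing to 1, assumed here) keeps results in 0..255. The horizontal kernel can produce anything in -255..255. If negative integers are cast to `uint8`, they wrap modulo 256, so -190 becomes 66. Out-of-range float-to-`uint8` casts are platform-dependent in NumPy, which is why the naive path below rounds to `int64` first. The helper names and response values are hypothetical.

```python
import numpy as np

# Hypothetical filter responses for three pixels.
box_response = np.array([10.0, 73.3, 200.0])     # box blur: stays in 0..255
edge_response = np.array([190.0, 0.0, -190.0])   # horizontal kernel: can be negative

def to_uint8_naive(values):
    # Round to integers, then cast: negative integers wrap modulo 256.
    return np.round(values).astype(np.int64).astype(np.uint8)

def to_uint8_abs(values):
    # One common choice for edge maps: keep the magnitude, clip to 0..255.
    return np.clip(np.abs(values), 0, 255).astype(np.uint8)

def to_uint8_rescaled(values):
    # Another choice: map -255..255 linearly onto 0..255 (zero becomes mid-grey).
    return np.clip((values + 255) / 2, 0, 255).astype(np.uint8)

print(to_uint8_naive(box_response).tolist())      # [10, 73, 200]
print(to_uint8_naive(edge_response).tolist())     # [190, 0, 66]
print(to_uint8_abs(edge_response).tolist())       # [190, 0, 190]
print(to_uint8_rescaled(edge_response).tolist())  # [222, 127, 32]
```

The box response passes through unchanged, while the edge response's negative value turns into a bright-ish 66. Taking the magnitude or rescaling are both common ways to display a gradient image. Which one fits depends on whether the sign of the edge matters.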

I have also tried splitting the image into its RGB bands and convolving each band separately, but that didn't change anything.
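A small sketch suggests why splitting the bands may make no difference. For an `(h, w, 3)` array, `picture[i][j]` is a length-3 vector, so the loop already weights and sums each channel independently. Running it per band and stacking the results gives the same numbers as running it on the whole array. The random test image is made up, and the sketch uses the loop exactly as posted.

```python
import numpy as np

def perform_convolution(k_h, k_w, img_h, img_w, kernel, picture):
    # The loop from the question, reformatted but otherwise unchanged.
    conv = np.zeros(picture.shape)
    for i in range(k_h, img_h - k_h):
        for j in range(k_w, img_w - k_w):
            tot = 0
            for m in range(k_h):
                for n in range(k_w):
                    # For an RGB picture this is a length-3 vector, so each
                    # channel is accumulated separately.
                    tot = tot + kernel[m][n] * picture[i - k_h + m][j - k_w + n]
            conv[i][j] = tot
    return conv

horizontal = np.array([[0, 0, 0], [-1, 0, 1], [0, 0, 0]])
rng = np.random.default_rng(1)
rgb = rng.integers(0, 256, size=(7, 8, 3), dtype=np.uint8)  # made-up test image

whole = perform_convolution(3, 3, 7, 8, horizontal, rgb)
per_band = np.stack(
    [perform_convolution(3, 3, 7, 8, horizontal, rgb[:, :, c]) for c in range(3)],
    axis=-1,
)
print(np.array_equal(whole, per_band))  # True
```

If the two agree, the weird output is more likely caused by the range or sign of the values than by how the channels are handled.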

The original image is this: