I'm storing country names in a SQLite database exposed through a cpprest server. My web application queries these country names, and the server returns the results as raw binary strings (octet streams) containing the length of the name followed by its characters.

I'm reading the country names into a std::string value like so:

```cpp
country->Label = std::string((const char*)sqlite3_column_text(Query.Statement, 1));
```

I then copy them into a `std::vector<char>` buffer, which is sent back through the cpprest API with:

```cpp
Concurrency::streams::bytestream::open_istream<std::vector<char>>(buffer);
```
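The client code in this question reads a 4-byte little-endian length at offset 0 and then the name bytes from offset 4, so the buffer presumably has that layout. As a sketch for testing the client decoder in isolation, the hypothetical helper `encodeLabel` below builds the same layout in JavaScript (the layout itself is an assumption inferred from the decoding code). Note that the length it writes is the number of UTF-8 bytes, which is also what `std::string::size()` reports on the C++ side, not the number of characters.

```javascript
// Hypothetical test helper: builds a buffer in the layout the client decoder
// appears to expect (assumed: uint32 little-endian byte length, then UTF-8 bytes).
function encodeLabel(label) {
  const bytes = new TextEncoder().encode(label); // UTF-8 bytes of the name
  const buffer = new ArrayBuffer(4 + bytes.length);
  // The prefix counts bytes, not characters: "Côte" is 4 characters but 5 bytes.
  new DataView(buffer).setUint32(0, bytes.length, true);
  new Uint8Array(buffer, 4).set(bytes);
  return buffer;
}

const buf = encodeLabel("Côte d'Ivoire");
console.log(new DataView(buf).getUint32(0, true)); // 14 (13 characters, "ô" takes 2 bytes)
```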

When my web application receives the data, I decode it like so:

```javascript
var data = new Uint8Array(request.response);
var dataView = new DataView(data.buffer);

var nameLength = dataView.getUint32(0, true);

var label = "";
for (var k = 0; k < nameLength; k++) {
    label += String.fromCharCode(dataView.getUint8(k + 4));
}
```

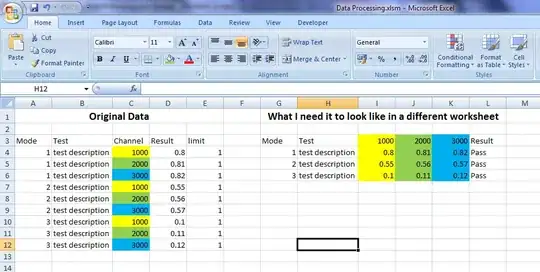

For the most part, this works fine, up until I encounter a country name that contains non ASCII characters, then I get this abomination:
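A plausible reading of the garbled output, assuming the server really does send UTF-8: the byte-by-byte loop turns each byte into the character with the same code point (effectively decoding the bytes as Latin-1), so each byte of a multi-byte sequence becomes a separate character. The sketch below reproduces that with a known UTF-8 input and compares it with decoding the whole byte sequence at once using the standard `TextDecoder`.

```javascript
// Encode a name as UTF-8 (TextEncoder always produces UTF-8).
const bytes = new TextEncoder().encode("Côte d'Ivoire");

// Same approach as the original loop: one character per byte.
let perByte = "";
for (let k = 0; k < bytes.length; k++) {
  perByte += String.fromCharCode(bytes[k]);
}

// Decoding the whole byte sequence as UTF-8 instead.
const decoded = new TextDecoder("utf-8").decode(bytes);

console.log(perByte); // CÃ´te d'Ivoire  (0xC3 becomes "Ã", 0xB4 becomes "´")
console.log(decoded); // Côte d'Ivoire
```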

My understanding of UTF-8 is that it stores ASCII characters unchanged, as single bytes, but encodes non-ASCII characters as sequences of multiple bytes.
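To make this concrete: ASCII characters keep their single byte, and everything else takes two to four bytes. The short sketch below prints the UTF-8 bytes of a few characters using `TextEncoder`; the helper name `utf8Hex` is just for illustration.

```javascript
// Hex dump of the UTF-8 bytes used to encode a string.
function utf8Hex(text) {
  const bytes = new TextEncoder().encode(text);
  return Array.from(bytes, (b) => b.toString(16).toUpperCase().padStart(2, "0")).join(" ");
}

for (const ch of ["A", "ô", "€", "😀"]) {
  console.log(ch, "->", utf8Hex(ch));
}
// A -> 41
// ô -> C3 B4
// € -> E2 82 AC
// 😀 -> F0 9F 98 80
```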

Which part of my application stack needs to be told when and where to use multiple bytes for non-ASCII characters, and how would I go about doing that? My guess is that, since the web application is the one displaying the text, this is where the change needs to happen, but I'm not sure how to do it.

Edit: To clarify, I have tried the suggested answers, but they don't seem to work either:

```javascript
var labelArray = data.subarray(4, 4 + nameLength);
var label = new TextDecoder("utf-8").decode(labelArray);
```
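One hedged way to narrow this down is to decode with `fatal: true`, so that `TextDecoder` throws a `TypeError` instead of silently substituting U+FFFD replacement characters when the bytes are not valid UTF-8. If it throws, the bytes arriving from the server are probably not UTF-8 at all (SQLite generally stores text bytes as given, so names written in another encoding such as Latin-1 would come back unchanged); if it succeeds but the text still looks wrong, the length prefix or offset is the next thing to check. `decodeLabel` is a hypothetical helper, and the layout (uint32 little-endian length at the start) is assumed from the code above.

```javascript
// Hypothetical helper: decode one length-prefixed label from an ArrayBuffer.
// Assumes a uint32 little-endian byte count at `offset`, followed by UTF-8 bytes.
function decodeLabel(buffer, offset = 0) {
  const view = new DataView(buffer);
  const nameLength = view.getUint32(offset, true);
  const bytes = new Uint8Array(buffer, offset + 4, nameLength);
  // fatal: true makes decode() throw a TypeError on malformed UTF-8
  // instead of inserting U+FFFD replacement characters.
  return new TextDecoder("utf-8", { fatal: true }).decode(bytes);
}

// "Côte" encoded as UTF-8 ("ô" = C3 B4) decodes cleanly.
const good = new Uint8Array([5, 0, 0, 0, 0x43, 0xC3, 0xB4, 0x74, 0x65]).buffer;
console.log(decodeLabel(good)); // Côte

// The same name encoded as Latin-1 ("ô" = F4) is rejected.
const latin1 = new Uint8Array([4, 0, 0, 0, 0x43, 0xF4, 0x74, 0x65]).buffer;
try {
  decodeLabel(latin1);
} catch (e) {
  console.log("not valid UTF-8:", e instanceof TypeError); // not valid UTF-8: true
}
```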

which results in this: