There are several different methods:

Chunk up the dataset (saves time in the future but needs an initial time investment)

Chunking makes many operations easier, such as shuffling.

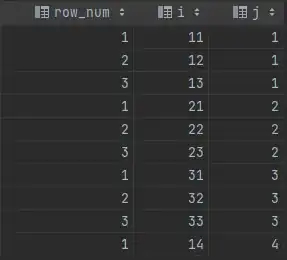

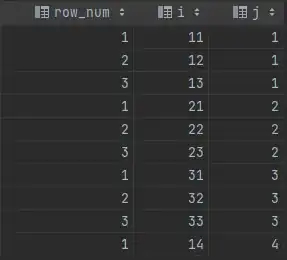

Make sure each subset/chunk is representative of the whole dataset. Each chunk file should have the same number of lines.

This can be done by appending one line to each file in turn, one file after another (round robin). You will quickly realize that opening a file to write every single line is inefficient, especially when reading and writing on the same drive.

-> Add read and write buffers that fit into memory.
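A minimal Python sketch of round-robin chunking with buffers, assuming a line-oriented text file. The function name `chunk_file`, the chunk file naming scheme and the `buffer_lines` parameter are made up for illustration. Line i goes to chunk i mod n, so every chunk gets the same number of lines (±1), and each chunk collects its lines in an in-memory write buffer that is only flushed once it is full.

```python
import os

def chunk_file(src_path, out_dir, n_chunks, buffer_lines=10_000):
    """Split src_path into n_chunks files, dealing lines out round robin.

    Hypothetical helper: names and buffer sizes are illustrative assumptions.
    """
    os.makedirs(out_dir, exist_ok=True)
    paths = [os.path.join(out_dir, f"chunk_{i:04d}.csv") for i in range(n_chunks)]
    outs = [open(p, "w", encoding="utf-8") for p in paths]
    buffers = [[] for _ in range(n_chunks)]  # one in-memory write buffer per chunk
    try:
        # a large read buffer lets the source be read in big sequential blocks
        with open(src_path, "r", encoding="utf-8", buffering=16 * 1024 * 1024) as src:
            for i, line in enumerate(src):
                idx = i % n_chunks  # round robin: line i goes to chunk i mod n_chunks
                buffers[idx].append(line)
                if len(buffers[idx]) >= buffer_lines:
                    outs[idx].writelines(buffers[idx])  # one large write instead of many small ones
                    buffers[idx].clear()
        # flush whatever is still sitting in the write buffers
        for out, buf in zip(outs, buffers):
            out.writelines(buf)
    finally:
        for out in outs:
            out.close()
    return paths
```

Because every chunk takes every n-th line, the chunks stay representative even if the source is sorted (for example by class). All chunk files are open at the same time, so keep `n_chunks` below your operating system's open-file limit.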

Choose a chunk size that fits your needs. I chose this particular size because my default text editor can still open a chunk fairly quickly.

Smaller chunks can boost performance, especially if you want to get metrics such as the class distribution: you only have to loop through one representative file to get an estimate for the whole dataset, which might be enough.

Bigger chunk files individually represent the whole dataset better, but you could just as well go through x smaller chunk files.
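The estimation step can be written down in a few lines. This sketch assumes every chunk holds about the same number of lines and the same class mix (which is what the chunking described above aims for); `estimate_total_counts` and `class_shares` are hypothetical helper names. Counts taken from the k chunks you actually read are scaled up to all N chunks.

```python
def estimate_total_counts(sample_counts, sampled_chunks, total_chunks):
    """Scale class counts measured on a few chunks up to the whole dataset.

    Assumes equally sized, equally representative chunks.
    """
    scale = total_chunks / sampled_chunks
    return {label: count * scale for label, count in sample_counts.items()}

def class_shares(counts):
    """Turn absolute counts into fractions that sum to 1."""
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}
```

For example, if 2 of 50 chunks contain 900 lines of class 0 and 100 of class 1, the estimate for the full dataset is 22,500 and 2,500, a 90/10 split. Reading more chunks does not change the formula, it only makes the estimate more reliable.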

I use C# for this because I am far more experienced with it and can therefore use its full feature set, such as splitting the reading, processing, and writing tasks onto different threads.

If you are experienced with Python or R, I suspect they offer similar functionality. Parallelizing can be a huge factor with datasets this large.
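In Python, a comparable reading / processing / writing split can be sketched with the standard `threading` and `queue` modules. This is a minimal sketch, not a drop-in tool: `run_pipeline`, `process` and `write` are hypothetical names, and there is no error handling (if `process` raises, the pipeline stalls). Bounded queues connect the stages, so a slow writer slows the reader down instead of letting memory fill up. In CPython the global interpreter lock means threads mainly overlap disk I/O with processing; for CPU-heavy parsing the `multiprocessing` module is the usual way to use several cores.

```python
import queue
import threading

_DONE = object()  # sentinel telling the next stage that no more input will come

def run_pipeline(lines, process, write, maxsize=10_000):
    """Run reader -> processor -> writer, each stage on its own thread."""
    to_process = queue.Queue(maxsize=maxsize)  # bounded: applies back-pressure
    to_write = queue.Queue(maxsize=maxsize)

    def reader():
        for line in lines:
            to_process.put(line)
        to_process.put(_DONE)

    def processor():
        while True:
            item = to_process.get()
            if item is _DONE:
                to_write.put(_DONE)
                return
            to_write.put(process(item))

    def writer():
        while True:
            item = to_write.get()
            if item is _DONE:
                return
            write(item)

    threads = [threading.Thread(target=stage) for stage in (reader, processor, writer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

With a single processor thread the output keeps the input order; adding more processor threads would need extra bookkeeping if order matters.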

Chunked datasets can be combined into one interleaved dataset, which you can process with tensor processing units (TPUs). That would probably yield some of the best performance and can be run locally as well as on the really big machines in the cloud. But this requires learning a lot about TensorFlow.
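To illustrate what "interleaved" means here: records are taken from each chunk file in turn instead of reading one file to the end before starting the next. In TensorFlow this is the job of `tf.data.Dataset.interleave` (for example over `tf.data.TextLineDataset`s, optionally with `num_parallel_calls`). The plain-Python sketch below only shows the idea, one record per file per round; `interleave` and `interleave_files` are hypothetical helpers, not the TensorFlow API.

```python
from itertools import zip_longest

_MISSING = object()  # filler for sources that have already run out

def interleave(iterables):
    """Yield one item from each source in turn until all are exhausted."""
    for group in zip_longest(*iterables, fillvalue=_MISSING):
        for item in group:
            if item is not _MISSING:
                yield item

def interleave_files(paths):
    """Interleave the lines of several chunk files, reading each one lazily."""
    files = [open(p, "r", encoding="utf-8") for p in paths]
    try:
        yield from interleave(files)
    finally:
        for f in files:
            f.close()
```

Only one line per file is held in memory at a time, so this works on chunk files of any size.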

Use a reader and read the file step by step

Instead of doing something like `all_of_it = file.read()`, you want to use some kind of stream reader. The following function reads through one of the chunk files (or your whole 300 GB dataset) line by line to count each class within the file. Because it processes one line at a time, your program will not run out of memory.

You might want to add some progress indication, such as X lines/s or X MB/s, to estimate the total processing time.

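```python
def getClassDistribution(path):
    """Count how often each class label occurs in a CSV file.

    Assumes the label is the last character of each line (a single-digit
    class), so the line never has to be split into all of its fields.
    """
    classes = dict()

    # open the file and stream through it one line at a time
    with open(path, "r", encoding="utf-8", errors="ignore") as f:
        for line in f:
            line = line.rstrip("\r\n")  # the last line may have no newline
            if line == "":
                continue  # skip blank lines instead of crashing on int("")
            label = int(line[-1])
            classes[label] = classes.get(label, 0) + 1

    return classes
```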
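Following the progress-indication tip, here is one way to print lines/s and MB/s together with a rough time-remaining estimate while streaming through a file. The function name, the `report_every` interval and the output format are assumptions for illustration. The file is opened in binary mode so that `len(line)` is the exact number of bytes read, which makes the MB/s figure and the remaining time (based on the file size from `os.path.getsize`) simple to compute.

```python
import os
import sys
import time

def count_lines_with_progress(path, report_every=1_000_000, out=sys.stderr):
    """Stream through a file, printing lines/s, MB/s and a rough ETA."""
    total_bytes = os.path.getsize(path)
    start = time.perf_counter()
    bytes_read = 0
    n_lines = 0
    with open(path, "rb") as f:  # binary mode: len(line) is the exact byte count
        for line in f:
            n_lines += 1
            bytes_read += len(line)
            if n_lines % report_every == 0:
                elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
                bytes_per_s = bytes_read / elapsed
                eta_s = (total_bytes - bytes_read) / bytes_per_s
                print(f"{n_lines / elapsed:,.0f} lines/s, "
                      f"{bytes_per_s / 1e6:.1f} MB/s, ~{eta_s:.0f} s left", file=out)
    return n_lines
```

The same counters can be dropped into `getClassDistribution` or any other line-by-line loop.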

I use a combination of chunked datasets and estimation.

Pitfalls for performance

- Whenever possible, avoid nested loops. Each loop inside another loop multiplies the complexity by n.
- Whenever possible, process the data in one go. Each loop after another adds another n (one more full pass over the data).
- If your data comes in CSV format, avoid premade functions such as `cells = int(line.Split(',')[8])`; this very quickly leads to a memory throughput bottleneck. A proper example of avoiding this can be found in `getClassDistribution`, where I only want to get the label.
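The first two points can be illustrated in Python; the helper names below are hypothetical. `count_known_ids_nested` rescans the second list for every element (n·m comparisons), while `count_known_ids_set` builds a set once and then does constant-time lookups on average (n + m). `one_pass_stats` gathers several metrics in a single loop and, like `getClassDistribution`, only slices out the last field (via `rfind`) instead of splitting the whole line.

```python
def count_known_ids_nested(ids, known):
    # O(len(ids) * len(known)): the inner loop rescans `known` for every id
    hits = 0
    for i in ids:
        for k in known:
            if i == k:
                hits += 1
                break
    return hits

def count_known_ids_set(ids, known):
    # O(len(ids) + len(known)): build the set once, then each lookup is O(1) on average
    known_set = set(known)
    return sum(1 for i in ids if i in known_set)

def one_pass_stats(lines):
    # several metrics from ONE loop instead of one loop per metric
    n_lines = 0
    n_chars = 0
    label_counts = {}
    for line in lines:
        n_lines += 1
        n_chars += len(line)
        line = line.rstrip("\n")
        # take only the text after the last comma instead of splitting the whole line
        label = line[line.rfind(",") + 1:]
        label_counts[label] = label_counts.get(label, 0) + 1
    return n_lines, n_chars, label_counts
```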

The following C# function splits a CSV line into its elements very quickly, without calling `Split`.

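```csharp
using System;
using System.Globalization;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

// Call the function
ThreadPool.QueueUserWorkItem((c) => AnalyzeLine("05.02.2020,12.20,10.13").Wait());

// Parallelize this on multiple cores/threads for ultimate performance
// (PriceElement and Observate are defined elsewhere)
private async Task AnalyzeLine(string line)
{
    PriceElement elementToAdd = new PriceElement();
    int counter = 0;
    // StringBuilder avoids allocating a new string for every character, unlike temp += c
    StringBuilder temp = new StringBuilder();

    // Store the field collected in temp into the property for column `counter`
    void StoreField()
    {
        string field = temp.ToString();
        switch (counter)
        {
            case 0:
                elementToAdd.spotTime = DateTime.Parse(field, CultureInfo.InvariantCulture);
                break;
            case 1:
                // InvariantCulture so "12.20" uses '.' as the decimal separator on any machine
                elementToAdd.buyPrice = decimal.Parse(field, CultureInfo.InvariantCulture);
                break;
            case 2:
                elementToAdd.sellPrice = decimal.Parse(field, CultureInfo.InvariantCulture);
                break;
        }
        temp.Clear();
        counter++;
    }

    foreach (char c in line)
    {
        if (c == ',') StoreField();
        else temp.Append(c);
    }
    // The last field is not followed by a comma, so store it after the loop
    StoreField();

    // compare the price element to conditions on another thread
    Observate(elementToAdd);
}
```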

Create a database and load the data

When processing CSV-like data, you can load the data into a database.

Databases are built to handle huge amounts of data, and you can expect very high performance.
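As a small example of the database route, here is a sketch using Python's built-in `sqlite3` module. The table and column names and the two-field `feature,label` line format are hypothetical assumptions. Rows are inserted in batches with `executemany` and committed once, and the class distribution then becomes a single `GROUP BY` query.

```python
import sqlite3

def load_csv_lines(conn, lines, batch_size=50_000):
    """Load "feature,label" lines into SQLite in batches (assumed two-field format)."""
    conn.execute("CREATE TABLE IF NOT EXISTS samples (feature TEXT, label INTEGER)")
    batch = []
    for line in lines:
        feature, label = line.rstrip("\n").rsplit(",", 1)  # split once, from the right
        batch.append((feature, int(label)))
        if len(batch) >= batch_size:
            conn.executemany("INSERT INTO samples VALUES (?, ?)", batch)
            batch.clear()
    if batch:
        conn.executemany("INSERT INTO samples VALUES (?, ?)", batch)
    conn.commit()

def class_distribution(conn):
    """Let the database do the counting instead of a Python loop."""
    rows = conn.execute("SELECT label, COUNT(*) FROM samples GROUP BY label ORDER BY label")
    return dict(rows.fetchall())
```

For a real dataset you would pass a file path to `sqlite3.connect` instead of `":memory:"`; an index on `label` would speed up repeated queries at the cost of even more disk space.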

A database will likely use far more disk space than the raw data. This is one reason why I moved away from using a database.

Hardware Optimisations

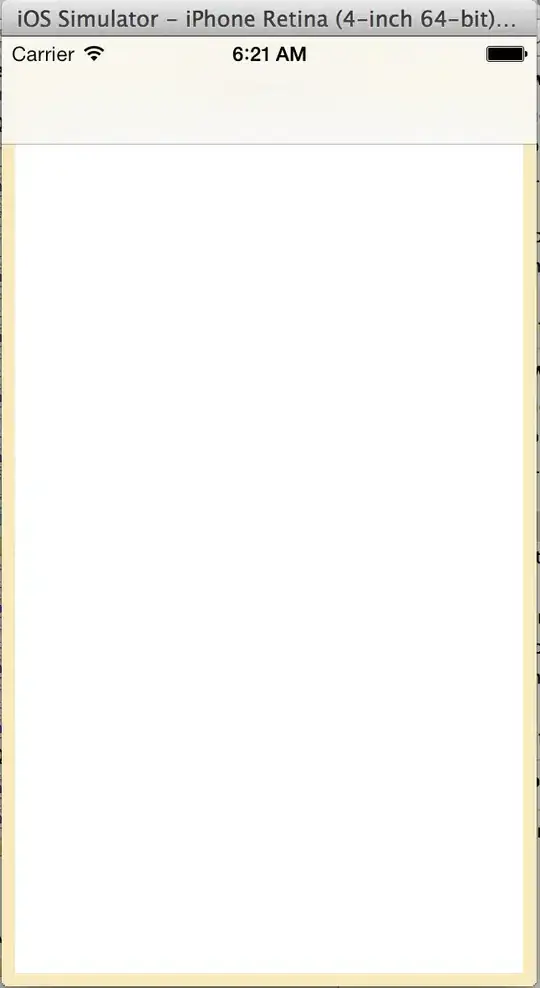

If your code is well optimized, your bottleneck will most likely be the throughput of your drive.
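To check whether the drive really is the bottleneck, you can compare your job's speed with a raw sequential read of the same file that does no processing at all. A minimal sketch; the function name and the 8 MB block size are assumptions. If your job gets close to this number, the drive is the limit; if it is far below, the code is. Keep in mind that the operating system caches recently read files in RAM, so a second run on the same file can report far more than the drive can actually deliver.

```python
import os
import time

def read_throughput_mb_s(path, block_size=8 * 1024 * 1024):
    """Read a file sequentially in large blocks and return the rate in MB/s."""
    start = time.perf_counter()
    total = 0
    with open(path, "rb", buffering=0) as f:  # unbuffered: we already read big blocks
        while True:
            block = f.read(block_size)
            if not block:
                break
            total += len(block)
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
    return total / 1e6 / elapsed
```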

- If the data fits onto your local drive, use it locally, as this eliminates network latency (imagine 2-5 ms per record on a local network and 10-100 ms for remote locations).

- Use a modern drive. A 1 TB NVMe SSD costs around 130 today (Intel 600p 1 TB). An NVMe SSD uses PCIe and is around 5 times faster than a normal (SATA) SSD and 50 times faster than a normal hard drive, especially when writing to many different locations quickly (as when chunking up data). SSDs have caught up vastly in capacity in recent years, and for a task like this one would make a huge difference.
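To see why per-record network latency matters so much, multiply it by the number of records. Purely for illustration, assume records of about 300 bytes, so a 300 GB dataset holds roughly 10^9 records, and assume each record is fetched one after another (no overlapping requests). With the 2 ms lower bound for a local network:

$$
T = N \cdot t = 10^{9} \times 2\,\text{ms} = 2 \times 10^{6}\,\text{s} \approx 23\ \text{days}
$$

At 5 ms per record the same calculation gives about 58 days, which is why keeping the data on a local drive pays off.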

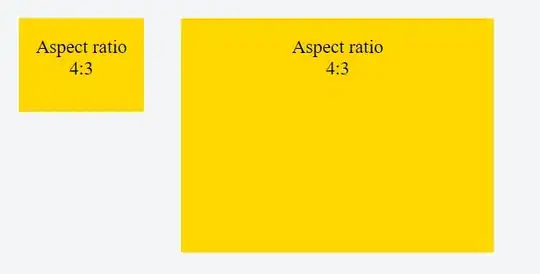

The screenshot above compares TensorFlow training performance with the same data on the same machine: once stored locally on a standard SSD and once on network-attached storage in the local network (normal hard disk).