I'm working on fitting a multi-level logistic regression model with group level predictors. I am using JAGS via R. I am getting different behaviors when I fit the model with the runjags versus the R2Jags packages.

I've tried to write a reproducible example that shows the issue. Below, I simulate data from a binomial model, index the data to 8 plots and 2 blocks, and then fit a multi-level logistic regression to recover the success probabilities (b1 and b2) in the code below. Scroll to the bottom to see the summaries of the two fits.

My question is:

- Why are the posteriors from these two fits different? I am using the same data, a single model specification, and setting the random number generator before each. Why does the mean of the posteriors differ, and why are the Rhat values so different?

# -------------------------------------------------------------------

# Loading required packages

# -------------------------------------------------------------------

library(rjags)

library(R2jags)

library(MCMCvis)

Package version information:

jags.version()

[1] ‘4.3.0’

R2jags_0.5-7 MCMCvis_0.13.5 rjags_4-10

# -------------------------------------------------------------------

# Simulate data

# -------------------------------------------------------------------

set.seed(10)

N.plots = 8

N.blocks = 2

trials=400

n = rep(100,trials)

N=length(n)

plotReps=N/N.plots

blockReps=N/N.blocks

# Block 1

b1<-rep(c(.25,.75,.9,.1),each=plotReps)-.05

# Block 2

b2<-rep(c(.25,.75,.9,.1),each=plotReps)+.05

y = rbinom(trials, 100, p = c(b1,b2))

# vectors indexing plots and blocks

plot = rep(1:8,each=plotReps)

block = rep(1:2,each=blockReps)

# pass data to list for JAGS

data = list(

y = y,

n = n,

N = length(n),

plot = plot,

block= block,

N.plots = N.plots,

N.blocks = N.blocks

)

# -------------------------------------------------------------------

# Code for JAGS model

# -------------------------------------------------------------------

modelString <- "model {

## Priors

# hyperpriors

mu.alpha ~ dnorm(0, 0.0001)

sigma.plot ~ dunif(0,100)

tau.plot <- 1 / sigma.plot^2

sigma.block ~ dunif(0,100)

tau.block <- 1 / sigma.block^2

# priors

for(i in 1:N.plots){

eps.plot[i]~dnorm(0,tau.plot)

}

for(i in 1:N.blocks){

eps.block[i]~dnorm(0,tau.block)

}

# Likelihood

for(i in 1:N){

logit(p[i]) <- mu.alpha + eps.plot[plot[i]] + eps.block[block[i]]

y[i] ~ dbin(p[i], n[i])

}

}"

# -------------------------------------------------------------------

# Initial values

# -------------------------------------------------------------------

# set inits for rjags

inits = list(list(mu.alpha = 0,sigma.plot=2,sigma.block=2),

list(mu.alpha = 0,sigma.plot=2,sigma.block=2),

list(mu.alpha = 0,sigma.plot=2,sigma.block=2))

# set inits function for R2jags

initsFun<-function(){list(

mu.alpha=0,

sigma.plot=2,

sigma.block=2

)}

# -------------------------------------------------------------------

# Set JAGS parameters and random seed

# -------------------------------------------------------------------

# scalars that specify the

# number of iterations in the chain for adaptation

# number of iterations for burn-in

# number of samples in the final chain

n.adapt = 500

n.update = 5000

n.iterations = 1000

n.thin = 1

parsToMonitor = c("mu.alpha","sigma.plot","sigma.block","eps.plot","eps.block")

# -------------------------------------------------------------------

# Call to JAGS via rjags

# -------------------------------------------------------------------

set.seed(2)

# tuning (n.adapt)

jm = jags.model(textConnection(modelString), data = data, inits = inits,

n.chains = length(inits), n.adapt = n.adapt)

# burn-in (n.update)

update(jm, n.iterations = n.update)

# chain (n.iter)

samples.rjags = coda.samples(jm, variable.names = c(parsToMonitor), n.iter = n.iterations, thin = n.thin)

# -------------------------------------------------------------------

# Call to JAGS via R2jags

# -------------------------------------------------------------------

set.seed(2)

samples.R2jags <-jags(data=data,inits=initsFun,parameters.to.save=parsToMonitor,model.file=textConnection(modelString),

n.thin=n.thin,n.chains=length(inits),n.burnin=n.adapt,n.iter=n.iterations,DIC=T)

# -------------------------------------------------------------------

# Summarize posteriors using MCMCvis

# -------------------------------------------------------------------

sum.rjags <- MCMCvis::MCMCsummary(samples.rjags,params=c("mu.alpha","eps.plot","sigma.plot","sigma.block","eps.block"))

sum.rjags

sum.R2jags2 <- MCMCvis::MCMCsummary(samples.R2jags,params=c("mu.alpha","eps.plot","sigma.plot","sigma.block","eps.block"))

sum.R2jags2

Here is the output from an rjags fit:

mean sd 2.5% 50% 97.5% Rhat n.eff

mu.alpha 0.07858079 21.2186737 -48.99286669 -0.04046538 45.16440893 1.11 4063

eps.plot[1] -1.77570813 0.8605892 -3.45736942 -1.77762035 -0.02258692 1.00 2857

eps.plot[2] -0.37359614 0.8614370 -2.07913650 -0.37581522 1.36611635 1.00 2846

eps.plot[3] 0.43387001 0.8612820 -1.24273657 0.42332033 2.20253810 1.00 2833

eps.plot[4] 1.31279883 0.8615840 -0.38750596 1.31179143 3.06307745 1.00 2673

eps.plot[5] -1.34317034 0.8749558 -3.06843578 -1.34747145 0.44451006 1.00 2664

eps.plot[6] -0.40064738 0.8749104 -2.13233876 -0.41530587 1.37910977 1.00 2677

eps.plot[7] 0.36515253 0.8738092 -1.35364716 0.35784379 2.15597251 1.00 2692

eps.plot[8] 1.71826293 0.8765952 -0.01057452 1.70627507 3.50314147 1.00 2650

sigma.plot 1.67540914 0.6244529 0.88895789 1.53080631 3.27418094 1.01 741

sigma.block 19.54287007 26.1348353 0.14556791 6.68959552 93.21927035 1.22 94

eps.block[1] -0.55924545 21.2126905 -46.34099332 -0.24261169 48.81435107 1.11 4009

eps.block[2] 0.35658731 21.2177540 -44.65998407 0.25801739 49.31921639 1.11 4457

and here is the output from an R2jags fit:

mean sd 2.5% 50% 97.5% Rhat n.eff

mu.alpha -0.09358847 19.9972601 -45.81215297 -0.03905447 47.32288503 1.04 1785

eps.plot[1] -1.70448172 0.8954054 -3.41749845 -1.70817566 0.08187877 1.00 1141

eps.plot[2] -0.30070570 0.8940527 -2.01982416 -0.30458798 1.46954632 1.00 1125

eps.plot[3] 0.50295713 0.8932038 -1.20985348 0.50458106 2.29271214 1.01 1156

eps.plot[4] 1.37862742 0.8950657 -0.34965321 1.37627777 3.19545411 1.01 1142

eps.plot[5] -1.40421696 0.8496819 -3.10743244 -1.41880218 0.25843323 1.01 1400

eps.plot[6] -0.45810643 0.8504694 -2.16755579 -0.47087931 1.20827684 1.01 1406

eps.plot[7] 0.30319019 0.8492508 -1.39045509 0.28668886 1.96325582 1.01 1500

eps.plot[8] 1.65474420 0.8500635 -0.03632306 1.63399429 3.29585024 1.01 1395

sigma.plot 1.66375532 0.6681285 0.88231891 1.49564854 3.45544415 1.04 304

sigma.block 20.64694333 23.0418085 0.41071589 11.10308188 85.56459886 1.09 78

eps.block[1] -0.45810120 19.9981027 -46.85060339 -0.33090743 46.27709625 1.04 1795

eps.block[2] 0.58896195 19.9552211 -46.39310677 0.28183123 46.57874408 1.04 1769

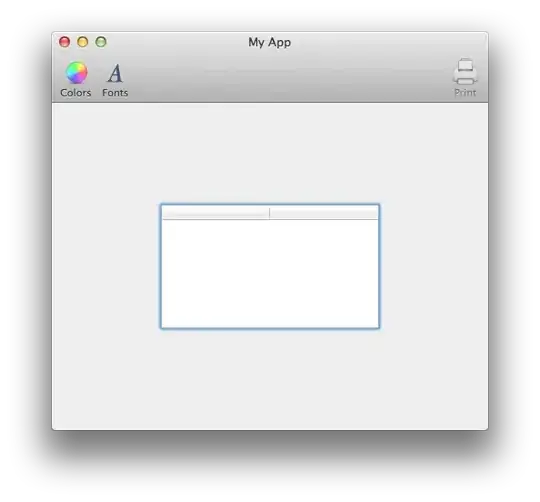

Here are trace plots for mu.alpha from the 2 fits. First, from the rjags fit:

Second, from the R2jags fit: