You may use the following solution (although it doesn't separate the specimen very cleanly):

- Use `cv2.floodFill` to replace the colored background with a black background.
- Use the "open" morphological operation (`cv2.MORPH_OPEN`) to remove small unwanted artifacts left over after the `floodFill`.
- Threshold the result, and find the largest contour.
- Smooth the contour using the code from the following post: https://agniva.me/scipy/2016/10/25/contour-smoothing.html
- Build a mask from the smoothed contour, and apply the mask.
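The artifact-removal step depends on which morphological operation is used: opening (erosion, then dilation) removes small bright specks, while closing (dilation, then erosion) fills small dark holes. The sketch below shows the difference on a made-up binary image. It uses `scipy.ndimage.binary_opening` / `binary_closing` with a 3x3 square element as a stand-in for `cv2.morphologyEx` with the 10x10 ellipse; the image and sizes are assumptions for illustration only.

```python
import numpy as np
from scipy import ndimage

# Toy binary image (True = "not background" after the fill):
img = np.zeros((16, 16), dtype=bool)
img[4:12, 4:12] = True   # the specimen
img[7, 7] = False        # a 1-pixel dark hole inside the specimen
img[1, 14] = True        # a 1-pixel bright speck the fill left behind

se = np.ones((3, 3), dtype=bool)  # 3x3 square structuring element (assumption)

opened = ndimage.binary_opening(img, structure=se)  # erosion, then dilation
closed = ndimage.binary_closing(img, structure=se)  # dilation, then erosion

print("speck after opening:", bool(opened[1, 14]))  # False: speck removed
print("hole after opening: ", bool(opened[7, 7]))   # False: hole kept
print("speck after closing:", bool(closed[1, 14]))  # True: speck kept
print("hole after closing: ", bool(closed[7, 7]))   # True: hole filled
```

Since the leftovers of the fill are non-black specks on a black background, opening is the operation that targets them.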

Here is the code:

```python
import numpy as np
import cv2
from scipy.interpolate import splprep, splev

orig_im = cv2.imread("specimen1.jpg")
im = orig_im.copy()
h, w = im.shape[0], im.shape[1]

# Seed points for floodFill (use two points at each corner for improving robustness)
seedPoints = ((0, 0), (10, 10), (w-1, 0), (w-1, 10), (0, h-1), (10, h-1), (w-1, h-1), (w-10, h-10))

# Fill background with black color
for seed in seedPoints:
    cv2.floodFill(im, None, seedPoint=seed, newVal=(0, 0, 0), loDiff=(5, 5, 5), upDiff=(5, 5, 5))

# Use "open" morphological operation (erosion, then dilation) to remove small leftover artifacts
im = cv2.morphologyEx(im, cv2.MORPH_OPEN, cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (10, 10)))

# Convert to grayscale (cv2.imread returns BGR), and then to a binary image.
gray = cv2.cvtColor(im, cv2.COLOR_BGR2GRAY)
ret, thresh_gray = cv2.threshold(gray, 5, 255, cv2.THRESH_BINARY)

# Find contours.
# Indexing with [-2] works in OpenCV 3.x (3 return values) and 4.x (2 return values).
contours = cv2.findContours(thresh_gray, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)[-2]
c = max(contours, key=cv2.contourArea)  # Get the largest contour

# Smooth contour
# https://agniva.me/scipy/2016/10/25/contour-smoothing.html
x, y = c.T
x = x.tolist()[0]
y = y.tolist()[0]
tck, u = splprep([x, y], u=None, s=1.0, per=1)
u_new = np.linspace(u.min(), u.max(), 20)
x_new, y_new = splev(u_new, tck, der=0)
res_array = [[[int(i[0]), int(i[1])]] for i in zip(x_new, y_new)]
smoothened = np.asarray(res_array, dtype=np.int32)

# For testing: draw the smoothed contour on a copy of the original image
test_im = orig_im.copy()
cv2.drawContours(test_im, [smoothened], 0, (0, 255, 0), 1)

# Build a mask (filled smoothed contour)
mask = np.zeros_like(thresh_gray)
cv2.drawContours(mask, [smoothened], -1, 255, -1)

# Apply mask: keep original pixels inside the contour, black elsewhere
res = np.zeros_like(orig_im)
res[mask > 0] = orig_im[mask > 0]

# Show images for testing
cv2.imshow('test_im', test_im)
cv2.imshow('res', res)
cv2.imshow('mask', mask)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
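The smoothing step can be tried in isolation on a synthetic contour. This sketch uses the same `splprep`/`splev` calls and parameters as the code above (`s=1.0`, `per=1`, 20 samples) on a pixel-quantised circle that stands in for the specimen contour (the circle is an assumption made for illustration). One deliberate difference: it appends the first point to the end, because with `per=1` `splprep` treats the last data point as a copy of the first, so an open contour would silently lose its last point.

```python
import numpy as np
from scipy.interpolate import splprep, splev

# Synthetic "jagged" contour: a circle of radius 50 centred at (100, 100),
# quantised to integer pixels and stored in the (N, 1, 2) int32 layout
# that cv2.findContours returns. (Stand-in for the real specimen contour.)
t = np.linspace(0, 2 * np.pi, 150, endpoint=False)
pts = np.stack([100 + 50 * np.cos(t), 100 + 50 * np.sin(t)], axis=1)
c = np.round(pts).astype(np.int32).reshape(-1, 1, 2)

# Split into x and y coordinate arrays (same result as c.T + tolist()[0]).
x = c[:, 0, 0].astype(float)
y = c[:, 0, 1].astype(float)

# Close the curve explicitly: with per=1 the last point is treated as the first.
x = np.append(x, x[0])
y = np.append(y, y[0])

# Fit a periodic parametric spline (same s and per as in the answer).
tck, u = splprep([x, y], u=None, s=1.0, per=1)

# Resample the spline at 20 evenly spaced parameter values.
u_new = np.linspace(u.min(), u.max(), 20)
x_new, y_new = splev(u_new, tck, der=0)

# Back to the integer (N, 1, 2) layout expected by cv2.drawContours.
smoothened = np.stack([x_new, y_new], axis=1).astype(np.int32).reshape(-1, 1, 2)

print(c.shape, "->", smoothened.shape)  # (150, 1, 2) -> (20, 1, 2)
```

With such a small `s` the spline stays close to the input points, so most of the visible smoothing likely comes from drawing only the 20 resampled points as a polygon; raising `s` or the number of samples trades smoothness against fidelity to the original outline.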

Remark:

I don't think the solution is very robust.

You may need an iterative approach (for example, gradually increasing the `loDiff` and `upDiff` parameters to find the best values for a given image).

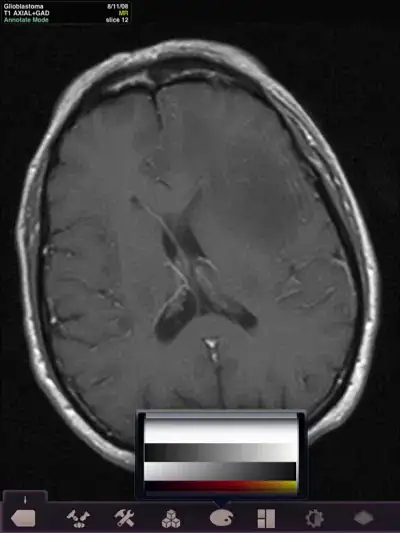

Results:

First specimen:

test_im:

mask:

res:

Second specimen:

test_im:

mask:

res:

Third specimen:

res: