In my Android app, I am capturing a screenshot programmatically from a background service. I obtain it as a Bitmap.

Next, I obtain the co-ordinates of a region of interest (ROI) with the following Android framework API:

```java
Rect ROI = new Rect();
viewNode.getBoundsInScreen(ROI);
```

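Here, getBoundsInScreen() is roughly the Android counterpart of the JavaScript function getBoundingClientRect(). The difference is that it reports bounds relative to the screen rather than to the browser viewport.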

A Rect in Android has the following properties:

```java
rect.top
rect.left
rect.right
rect.bottom
rect.height()
rect.width()
rect.centerX()      /* integer: (left + right) >> 1, i.e. rounded down */
rect.centerY()
rect.exactCenterX() /* exact value as a float */
rect.exactCenterY()
```
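To make the relationship between these members concrete, here is a small plain-Java model of them. It is a sketch that runs outside Android, and the class name `RectModel` is hypothetical. It mirrors the arithmetic used by `android.graphics.Rect`: `width()` is `right - left`, `centerX()` is `(left + right) >> 1`, and `exactCenterX()` is `(left + right) * 0.5f`.

```java
// Hypothetical plain-Java model of the android.graphics.Rect accessors,
// so the arithmetic can be run and inspected outside Android.
final class RectModel {
    final int left, top, right, bottom;

    RectModel(int left, int top, int right, int bottom) {
        this.left = left;
        this.top = top;
        this.right = right;
        this.bottom = bottom;
    }

    // Because width() = right - left, right and bottom act as exclusive edges.
    int width()  { return right - left; }
    int height() { return bottom - top; }

    // Integer center via arithmetic shift: rounded down (toward negative infinity).
    int centerX() { return (left + right) >> 1; }
    int centerY() { return (top + bottom) >> 1; }

    // Exact center as a float.
    float exactCenterX() { return (left + right) * 0.5f; }
    float exactCenterY() { return (top + bottom) * 0.5f; }

    public static void main(String[] args) {
        RectModel r = new RectModel(10, 20, 15, 27);
        System.out.println(r.width() + " x " + r.height());             // 5 x 7
        System.out.println(r.centerX() + ", " + r.centerY());           // 12, 23
        System.out.println(r.exactCenterX() + ", " + r.exactCenterY()); // 12.5, 23.5
    }
}
```

For an odd width such as 5, `centerX()` drops the half pixel, while `exactCenterX()` keeps it.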

See also: "What does top, left, right and bottom mean in Android Rect object".

An OpenCV Rect, by contrast, has the following properties:

```java
rect.width
rect.height
rect.x /* x coordinate of the top-left corner */
rect.y /* y coordinate of the top-left corner */
```

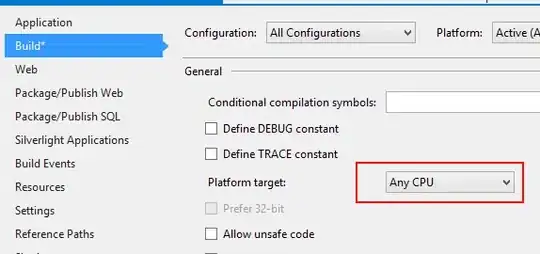

Now before we can perform any OpenCV-related operations, we need to transform the Android Rect to an OpenCV Rect.
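The two representations map onto each other with simple arithmetic. The sketch below is plain Java with a hypothetical class name (`RectConversion`). It uses `int[]` in place of the real `Rect` types so that it runs without Android or OpenCV. Going from Android to OpenCV, the top-left corner is `(left, top)`. Going back, `right = x + width` and `bottom = y + height`, which is the same exclusive corner that OpenCV's `Rect.br()` returns.

```java
import java.util.Arrays;

// Hypothetical helpers showing how the two rectangle representations correspond.
// Android: (left, top, right, bottom); OpenCV: (x, y, width, height).
final class RectConversion {
    private RectConversion() {}

    // Android -> OpenCV: corner is (left, top); size is the difference of the edges.
    static int[] toXywh(int left, int top, int right, int bottom) {
        return new int[] { left, top, right - left, bottom - top };
    }

    // OpenCV -> Android: the far edges are x + width and y + height (exclusive).
    static int[] toLtrb(int x, int y, int width, int height) {
        return new int[] { x, y, x + width, y + height };
    }

    public static void main(String[] args) {
        int[] xywh = toXywh(100, 250, 400, 310);
        System.out.println(Arrays.toString(xywh)); // [100, 250, 300, 60]
        int[] ltrb = toLtrb(xywh[0], xywh[1], xywh[2], xywh[3]);
        System.out.println(Arrays.toString(ltrb)); // [100, 250, 400, 310]
    }
}
```

The round trip returns the original edges, so no information is lost in either direction.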

See also: "Understanding how actually drawRect or drawing coordinates work in Android".

There are two ways to convert an Android Rect to an OpenCV Rect (as suggested by Karl Phillip in his answer). Both yield the same values and therefore the same result:

```java
/* Compute the top-left corner using the center point of the rectangle. */
int x = androidRect.centerX() - (androidRect.width() / 2);
int y = androidRect.centerY() - (androidRect.height() / 2);

// OR simply use the already available member variables:
x = androidRect.left;
y = androidRect.top;

int w = androidRect.width();
int h = androidRect.height();

org.opencv.core.Rect roi = new org.opencv.core.Rect(x, y, w, h);
```
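The claim that both approaches agree can be checked directly. With `width = right - left >= 0`, the sum `left + right` equals `2 * left + width`. Shifting right by one therefore gives `left + width / 2`, and subtracting `width / 2` leaves exactly `left`. The same reasoning applies to `top`. The plain-Java sketch below uses a hypothetical class name and re-implements the `Rect` arithmetic. It brute-forces a range of edges, including negative ones.

```java
// Brute-force check (plain Java, no Android needed) that centerX() - width() / 2
// always equals left, using android.graphics.Rect's integer arithmetic:
// centerX() = (left + right) >> 1 and width() = right - left.
final class CornerCheck {
    static int cornerFromCenter(int left, int right) {
        int center = (left + right) >> 1; // same as Rect.centerX()
        int width = right - left;         // same as Rect.width()
        return center - width / 2;
    }

    static int countMismatches() {
        int mismatches = 0;
        for (int left = -50; left <= 50; left++) {
            // Only non-negative widths, i.e. a correctly sorted rectangle.
            for (int right = left; right <= left + 50; right++) {
                if (cornerFromCenter(left, right) != left) {
                    mismatches++;
                }
            }
        }
        return mismatches;
    }

    public static void main(String[] args) {
        System.out.println("mismatches: " + countMismatches()); // mismatches: 0
    }
}
```

Because the two formulas are identical for any correctly sorted Rect, the conversion step on its own cannot introduce a vertical offset.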

Now one of the OpenCV operations I am performing is blurring the ROI within the screenshot:

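```java
import android.graphics.Bitmap;
import org.opencv.android.Utils;
import org.opencv.core.Mat;
import org.opencv.core.Size;
import org.opencv.imgproc.Imgproc;

// Copy the screenshot into a mutable ARGB_8888 bitmap, then convert it to an RGBA Mat.
Mat originalMat = new Mat();
Bitmap configuredBitmap32 = originalBitmap.copy(Bitmap.Config.ARGB_8888, true);
Utils.bitmapToMat(configuredBitmap32, originalMat);

// Blur a copy of the ROI, then write it back into the same ROI of the full image.
Mat ROIMat = originalMat.submat(roi).clone();
Imgproc.GaussianBlur(ROIMat, ROIMat, new Size(0, 0), 5, 5); // kernel size derived from sigma = 5
ROIMat.copyTo(originalMat.submat(roi));

// Convert the modified Mat back into a Bitmap of the same dimensions.
Bitmap blurredBitmap = Bitmap.createBitmap(originalMat.cols(), originalMat.rows(), Bitmap.Config.ARGB_8888);
Utils.matToBitmap(originalMat, blurredBitmap);
```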
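In this code, `submat(roi)` works only when the ROI lies entirely inside `originalMat`. OpenCV rejects an out-of-range ROI with an exception. If `getBoundsInScreen()` can report bounds that extend past the captured bitmap (for example, for a partly off-screen view), one option is to clip the rectangle before building the OpenCV `Rect`. The sketch below is plain Java. The `RoiClamp.clamp` name and its `int[]` return shape are hypothetical, and the clipped values would then be passed to `new org.opencv.core.Rect(x, y, w, h)`. It only guards against out-of-bounds ROIs and does not by itself address the offset described next.

```java
import java.util.Arrays;

// Hypothetical helper: intersect an (x, y, width, height) ROI with an image of
// size cols x rows. Returns null when no part of the ROI lies inside the image.
final class RoiClamp {
    private RoiClamp() {}

    static int[] clamp(int x, int y, int width, int height, int cols, int rows) {
        int left = Math.max(0, x);
        int top = Math.max(0, y);
        int right = Math.min(cols, x + width);   // exclusive edge
        int bottom = Math.min(rows, y + height); // exclusive edge
        if (right <= left || bottom <= top) {
            return null; // ROI is entirely outside the image
        }
        return new int[] { left, top, right - left, bottom - top };
    }

    public static void main(String[] args) {
        // A 1080 x 1920 screenshot with an ROI that runs past the bottom edge.
        int[] r = clamp(40, 1880, 1000, 100, 1080, 1920);
        System.out.println(Arrays.toString(r)); // [40, 1880, 1000, 40]
    }
}
```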

Running this blurring code brings us very close to the desired result, but not quite: the area just BENEATH the targeted region is blurred instead.

For example, if the targeted region of interest is a password field, the above code produces the following results:

On the left is the Microsoft Live ROI; on the right, the Pinterest ROI:

As can be seen, the area just below the ROI gets blurred.

So, finally, my question is: why isn't the exact region of interest blurred?

- The co-ordinates obtained through the Android API `getBoundsInScreen()` appear to be correct.
- Converting an Android `Rect` to an OpenCV `Rect` also appears to be correct. Or is it?
- The code for blurring a region of interest also appears to be correct. Is there another way to do the same thing?

N.B.: These are the actual full-screen screenshots as I get them. They have been scaled down by 50% to fit in this post, but otherwise they are exactly as captured on the Android device.