Q : "Why is running 5 to 8 in parallel at a time worse than running 4 at a time?"

Well, there are several reasons and we will start from a static, easiest observable one :

Since the silicon design ( for which they used a few hardware tricks ) does not scale beyond the 4.

So the last Amdahl's Law explained & promoted speedup from just +1 upscaled count of processors is 4 and any next +1 will not upscale the performance in that same way observed in the { 2, 3, 4 }-case :

This lstopo CPU-topology map helps to start to decode WHY ( here for 4-cores, but the logic is the same as for your 8-core silicon - run lstopo on your device to see more details in vivo ) :

┌───────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

│ Machine (31876MB) │

│ │

│ ┌────────────────────────────────────────────────────────────┐ ┌───────────────────────────┐ │

│ │ Package P#0 │ ├┤╶─┬─────┼┤╶───────┤ PCI 10ae:1F44 │ │

│ │ │ │ │ │ │

│ │ ┌────────────────────────────────────────────────────────┐ │ │ │ ┌────────────┐ ┌───────┐ │ │

│ │ │ L3 (8192KB) │ │ │ │ │ renderD128 │ │ card0 │ │ │

│ │ └────────────────────────────────────────────────────────┘ │ │ │ └────────────┘ └───────┘ │ │

│ │ │ │ │ │ │

│ │ ┌──────────────────────────┐ ┌──────────────────────────┐ │ │ │ ┌────────────┐ │ │

│ │ │ L2 (2048KB) │ │ L2 (2048KB) │ │ │ │ │ controlD64 │ │ │

│ │ └──────────────────────────┘ └──────────────────────────┘ │ │ │ └────────────┘ │ │

│ │ │ │ └───────────────────────────┘ │

│ │ ┌──────────────────────────┐ ┌──────────────────────────┐ │ │ │

│ │ │ L1i (64KB) │ │ L1i (64KB) │ │ │ ┌───────────────┐ │

│ │ └──────────────────────────┘ └──────────────────────────┘ │ ├─────┼┤╶───────┤ PCI 10bc:8268 │ │

│ │ │ │ │ │ │

│ │ ┌────────────┐┌────────────┐ ┌────────────┐┌────────────┐ │ │ │ ┌────────┐ │ │

│ │ │ L1d (16KB) ││ L1d (16KB) │ │ L1d (16KB) ││ L1d (16KB) │ │ │ │ │ enp2s0 │ │ │

│ │ └────────────┘└────────────┘ └────────────┘└────────────┘ │ │ │ └────────┘ │ │

│ │ │ │ └───────────────┘ │

│ │ ┌────────────┐┌────────────┐ ┌────────────┐┌────────────┐ │ │ │

│ │ │ Core P#0 ││ Core P#1 │ │ Core P#2 ││ Core P#3 │ │ │ ┌──────────────────┐ │

│ │ │ ││ │ │ ││ │ │ ├─────┤ PCI 1002:4790 │ │

│ │ │ ┌────────┐ ││ ┌────────┐ │ │ ┌────────┐ ││ ┌────────┐ │ │ │ │ │ │

│ │ │ │ PU P#0 │ ││ │ PU P#1 │ │ │ │ PU P#2 │ ││ │ PU P#3 │ │ │ │ │ ┌─────┐ ┌─────┐ │ │

│ │ │ └────────┘ ││ └────────┘ │ │ └────────┘ ││ └────────┘ │ │ │ │ │ sr0 │ │ sda │ │ │

│ │ └────────────┘└────────────┘ └────────────┘└────────────┘ │ │ │ └─────┘ └─────┘ │ │

│ └────────────────────────────────────────────────────────────┘ │ └──────────────────┘ │

│ │ │

│ │ ┌───────────────┐ │

│ └─────┤ PCI 1002:479c │ │

│ └───────────────┘ │

└───────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

A closer look, like the one from a call to hwloc-tool: lstopo-no-graphics -.ascii, shows where mutual processing independence ends - here at a level of shared L1-instruction-cache ( the L3 one is shared either, yet at the top of the hierarchy and at such a size that bothers for large problems solvers only, not our case )

Next comes a worse observable reason WHY even worse on 8-processes :

Q : "Why does running 8 in parallel not twice as fast as running 4 in parallel i.e. why is it not ~3.5s?"

Because of thermal management.

The more work is loaded onto CPU-cores, the more heat is produced from driving electrons on ~3.5+ GHz through the silicon maze. Thermal constraints are those, that prevent any further performance boost in CPU computing powers, simply because of the Laws of physics, as we know them, do not permit to grow beyond some material-defined limits.

So what comes next?

The CPU-design has circumvented not the physics ( that is impossible ), but us, the users - by promising us a CPU chip having ~3.5+ GHz ( but in fact, the CPU can use this clock-rate only for small amounts of time - until the dissipated heat does not get the silicon close to the thermal-limits - and then, the CPU will decide to either reduce its own clock-rate as an overheating defensive step ( this reduces the performance, doesn't it? ) or some CPU-micro-architectures may hop ( move a flow of processing ) onto another, free, thus cooler, CPU-core ( which keeps a promise of higher clock-rate there ( at least for some small amount of time ) yet also reduces the performance, as the hop does not occur in zero-time and does not happen at zero-costs ( cache-losses, re-fetches etc )

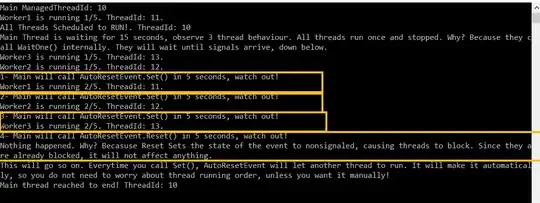

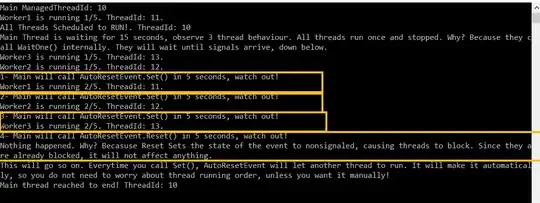

This picture shows a snapshot of the case of core-hopping - cores 0-19 got too hot and are under the Thermal Throttling cap, while cores 20-39 can ( at least for now ) run at full speed:

The Result?

Both the thermal-constraints ( diving CPU into a pool of liquid nitrogen was demonstrated for a "popular" magazine show, yet is not a reasonable option for any sustainable computing, as the mechanical stress from going from deep frozen state into a 6+ GHz clock-rate steam-forming super-heater cracks the body of the CPU and will result in CPU-death from cracks and mechanical fatigue in but a few workload episodes - so a no-go zone, due to negative ROI for any serious project ).

Good cooling and right-sizing of the pool-of-workers, based on in-vivo pre-testing is the only sure bet here.

Other architecture :