```python
import numpy as np
import matplotlib.pyplot as plt


def show3D(searcher, grid_param_1, grid_param_2, name_param_1, name_param_2, rot=0):
    # Mean and standard deviation of the CV score for every parameter combination
    scores_mean = searcher.cv_results_['mean_test_score']
    scores_mean = np.array(scores_mean).reshape(len(grid_param_2), len(grid_param_1))

    scores_sd = searcher.cv_results_['std_test_score']
    scores_sd = np.array(scores_sd).reshape(len(grid_param_2), len(grid_param_1))

    print('Best params = {}'.format(searcher.best_params_))
    print('Best score = {}'.format(scores_mean.max()))

    _, ax = plt.subplots(1, 1)

    # Param 1 is the X-axis, param 2 is represented as a different curve (color line)
    for idx, val in enumerate(grid_param_2):
        ax.plot(grid_param_1, scores_mean[idx, :], '-o', label=name_param_2 + ': ' + str(val))

    ax.tick_params(axis='x', rotation=rot)
    ax.set_title('Grid Search Scores')
    ax.set_xlabel(name_param_1)
    ax.set_ylabel('CV score')
    ax.legend(loc='best')
    ax.grid(True)
```

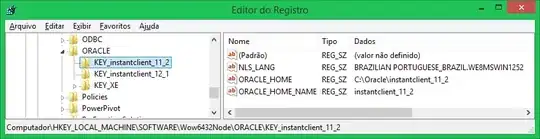
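The `reshape` in `show3D` only lines up with the plot if `cv_results_` lists the candidates in the order the function assumes. As far as I understand scikit-learn's `ParameterGrid` (which `GridSearchCV` uses to enumerate candidates), it sorts the grid keys alphabetically and varies the last key fastest. A minimal check, using a small grid with the same keys as the search below (the reduced value lists are only for illustration), prints that order without fitting anything:

```python
from sklearn.model_selection import ParameterGrid

# Same keys as the real search, with fewer 'loss' values to keep the output short
grid = {'loss': ['hinge', 'huber'], 'penalty': ['l2', 'l1', 'elasticnet']}

# Print the candidates in the order GridSearchCV would evaluate them
for params in ParameterGrid(grid):
    print(params)
```

If that ordering holds, consecutive entries of `mean_test_score` share the same `loss`, so the scores would form a `(len(loss), len(penalty))` matrix rather than the `(len(penalty), len(loss))` shape used in `show3D`.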

```python
from sklearn.linear_model import SGDClassifier
from sklearn.model_selection import GridSearchCV

# Note: scikit-learn 1.1 renamed loss='log' to 'log_loss'; newer versions reject 'log'
metrics = ['hinge', 'log', 'modified_huber', 'perceptron', 'huber', 'epsilon_insensitive']
penalty = ['l2', 'l1', 'elasticnet']

searcher = GridSearchCV(SGDClassifier(max_iter=10000),
                        {'loss': metrics, 'penalty': penalty},
                        scoring='roc_auc')
searcher.fit(train_x, train_y)

show3D(searcher, metrics, penalty, 'loss', 'penalty', 80)

searcher.cv_results_['mean_test_score']
```

The graph shows that the optimal combination is huber + l2, but `best_params_` gives a different result. How can this be? The plotting code seems right; I took it from here: How to graph grid scores from GridSearchCV?
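Reshaping `mean_test_score` silently depends on the order in which `cv_results_` lists the candidates. A minimal sketch of an order-independent alternative is below; `score_matrix` is a hypothetical helper (not part of scikit-learn) that places each score using the candidate's own entry in `cv_results_['params']`, producing the same `(len(grid_param_2), len(grid_param_1))` layout that `show3D` plots row by row.

```python
import numpy as np


def score_matrix(cv_results, name_param_1, grid_param_1, name_param_2, grid_param_2,
                 key='mean_test_score'):
    """Hypothetical helper: arrange cv_results[key] as a
    (len(grid_param_2), len(grid_param_1)) array.

    Rows follow grid_param_2 and columns follow grid_param_1. Each score is
    placed according to its own entry in cv_results['params'], so the result
    does not depend on the order in which GridSearchCV lists candidates.
    """
    values_1 = list(grid_param_1)
    values_2 = list(grid_param_2)
    # Start with NaN so any combination missing from cv_results stays visible
    scores = np.full((len(values_2), len(values_1)), np.nan)
    for params, score in zip(cv_results['params'], cv_results[key]):
        row = values_2.index(params[name_param_2])
        col = values_1.index(params[name_param_1])
        scores[row, col] = score
    return scores
```

Under these assumptions, the two `reshape` lines in `show3D` could become `score_matrix(searcher.cv_results_, name_param_1, grid_param_1, name_param_2, grid_param_2)` and the same call with `key='std_test_score'`.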