I figured out a way that is less dependent on a threshold.

Here is a summary of the method:

- Apply the Sobel operator to the image to get I_x and I_y (the derivatives of the greyscale image along the x and y axes).

- Sum I_x along the y axis (one value per column) and I_y along the x axis (one value per row).

- For each of the two profiles, find the maximum and the minimum. Their positions should be the margins.

- Perform validity checks to decide whether to actually use the indices found in the previous step.

- Crop the image using those indices.

If we calculate the derivatives of the greyscale image in the horizontal and vertical directions, we should expect a spike where the margin meets the image. I used the Sobel operator to approximate these derivatives and got the following images:

output for sobel operator for the image

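Note how bright the transition between the white part of the flag and the black border is in the I_y output: it is the steepest change in greyscale.

Next, I aggregated each column of I_x and each row of I_y. (The code at the end of this answer uses the average rather than the sum; the two differ only by a constant factor, so the extrema are in the same places.)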

average per column of I_x and per row of I_y

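These are sums of a signed directional derivative, so the spikes are signed too. This is actually helpful: going from a black margin into the image gives a positive spike, and going from the image back into a black margin gives a negative one, so we can just take the maximum as the left/top margin and the minimum as the right/bottom one.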
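As a rough illustration of why the sign is useful, here is a NumPy-only toy example. It replaces the Sobel operator with plain forward differences (`np.diff`), which is a simplification for the sketch and not what the code in this answer uses; the synthetic image and all variable names are made up.

```python
import numpy as np

# Synthetic 10x12 greyscale image: a bright rectangle on a black background.
img = np.zeros((10, 12), dtype=np.float64)
img[3:7, 4:9] = 200.0  # rows 3..6 and columns 4..8 are bright

# Forward differences stand in for the Sobel operator (assumption).
# Entry k is the step from pixel k to pixel k + 1.
i_x = np.diff(img, axis=1)  # horizontal derivative
i_y = np.diff(img, axis=0)  # vertical derivative

col_profile = i_x.sum(axis=0)  # one value per column step
row_profile = i_y.sum(axis=1)  # one value per row step

# Positive spike = dark-to-bright, negative spike = bright-to-dark.
left, right = int(np.argmax(col_profile)), int(np.argmin(col_profile))
top, bottom = int(np.argmax(row_profile)), int(np.argmin(row_profile))
print(left, right, top, bottom)  # 3 8 2 6

# With forward differences the content spans k_max + 1 .. k_min inclusive.
content = img[top + 1:bottom + 1, left + 1:right + 1]
print(content.shape)  # (4, 5)
```

With a real Sobel kernel the edge response is spread over a few pixels, so the exact index offsets will differ from this toy case.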

The final image is this:

cropped image

Note that the original image has a black margin on all sides (even a 1-pixel margin on the right side, which is hard to notice).

What about images with only partial margins?

This is where the method still depends on a threshold, but it is a statistical one. The max/min of the graph is taken as a margin only if it is at least 3 standard deviations away from the mean (why 3? Because of the "empirical rule", and because it works for most of the images I tried it with).
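The check can be sketched in isolation on a 1-D profile. The helper name `significant_extrema` and the test profile below are hypothetical and only meant to show the rule; the profile is a bounded wave with a single strong positive spike, mimicking an image with a margin on one side only.

```python
import numpy as np

def significant_extrema(profile, std_factor=3.0):
    """Return (max_index, min_index). An entry is None when that extreme
    is not more than std_factor standard deviations from the mean."""
    profile = np.asarray(profile, dtype=np.float64)
    mean, std = profile.mean(), profile.std()
    i_max, i_min = int(np.argmax(profile)), int(np.argmin(profile))
    max_idx = i_max if profile[i_max] > mean + std_factor * std else None
    min_idx = i_min if profile[i_min] < mean - std_factor * std else None
    return max_idx, min_idx

# Hypothetical profile: values in [-1, 1] plus one large positive spike.
profile = np.sin(np.arange(200))
profile[5] = 50.0

print(significant_extrema(profile))  # (5, None)
```

Only the positive spike passes the test, so only one side would be cropped; the other side falls back to the image boundary.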

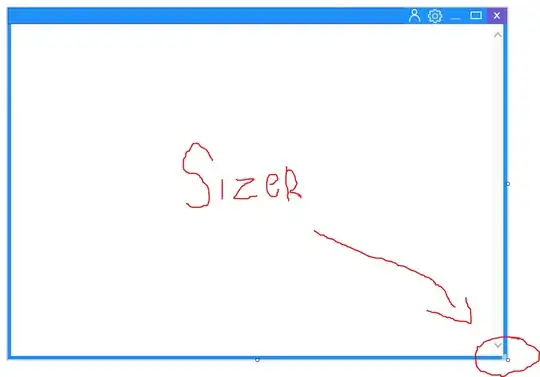

For example, if we take this image:

margins on top only

we get graphs in which only one point is more than 3 standard deviations away from the mean.

average per column of I_x and per row of I_y for partly cropped image

Thus the image is cropped correctly:

cropped image

Note that this method might not work as well when there are "fake margins" that you don't want to be removed.

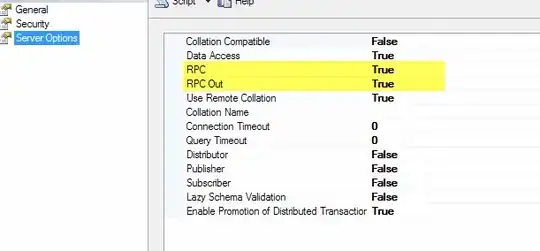

Here is the code I used when analyzing this image:

    import cv2
    import matplotlib.pyplot as plt
    import numpy as np


    def remove_black_border_by_sobel(image, std_factor=3):
        image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

        # Signed derivatives of the greyscale image in x and y
        sobelx = cv2.Sobel(image_gray, cv2.CV_64F, 1, 0, ksize=5)
        sobely = cv2.Sobel(image_gray, cv2.CV_64F, 0, 1, ksize=5)

        fig, ax = plt.subplots(1, 2)
        ax[0].imshow(sobelx, cmap='cool')
        ax[1].imshow(sobely, cmap='cool')
        ax[0].set_title('derivative in x direction')
        ax[1].set_title('derivative in y direction')
        plt.show()
        plt.close()

        # Average I_x per column and I_y per row
        x_values = np.average(sobelx, axis=0)
        y_values = np.average(sobely, axis=1)

        fig, ax = plt.subplots(1, 2)
        ax[0].plot(x_values)
        ax[0].set_title('average I_x')
        ax[1].plot(y_values)
        ax[1].set_title('average I_y')
        ax[0].set_xlabel('x')
        ax[0].set_ylabel('average derivative')
        ax[1].set_xlabel('y')
        plt.show()
        plt.close()

        x_mean, x_std = np.mean(x_values), np.std(x_values)
        x_max_index = np.argmax(x_values)
        x_min_index = np.argmin(x_values)
        y_mean, y_std = np.mean(y_values), np.std(y_values)
        y_max_index = np.argmax(y_values)
        y_min_index = np.argmin(y_values)

        # Right margin: use the minimum only if it is far below the mean
        if x_values[x_min_index] < x_mean - std_factor * x_std:
            x_right = x_min_index
        else:
            x_right = image_gray.shape[1]

        # Left margin: use the maximum only if it is far above the mean
        if x_values[x_max_index] > x_mean + std_factor * x_std:
            x_left = x_max_index
        else:
            x_left = 0

        # Bottom margin: use the minimum only if it is far below the mean
        if y_values[y_min_index] < y_mean - std_factor * y_std:
            y_bottom = y_min_index
        else:
            y_bottom = image_gray.shape[0]

        # Top margin: use the maximum only if it is far above the mean
        if y_values[y_max_index] > y_mean + std_factor * y_std:
            y_top = y_max_index
        else:
            y_top = 0

        print(x_left, x_right, y_top, y_bottom)

        # Validity check: if the margins cross, keep the full extent
        if x_left > x_right:
            x_left, x_right = 0, image_gray.shape[1]
        if y_top > y_bottom:
            y_top, y_bottom = 0, image_gray.shape[0]

        print(x_left, x_right, y_top, y_bottom)

        return image[y_top:y_bottom, x_left:x_right]