I want to detect text on x-ray images. The goal is to extract the oriented bounding boxes as a matrix in which each row is one detected bounding box and contains the coordinates of all four edges, i.e. [x1, x2, y1, y2]. I'm using Python 3 and OpenCV 4.2.0.
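To make the target output concrete, here is a minimal sketch of such a matrix. It assumes that "four edges" means the four corner points of the rotated rectangle, so each row would hold eight numbers, `[x1, y1, x2, y2, x3, y3, x4, y4]`, rather than four. The helper names `rect_to_row` and `rects_to_matrix` are hypothetical; the input is the `((cx, cy), (w, h), angle)` tuple that `cv2.minAreaRect` returns, and the corner order is this sketch's own choice and may differ from the order `cv2.boxPoints` uses.

```python
import math


def rect_to_row(rect):
    """Flatten ((cx, cy), (w, h), angle_deg) into [x1, y1, x2, y2, x3, y3, x4, y4]."""
    (cx, cy), (w, h), angle_deg = rect
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    row = []
    # Corner offsets from the centre before rotation.
    # The order is an arbitrary choice made for this sketch.
    for dx, dy in ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)):
        # Rotate the offset by theta and shift it to the rectangle centre.
        row.append(cx + dx * cos_t - dy * sin_t)
        row.append(cy + dx * sin_t + dy * cos_t)
    return row


def rects_to_matrix(rects):
    """One row of eight coordinates per rotated rectangle."""
    return [rect_to_row(r) for r in rects]
```

With the `minRect` list from the code further down, `np.array(rects_to_matrix(minRect))` would give an N x 8 array. Flattening the result of `cv2.boxPoints` for each rectangle should give the same kind of row without the hand-written trigonometry.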

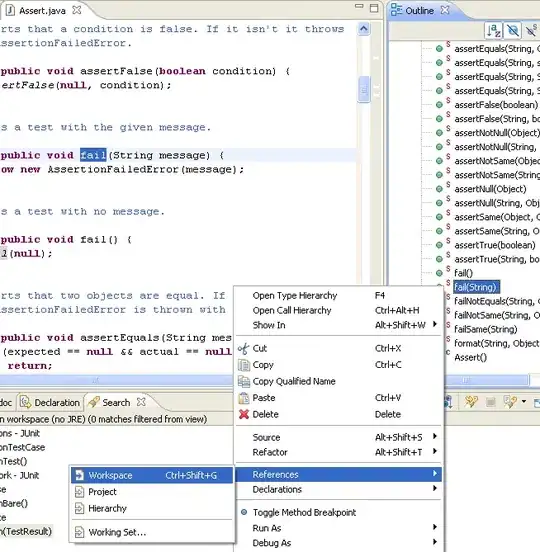

Here is a sample image:

The strings "test word", "a" and "b" should be detected.

I followed this OpenCV tutorial about creating rotated boxes for contours and this Stack Overflow answer about detecting a text area in an image.

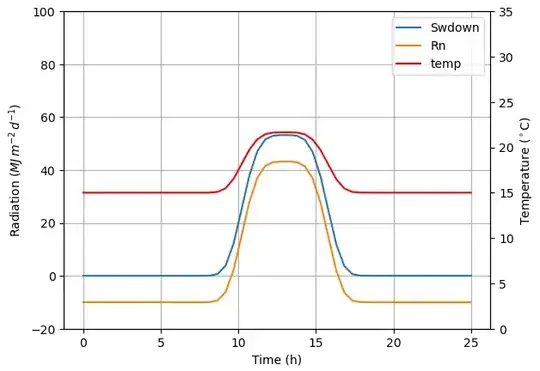

The resulting boundary boxes should look something like this:

I was able to detect the text, but the result included a lot of boxes without text.

Here is what I tried so far:

    import cv2
    import numpy as np

    # file_name: path to the input x-ray image
    img = cv2.imread(file_name)

    # Convert the image to grayscale and threshold it to keep only the bright pixels.
    # cv2.imread returns BGR, so the conversion code is COLOR_BGR2GRAY.
    img2gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    ret, mask = cv2.threshold(img2gray, 180, 255, cv2.THRESH_BINARY)
    image_final = cv2.bitwise_and(img2gray, img2gray, mask=mask)
    ret, new_img = cv2.threshold(image_final, 180, 255, cv2.THRESH_BINARY)  # for black text, use cv2.THRESH_BINARY_INV

    # Dilate so that neighbouring characters merge into one blob, then detect edges.
    kernel = cv2.getStructuringElement(cv2.MORPH_CROSS, (3, 3))
    dilated = cv2.dilate(new_img, kernel, iterations=6)
    canny_output = cv2.Canny(dilated, 100, 100 * 2)
    cv2.imshow('Canny', canny_output)

    # Find the contours and their hierarchy.
    contours, hierarchy = cv2.findContours(canny_output, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

    # Find the rotated rectangle for each contour
    minRect = [None] * len(contours)
    for i, c in enumerate(contours):
        minRect[i] = cv2.minAreaRect(c)

    # Draw contours + rotated rects
    drawing = np.zeros((canny_output.shape[0], canny_output.shape[1], 3), dtype=np.uint8)
    for i, c in enumerate(contours):
        color = (255, 0, 255)
        # contour
        cv2.drawContours(drawing, contours, i, color)
        # rotated rectangle
        box = cv2.boxPoints(minRect[i])
        box = np.intp(box)  # np.intp: integer used for indexing (same as C ssize_t; normally either int32 or int64)
        cv2.drawContours(img, [box], 0, color)

    cv2.imshow('Result', img)
    cv2.waitKey()

Do I need to run the results through OCR to check whether each box actually contains text? What other approaches should I try?
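One cheap pre-filter that might be worth trying before OCR is to discard rotated rectangles by size and aspect ratio. The following is only a sketch under assumptions: `filter_rects` is a hypothetical helper, it takes the `minRect` list from the code above, and the default thresholds (`min_area`, `max_area`, `max_aspect`) are made-up values that would need tuning on real x-ray images. Geometry alone cannot tell text from other bright structures, so this can only reduce the number of candidates, not confirm them.

```python
def filter_rects(rects, min_area=100.0, max_area=50000.0, max_aspect=15.0):
    """Keep rotated rects whose area and aspect ratio fall within plausible bounds.

    Each rect is a ((cx, cy), (w, h), angle) tuple as returned by cv2.minAreaRect.
    All threshold defaults are illustrative assumptions, not tuned values.
    """
    kept = []
    for rect in rects:
        _, (w, h), _ = rect
        # Degenerate rectangles (single points or lines) have zero width or height.
        if w <= 0 or h <= 0:
            continue
        area = w * h
        aspect = max(w, h) / min(w, h)
        if min_area <= area <= max_area and aspect <= max_aspect:
            kept.append(rect)
    return kept
```

For example, `text_candidates = filter_rects(minRect)` would keep only the boxes within the bounds, and those survivors could then be drawn or passed on to OCR.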

PS: I'm quite new to computer vision and not familiar with most concepts yet.