I am trying to write a loop that processes 30 Excel files imported from a folder.

In the code below, I get the paths of the files in the folder and read them into a list. Then I create a separate data frame from each file, because I need to manipulate each one: remove several rows and keep only the columns I need.

Is it possible in R to clean each sheet directly in a loop, without creating individual data frames first? I tried, with no luck at all, so I am sure I am missing something.
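A common pattern for this is to put the cleaning steps into one function and apply it to every element of the list with `lapply()`, instead of copying each sheet into its own variable with `assign()`. Below is a minimal sketch. It assumes that every sheet, after `janitor::clean_names()`, has the item names in its first column, the units in its second column, and `abdul_zubairu` as the last wanted column, as in the code below. `clean_match()`, `make_sheet()`, and the made-up data are hypothetical. Because the first column's name changes from match to match, it is selected and renamed by position rather than by name.

```r
library(dplyr)

# Hypothetical helper: cleans one sheet that has already been through janitor::clean_names().
# skip = number of leading rows to drop, keep = number of rows to keep after that.
clean_match <- function(df, skip = 1, keep = 16) {
  df %>%
    select(1:abdul_zubairu) %>%          # first column up to abdul_zubairu, selected by position
    rename(sport_item = 1, uom = 2) %>%  # first column's name differs per match, so rename by position
    slice((skip + 1):n()) %>%            # drop the first `skip` rows
    slice_head(n = keep)                 # keep the next `keep` rows
}

# Made-up stand-in for one sheet: `n_header` junk rows, then 20 data rows.
make_sheet <- function(first_col, n_header) {
  df <- tibble(
    col1 = c(rep("header", n_header), paste0("item_", 1:20)),
    x2 = c(rep(NA, n_header), rep("min", 20)),
    abdul_zubairu = c(rep(NA, n_header), as.character(1:20)),
    extra = NA
  )
  names(df)[1] <- first_col
  df
}

match_list <- list(
  make_sheet("fortuna_liga_2_kolo_as_tn_ruzomberok_27_7_2019", 1),
  make_sheet("fortuna_liga_senica_as_trencin_1_kolo_20_7_2019", 1)
)

# One call cleans every sheet; no match1, match2, ... variables are created.
cleaned <- lapply(match_list, clean_match, skip = 1)

# With the real files, reading and cleaning could happen in the same pass, e.g.:
# files <- list.files("C:/Users/User/Desktop/astn", pattern = "\\.xlsx$", full.names = TRUE)
# cleaned <- lapply(files, function(f) {
#   clean_match(janitor::clean_names(readxl::read_xlsx(f, sheet = "Overview")))
# })
```

The result is a list of cleaned data frames, one per file, in the same order as the input list.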

PS:

What if some Excel files do not have exactly the same format as the others, and I need to slice off more rows than in the previous files? Can I still use the same loop, or do I need to handle those files as separate tasks?
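One way to keep a single loop when the number of rows to drop differs is to make that number a parameter and pass one value per file, walking the list of sheets and the list of values in parallel with `Map()`. This is a sketch under assumptions: `clean_match()`, the sheet contents, and the `skips` values are hypothetical; 1 and 3 simply mirror `slice(2:n())` and `slice(4:n())` from the code below.

```r
library(dplyr)

# Hypothetical helper: drop `skip` leading rows, then keep `keep` rows.
clean_match <- function(df, skip, keep = 16) {
  df %>%
    select(1:abdul_zubairu) %>%          # first column up to abdul_zubairu, by position
    rename(sport_item = 1, uom = 2) %>%  # rename by position; first column name varies per file
    slice((skip + 1):n()) %>%
    slice_head(n = keep)
}

# Made-up stand-ins: the second sheet has two more title rows than the first.
match_list <- list(
  ruzomberok = tibble(title_a = c("title", paste0("item_", 1:20)),
                      x2 = "min", abdul_zubairu = "0"),
  senica     = tibble(title_b = c("title", "subtitle", "date", paste0("item_", 1:20)),
                      x2 = "min", abdul_zubairu = "0")
)

# One skip value per file, in the same order as match_list.
# With real files, these could come from a (hypothetical) rule on the file name,
# e.g. ifelse(grepl("senica", files), 3, 1).
skips <- c(1, 3)

# Map() calls clean_match(df, skip) for each pair and keeps the names of match_list.
cleaned <- Map(clean_match, match_list, skips)
```

Both cleaned tables now start at the first real data row, even though their raw layouts differ.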

I can provide more information if this is not enough.

This is the code I have for now:

```r
library(readxl)
library(purrr)
library(tidyverse)
library(janitor)
library(stringr)
library(plyr) # loaded after dplyr, plyr masks several dplyr verbs such as rename(), hence dplyr::rename() below

#### get list of paths to our .xlsx files ---- make sure to have full.names TRUE - appends path directory -----
# "\\.xlsx$" matches only names ending in .xlsx (pattern is a regular expression)
match_xlsx <- list.files("C:/Users/User/Desktop/astn", pattern = "\\.xlsx$", full.names = TRUE, all.files = FALSE)

#### read specific sheet from excel ----
match_list <- lapply(match_xlsx, read_xlsx, sheet = "Overview")

#### create individual dataframes ----
for (i in seq_along(match_list)) {
  assign(paste0("match", i), match_list[[i]])
}

#### Ruzomberok ----
ruzomberok <- match1 %>%
  janitor::clean_names() %>%
  select(fortuna_liga_2_kolo_as_tn_ruzomberok_27_7_2019:abdul_zubairu) %>%
  slice(2:n()) %>% # remove row 1
  slice(1:16) %>% # keep only the first 16 remaining rows
  dplyr::rename(sport_item = fortuna_liga_2_kolo_as_tn_ruzomberok_27_7_2019,
                uom = x2)

#### Senica ----
senica <- match2 %>%
  janitor::clean_names() %>%
  select(fortuna_liga_senica_as_trencin_1_kolo_20_7_2019:abdul_zubairu) %>%
  slice(4:n()) %>% # remove rows 1 to 3
  slice(1:16) %>% # keep only the first 16 remaining rows
  dplyr::rename(sport_item = fortuna_liga_senica_as_trencin_1_kolo_20_7_2019,
                uom = x2)
```
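Instead of creating `match1`, `match2`, ... with `assign()`, the sheets can stay in one list named after their files, which also makes it easy to stack all matches into a single table later. A minimal sketch, assuming hypothetical file names `ruzomberok.xlsx` and `senica.xlsx` and made-up cleaned data in place of real reads:

```r
library(dplyr)

# Hypothetical paths, standing in for the output of list.files(..., full.names = TRUE)
files <- c("C:/Users/User/Desktop/astn/ruzomberok.xlsx",
           "C:/Users/User/Desktop/astn/senica.xlsx")

# Made-up cleaned results, one per file; in practice these would come from
# lapply(files, ...) reading and cleaning each sheet.
cleaned <- list(
  tibble(sport_item = c("item_1", "item_2"), uom = "min", abdul_zubairu = c("90", "45")),
  tibble(sport_item = c("item_1", "item_2"), uom = "min", abdul_zubairu = c("80", "60"))
)

# Name each element after its file instead of creating match1, match2, ... with assign()
names(cleaned) <- tools::file_path_sans_ext(basename(files))

# A single match is then available by name
print(cleaned[["senica"]])

# All matches can be stacked into one data frame, with a column recording the source file
all_matches <- bind_rows(cleaned, .id = "match")
```

Keeping everything in one list avoids 30 global variables and lets the same code handle any number of files.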

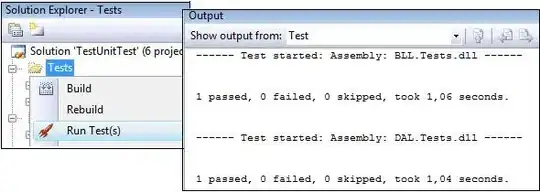

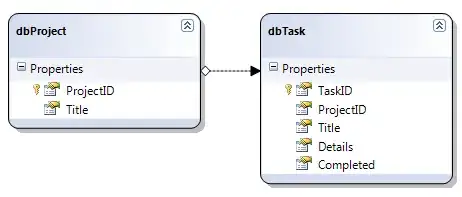

The two images show example files. I need to remove the rows marked with red squares and keep only the rows marked in purple. However, the second file has one more row to remove than the first. Does this help?
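If the rows to keep always begin with the same label, the start row can be located in each file instead of being hard-coded, so an extra row above the data no longer matters. This sketch assumes such a label exists in the first column of every file; `clean_from_marker()`, the `first_item` value `"item_1"`, and the sheet contents are all hypothetical.

```r
library(dplyr)

# Hypothetical helper: start at the first row whose first column equals `first_item`
# (an assumed label present in every file), then keep `keep` rows.
clean_from_marker <- function(df, first_item, keep = 16) {
  start <- which(df[[1]] == first_item)[1]   # first matching row; NA if none
  if (is.na(start)) stop("marker '", first_item, "' not found in the first column")
  df %>%
    select(1:abdul_zubairu) %>%              # first column up to abdul_zubairu, by position
    rename(sport_item = 1, uom = 2) %>%      # rename by position
    slice(start:n()) %>%                     # drop everything above the marker row
    slice_head(n = keep)
}

# Made-up stand-ins: the second sheet has one extra row above the data.
sheet_a <- tibble(a = c("title", paste0("item_", 1:20)), x2 = "min", abdul_zubairu = "0")
sheet_b <- tibble(b = c("title", "extra", paste0("item_", 1:20)), x2 = "min", abdul_zubairu = "0")

# The same call handles both layouts because the start row is searched for, not fixed.
cleaned <- lapply(list(sheet_a, sheet_b), clean_from_marker, first_item = "item_1")
```

The function stops with an error when the label is missing, which makes an unexpected layout visible instead of silently keeping the wrong rows.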