The following code finds the best-focus image within a set most of the time, but for some sets it returns a higher score for an image that, to my eye, is much blurrier.

I am using OpenCV 3.4.2 on Linux and/or Mac.

```java
import org.opencv.core.*;
import org.opencv.imgproc.Imgproc;

public class LaplacianExample {

    public static double calcSharpnessScore(Mat srcImage) {
        // Remove noise with a 3x3 Gaussian filter
        Mat filteredImage = new Mat();
        Imgproc.GaussianBlur(srcImage, filteredImage, new Size(3, 3), 0, 0, Core.BORDER_DEFAULT);

        // Laplacian into a signed 64-bit float image
        int kernelSize = 3;
        double scale = 1;
        double delta = 0;
        Mat lplImage = new Mat();
        Imgproc.Laplacian(filteredImage, lplImage, CvType.CV_64F, kernelSize, scale, delta, Core.BORDER_DEFAULT);

        // Convert back to CV_8U: takes the absolute value and saturates anything above 255
        Mat absLplImage = new Mat();
        Core.convertScaleAbs(lplImage, absLplImage);

        // The standard deviation of the absolute image is the input for the sharpness score
        MatOfDouble mean = new MatOfDouble();
        MatOfDouble std = new MatOfDouble();
        Core.meanStdDev(absLplImage, mean, std);

        // Score = variance (std squared) of the first channel
        return Math.pow(std.get(0, 0)[0], 2);
    }
}
```
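One detail worth ruling out: `Core.convertScaleAbs` writes an 8-bit image, so each Laplacian response is replaced by its absolute value and anything above 255 is saturated before `meanStdDev` sees it. The commonly used "variance of the Laplacian" score is computed on the signed `CV_64F` result instead. The dependency-free sketch below (plain Java, no OpenCV; the class name, the `double[][]` grayscale representation and the two spot patterns are all hypothetical) compares both variants on a single bright pixel and on a smoothed version of the same spot. It uses a simple 4-neighbour kernel rather than OpenCV's `ksize = 3` aperture and skips the Gaussian pre-blur, so the numbers are only illustrative.

```java
/**
 * Hypothetical, dependency-free illustration: variance of a 4-neighbour Laplacian
 * computed on the signed response vs. on |response| clipped to 255.
 */
final class LaplacianVarianceSketch {

    // 4-neighbour Laplacian at (r, c); callers only pass interior pixels
    static double laplacianAt(double[][] img, int r, int c) {
        return img[r - 1][c] + img[r + 1][c] + img[r][c - 1] + img[r][c + 1] - 4.0 * img[r][c];
    }

    // Population variance of the Laplacian over interior pixels (border skipped).
    // absAndClip = true mimics an 8-bit conversion: min(|v|, 255), without rounding.
    static double laplacianVariance(double[][] img, boolean absAndClip) {
        int rows = img.length;
        int cols = img[0].length;
        int n = (rows - 2) * (cols - 2);
        double[] values = new double[n];
        int k = 0;
        for (int r = 1; r < rows - 1; r++) {
            for (int c = 1; c < cols - 1; c++) {
                double v = laplacianAt(img, r, c);
                values[k++] = absAndClip ? Math.min(Math.abs(v), 255.0) : v;
            }
        }
        double mean = 0;
        for (double v : values) mean += v;
        mean /= n;
        double var = 0;
        for (double v : values) var += (v - mean) * (v - mean);
        return var / n;
    }

    // Hypothetical test pattern: 7x7 dark image with a bright spot at the centre
    static double[][] spot(double centre, double edge, double corner) {
        double[][] img = new double[7][7];
        img[3][3] = centre;
        img[2][3] = img[4][3] = img[3][2] = img[3][4] = edge;
        img[2][2] = img[2][4] = img[4][2] = img[4][4] = corner;
        return img;
    }

    public static void main(String[] args) {
        double[][] sharp = spot(255, 0, 0);     // single bright pixel
        double[][] smooth = spot(200, 100, 50); // same spot, spread out
        System.out.printf("signed:   sharp=%.2f smooth=%.2f%n",
                laplacianVariance(sharp, false), laplacianVariance(smooth, false));
        System.out.printf("abs+clip: sharp=%.2f smooth=%.2f%n",
                laplacianVariance(sharp, true), laplacianVariance(smooth, true));
    }
}
```

On these two patterns the signed variance separates the spots by a factor of about 5, while the abs-and-clip variant narrows that to about 3.2, mostly because the sharp pixel's response of -1020 is clipped to 255. That does not prove it explains the misranked images, but small, bright fluorescent features are exactly where saturation would compress the score.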

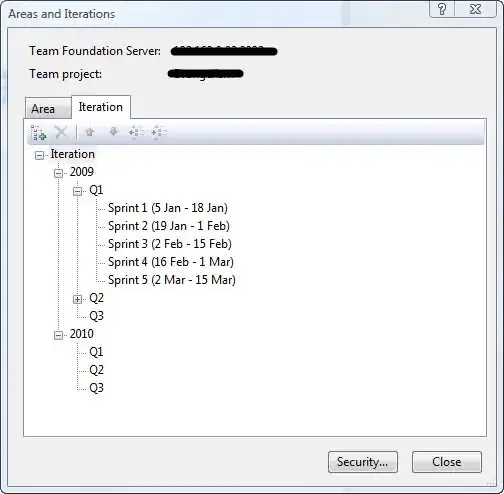

Here are two images taken under the same illumination (fluorescence, DAPI), imaged from below a microscope slide while attempting to auto-focus on the coating/mask on the top surface of the slide.
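For the auto-focus step itself, the selection usually reduces to taking the frame with the highest focus score across the z-stack. The sketch below is a hypothetical helper (the class and method names are illustrative, not from OpenCV); with real images one would presumably pass a `List<Mat>` and `LaplacianExample::calcSharpnessScore` as the scoring function. It also flags when the best frame sits at either end of the stack, since the true focus may then lie outside the scanned range.

```java
import java.util.List;
import java.util.function.ToDoubleFunction;

/** Hypothetical helper: pick the frame with the highest focus score from a z-stack. */
final class FocusStackSketch {

    // Returns the index of the highest-scoring frame; ties keep the earliest frame
    static <T> int bestFocusIndex(List<T> frames, ToDoubleFunction<T> focusScore) {
        if (frames.isEmpty()) {
            throw new IllegalArgumentException("empty focus stack");
        }
        int best = 0;
        double bestScore = focusScore.applyAsDouble(frames.get(0));
        for (int i = 1; i < frames.size(); i++) {
            double s = focusScore.applyAsDouble(frames.get(i));
            if (s > bestScore) {
                bestScore = s;
                best = i;
            }
        }
        return best;
    }

    // A peak at either end of the stack suggests focus may lie outside the scanned range
    static boolean peakAtStackEdge(int bestIndex, int stackSize) {
        return bestIndex == 0 || bestIndex == stackSize - 1;
    }

    public static void main(String[] args) {
        // Placeholder scores standing in for calcSharpnessScore results (not real measurements)
        List<Double> scores = List.of(12.0, 30.5, 41.0, 28.0, 9.5);
        int best = bestFocusIndex(scores, Double::doubleValue);
        System.out.println("best frame = " + best + ", at edge = " + peakAtStackEdge(best, scores.size()));
    }
}
```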

I'm hoping someone can explain why my algorithm fails to pick the less blurry image. Thanks!