Is there a way in Python to generate random data based on the distribution of already existing data?

Here are the statistical parameters of my dataset:

Data

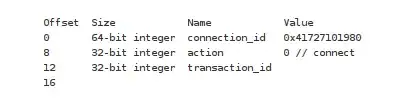

    count    209.000000
    mean       1.280144
    std        0.374602
    min        0.880000
    25%        1.060000
    50%        1.150000
    75%        1.400000
    max        4.140000

Since the data is not normally distributed, I can't generate it with np.random.normal. Any ideas?

Thank you.
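To make the goal concrete, here is a minimal sketch of one way to sample without assuming normality: inverse-transform sampling from the empirical distribution. It draws uniform numbers in [0, 1] and maps them through the empirical quantile function using `np.quantile`. The `observed` array is a hypothetical stand-in for my real column (a skewed sample of 209 values starting at 0.88), and `sample_empirical` is just an illustrative helper name.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for the real column: 209 right-skewed values above 0.88.
observed = 0.88 + rng.lognormal(mean=-1.2, sigma=0.8, size=209)


def sample_empirical(values, size, rng):
    """Draw samples through the inverse empirical CDF.

    np.quantile interpolates linearly between the sorted observations,
    so the result follows the observed shape and stays inside its range.
    """
    u = rng.uniform(0.0, 1.0, size=size)
    return np.quantile(values, u)


synthetic = sample_empirical(observed, 2400, rng)

# Compare a few quantiles of the original and the generated data.
print(np.percentile(observed, [25, 50, 75]))
print(np.percentile(synthetic, [25, 50, 75]))
```

The limitation of this approach is that it can never produce values outside the observed minimum and maximum, which is why a smoothed estimate such as a KDE may be preferable.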

Edit: Performing KDE:

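import seaborn as sns
from sklearn.neighbors import KernelDensity

# Gaussian KDE fitted to the 'y' column of my DataFrame `data`
kde = KernelDensity(kernel='gaussian', bandwidth=0.525566).fit(data['y'].to_numpy().reshape(-1, 1))

# Draw 2400 samples from the fitted density and plot their distribution
# (histplot replaces the deprecated distplot)
sns.histplot(kde.sample(2400).ravel(), stat='density', kde=True)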
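For comparison, here is a NumPy-only sketch of what sampling from a Gaussian KDE amounts to: pick observed points at random with replacement, then add Gaussian noise whose standard deviation is the bandwidth. The bandwidth here comes from Silverman's rule of thumb, which is my own substitution and not something scikit-learn applies by default. `observed` is again a hypothetical stand-in for `data['y']`, and both function names are illustrative.

```python
import numpy as np


def silverman_bandwidth(values):
    """Silverman's rule of thumb: 0.9 * min(std, IQR / 1.34) * n ** (-1/5)."""
    values = np.asarray(values, dtype=float)
    q25, q75 = np.percentile(values, [25, 75])
    spread = min(values.std(ddof=1), (q75 - q25) / 1.34)
    return 0.9 * spread * values.size ** (-0.2)


def sample_gaussian_kde(values, size, bandwidth, rng):
    """Sample a Gaussian KDE: resample the data, then add N(0, bandwidth^2) noise."""
    values = np.asarray(values, dtype=float)
    centers = rng.choice(values, size=size, replace=True)
    return centers + rng.normal(0.0, bandwidth, size=size)


rng = np.random.default_rng(0)

# Hypothetical stand-in for data['y']; replace with the real column.
observed = 0.88 + rng.lognormal(mean=-1.2, sigma=0.8, size=209)

h = silverman_bandwidth(observed)
synthetic = sample_gaussian_kde(observed, 2400, h, rng)

print("bandwidth:", h)
print("sample range:", synthetic.min(), synthetic.max())
```

Plugging the summary statistics above into the same rule (std 0.374602, IQR 1.40 - 1.06 = 0.34, n = 209) gives a bandwidth of roughly 0.08, far smaller than the 0.525566 I used, so my KDE sample may be heavily oversmoothed. Note that the added noise can push samples slightly below the observed minimum of 0.88; if that matters, the values could be clipped or redrawn.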