I recently ran a few tests on a Linux server with 2 processors, each featuring 20 physical cores (the full hardware description of one processor is given below) along with 20 additional logical cores (thus 80 cores in total). I did this because I work in a research lab where most of the code is written in Python, and I have found many online posts about Python performance varying from one computer to another.

Here is my setup:

- operating system: CentOS 7.7.1908

- two Python versions are compared: Python 3.6.8 and Python Intel 3.6.9 (2019 update 5)

- processor: Intel(R) Xeon(R) CPU E5-2698 v4 @ 2.20GHz

I ran comparisons for various basic functions of both numpy and scipy, specifically:

- scipy.sparse.linalg.spsolve: solution of a linear system (Ax=b) with A a 68000x68000 sparse matrix and x a 68000x50 sparse matrix

- scipy.sparse.linalg.eigsh: solution of a generalized eigenvalue problem with 68000x68000 sparse matrices

- numpy.dot

- scipy.linalg.cholesky

- scipy.linalg.svd

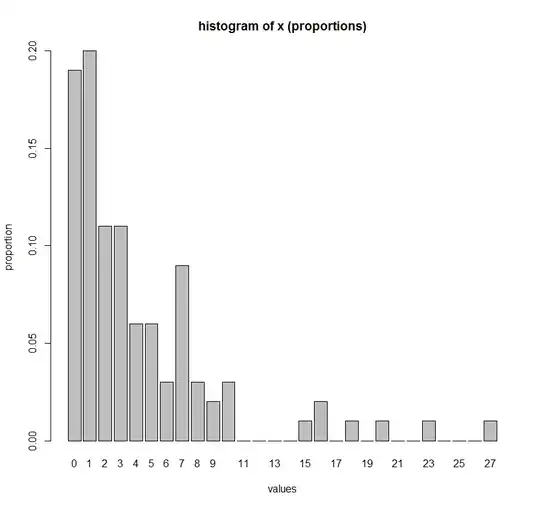

Basically, I ran each test script (between 25 and 100 times using timeit, in order to obtain reliable timings) with each version of Python, both with its default execution and pinned to a varying number (x+1) of logical CPUs by means of the command taskset --cpu-list 0-x ....
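The taskset sweep described above can be scripted. The following is a minimal sketch, not the exact procedure used for these results: the helper names `taskset_command` and `run_sweep` are hypothetical, and it assumes that `taskset` is on the PATH and that the benchmark script prints its own timing. Note that the range 0-x is inclusive, so it covers x+1 logical CPUs.

```python
import subprocess
import sys


def taskset_command(script, last_cpu, python=sys.executable):
    """Build the command that runs `script` pinned to logical CPUs 0..last_cpu."""
    if last_cpu < 0:
        raise ValueError("last_cpu must be non-negative")
    return ["taskset", "--cpu-list", f"0-{last_cpu}", python, script]


def run_sweep(script, last_cpus):
    """Run `script` once per CPU range and collect whatever it prints.

    Returns a dict mapping the number of CPUs used to the script's stdout.
    """
    outputs = {}
    for last_cpu in last_cpus:
        result = subprocess.run(
            taskset_command(script, last_cpu),
            stdout=subprocess.PIPE,
            universal_newlines=True,
            check=True,
        )
        outputs[last_cpu + 1] = result.stdout.strip()
    return outputs


# Hypothetical usage, with "bench_spsolve.py" standing in for a test script:
# print(run_sweep("bench_spsolve.py", last_cpus=[0, 1, 3, 7, 15]))
```

Starting a fresh interpreter for each range matters here, because the numerical libraries may choose their thread count when they are first loaded.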

Here is a brief summary of my results:

[Figures: one timing plot each for scipy.sparse.linalg.spsolve, scipy.sparse.linalg.eigsh, numpy.dot, scipy.linalg.cholesky and scipy.linalg.svd]

I should add that black dots and dashed lines indicate the default execution time, without using the taskset command.

As expected, Python Intel performs better than the standard Python 3.6.8. What surprises me greatly, however, is that the default execution of a script with Python Intel can be slower than its execution on a limited number of cores (3 to 5), particularly for spsolve and eigsh.

Is this normal? (I am guessing there is a balance to find between computation time and communication time between cores.) And is there any way to optimize the default execution of Python code on a multi-core processor?
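One way to probe this question might be to cap the number of threads used by the numerical libraries without pinning the process to specific CPUs, and to compare the timings with the taskset results. The sketch below is only an assumption about what may be relevant here: it relies on the `OMP_NUM_THREADS`, `MKL_NUM_THREADS` and `OPENBLAS_NUM_THREADS` environment variables, which OpenMP runtimes, Intel MKL and OpenBLAS read at start-up, and the helper names `env_with_threads` and `run_with_threads` are hypothetical. Whether a given SciPy routine honours these variables depends on the library it was built against.

```python
import os
import subprocess
import sys

# Environment variables commonly read by OpenMP runtimes, Intel MKL and OpenBLAS.
THREAD_VARS = ("OMP_NUM_THREADS", "MKL_NUM_THREADS", "OPENBLAS_NUM_THREADS")


def env_with_threads(n_threads, base_env=None):
    """Return a copy of the environment with the thread-count variables set."""
    env = dict(os.environ if base_env is None else base_env)
    for name in THREAD_VARS:
        env[name] = str(n_threads)
    return env


def run_with_threads(script, n_threads):
    """Run `script` in a fresh interpreter with a capped thread count."""
    result = subprocess.run(
        [sys.executable, script],
        env=env_with_threads(n_threads),
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return result.stdout


# Hypothetical usage, with "bench_eigsh.py" standing in for a test script:
# for n in (1, 2, 4, 8):
#     print(n, run_with_threads("bench_eigsh.py", n))
```

The variables are set before the child interpreter starts because the libraries typically read them only once, when they are loaded.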

Here are the specs of one of the cores of my server:

    processor : 0
    vendor_id : GenuineIntel
    cpu family : 6
    model : 79
    model name : Intel(R) Xeon(R) CPU E5-2698 v4 @ 2.20GHz
    stepping : 1
    microcode : 0xb00002e
    cpu MHz : 1207.958
    cache size : 51200 KB
    physical id : 0
    siblings : 20
    core id : 0
    cpu cores : 20
    apicid : 0
    initial apicid : 0
    fpu : yes
    fpu_exception : yes
    cpuid level : 20
    wp : yes
    flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch epb cat_l3 cdp_l3 invpcid_single intel_ppin intel_pt ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm rdt_a rdseed adx smap xsaveopt cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts spec_ctrl intel_stibp flush_l1d
    bogomips : 4389.92
    clflush size : 64
    cache_alignment : 64
    address sizes : 46 bits physical, 48 bits virtual
    power management:
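Since the listing above describes a single logical CPU, the number of sockets, physical cores and logical CPUs can be cross-checked by counting over the whole of /proc/cpuinfo. This is a minimal sketch: the function name `count_cpus` is hypothetical, and it assumes the usual x86 layout in which entries are separated by blank lines and carry `physical id` and `core id` fields. In the listing above, `siblings` and `cpu cores` are both 20, so such a count may help confirm how many logical CPUs the kernel actually exposes.

```python
def count_cpus(cpuinfo_text):
    """Return (sockets, physical_cores, logical_cpus) from /proc/cpuinfo text."""
    sockets, cores, logical = set(), set(), 0
    # Each logical CPU is described by one block; blocks are separated by blank lines.
    for block in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            key, sep, value = line.partition(":")
            if sep:
                fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue
        logical += 1
        physical_id = fields.get("physical id", "0")
        sockets.add(physical_id)
        # A physical core is identified by its socket and its core id.
        cores.add((physical_id, fields.get("core id", fields["processor"])))
    return len(sockets), len(cores), logical


# Usage on a Linux machine:
# with open("/proc/cpuinfo") as handle:
#     print(count_cpus(handle.read()))
```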

Also, here are the scripts that I used: (1) for spsolve:

    #!/usr/bin/env python
    import timeit

    setup = '''
    import numpy
    import scipy.io
    from scipy.sparse.linalg import spsolve
    from scipy import sparse

    # loadmat returns a dict of variables; the matrix is read from the
    # 'K' entry, as in the eigsh script
    K = scipy.io.loadmat('K0.mat', struct_as_record=False, squeeze_me=True)
    K = K['K'].tocsc()

    def problin(K):
        # Right-hand side: the first 50 columns of the identity matrix
        MS = numpy.zeros([numpy.shape(K)[0], 50])
        for i in range(50):
            MS[i, i] = 1
        MS = sparse.csc_matrix(MS)
        x = spsolve(-K, MS)
        return x
    '''

    code = '''
    x = problin(K)
    '''

    count = 5
    t = timeit.Timer(code, setup=setup)
    print("spsolve:", t.timeit(count)/count, "sec")
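Because K0.mat is not included here, the spsolve test cannot be reproduced as is. The sketch below builds a synthetic stand-in so that the same kind of call can be timed elsewhere. It is an assumption for illustration only: the function name `make_test_system` is hypothetical, and the tridiagonal 1-D Laplacian is much smaller and far simpler than the 68000x68000 matrix used above, so its timings are not comparable with the results reported here.

```python
import numpy as np
from scipy import sparse
from scipy.sparse.linalg import spsolve


def make_test_system(n=2000, n_rhs=5):
    """Build a sparse symmetric positive definite matrix and a sparse right-hand side."""
    # 1-D Laplacian (tridiagonal), stored in CSC format like K in the script above.
    main = 2.0 * np.ones(n)
    off = -1.0 * np.ones(n - 1)
    K = sparse.diags([off, main, off], offsets=[-1, 0, 1], format="csc")
    # First n_rhs columns of the identity, mirroring the MS matrix of the script.
    rhs = sparse.eye(n, n_rhs, format="csc")
    return K, rhs


K, rhs = make_test_system()
x = spsolve(K, rhs)                   # sparse solution, one column per right-hand side
residual = abs(K @ x - rhs).max()     # largest entry of |K x - rhs|
print("solution shape:", x.shape, "max residual:", residual)
```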

(2) for eigsh:

    #!/usr/bin/env python
    import timeit

    setup = '''
    import numpy
    import scipy.io
    from scipy.sparse.linalg import eigsh

    K = scipy.io.loadmat('K0.mat', struct_as_record=False, squeeze_me=True)
    M = scipy.io.loadmat('M0.mat', struct_as_record=False, squeeze_me=True)
    K = K['K'].tocsc()
    M = M['M'].tocsc()

    def vp(K, M):
        # 10 eigenpairs of the generalized problem K z = w M z,
        # computed in shift-invert mode around sigma=1
        w, z = eigsh(K, 10, M, sigma=1)
        f = numpy.sqrt(w)/2/numpy.pi
        return f
    '''

    code = '''
    f = vp(K,M)
    '''

    count = 5
    t = timeit.Timer(code, setup=setup)
    print("eigsh:", t.timeit(count)/count, "sec")

(3) for cholesky and SVD, I found the scripts online:

    #!/usr/bin/env python
    import timeit

    setup = "import numpy;\
             import scipy.linalg as linalg;\
             x = numpy.random.random((1000,1000));\
             z = numpy.dot(x, x.T)"

    count = 25

    t = timeit.Timer("linalg.cholesky(z, lower=True)", setup=setup)
    print("cholesky:", t.timeit(count)/count, "sec")

    t = timeit.Timer("linalg.svd(z)", setup=setup)
    print("svd:", t.timeit(count)/count, "sec")

(4) dot:

    #!/usr/bin/env python
    import numpy
    from numpy.distutils.system_info import get_info
    import sys
    import timeit

    print("version: %s" % numpy.__version__)
    print("maxint: %i\n" % sys.maxsize)

    info = get_info('blas_opt')
    print('BLAS info:')
    for kk, vv in info.items():
        print(' * ' + kk + ' ' + str(vv))

    setup = "import numpy; x = numpy.random.random((2000, 2000))"
    count = 100

    t = timeit.Timer("numpy.dot(x, x.T)", setup=setup)
    print("\ndot: %f sec" % (t.timeit(count) / count))
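The scripts above each report a single mean over `count` runs, which hides run-to-run variation. As a possible refinement, the sketch below uses `timeit.repeat` to time several independent batches and reports the minimum, median and maximum per-call time. The function name `summarize_timings` is hypothetical, and the default values of `repeat` and `number` are arbitrary choices, not the ones used for the results in this post.

```python
import statistics
import timeit


def summarize_timings(stmt, setup="pass", repeat=5, number=10):
    """Time `stmt` in `repeat` independent batches of `number` runs each.

    Returns the per-call time (in seconds) of the fastest, median and
    slowest batch.
    """
    totals = timeit.repeat(stmt, setup=setup, repeat=repeat, number=number)
    per_call = [total / number for total in totals]
    return {
        "min": min(per_call),
        "median": statistics.median(per_call),
        "max": max(per_call),
    }


# Hypothetical usage with the setup string of the dot script:
# setup = "import numpy; x = numpy.random.random((2000, 2000))"
# print(summarize_timings("numpy.dot(x, x.T)", setup=setup, repeat=5, number=20))
```

A large gap between the minimum and the maximum would suggest that other activity on the server, or thread scheduling, is affecting individual runs.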