I'm following the ROC graph example given in sklearn docs here (you can download a Jupyter notebook from here). It generates a ROC graph for the multi-class problem over the Iris dataset.

In the original example, the predictions are generated using the SVM classifier's decision_function method, which generate this graph:

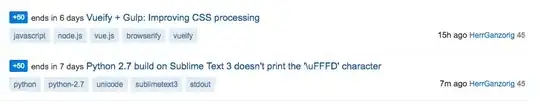

When I change it to generate predictions using predict_proba, the ROC graph changes dramatically to (mostly in class 1):

I do not understand why this happens. Prediction probabilities are determined by the decision function, so how come there's such a huge change in class 1?

EDIT: The change is:

classifier.fit(X_train, y_train).decision_function(X_test) becomes y_score = classifier.fit(X_train, y_train).predict_proba(X_test)

EDIT 2: Full code I'm running -

print(__doc__)

import numpy as np

import matplotlib.pyplot as plt

from itertools import cycle

from sklearn import svm, datasets

from sklearn.metrics import roc_curve, auc

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import label_binarize

from sklearn.multiclass import OneVsRestClassifier

from scipy import interp

from sklearn.metrics import roc_auc_score

# Import some data to play with

iris = datasets.load_iris()

X = iris.data

y = iris.target

# Binarize the output

y = label_binarize(y, classes=[0, 1, 2])

n_classes = y.shape[1]

# Add noisy features to make the problem harder

random_state = np.random.RandomState(0)

n_samples, n_features = X.shape

X = np.c_[X, random_state.randn(n_samples, 200 * n_features)]

# shuffle and split training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=.5,

random_state=0)

# Learn to predict each class against the other

classifier = OneVsRestClassifier(svm.SVC(kernel='linear', probability=True,

random_state=random_state))

y_score = classifier.fit(X_train, y_train).predict_proba(X_test) # Here's my change

# Compute ROC curve and ROC area for each class

fpr = dict()

tpr = dict()

roc_auc = dict()

for i in range(n_classes):

fpr[i], tpr[i], _ = roc_curve(y_test[:, i], y_score[:, i])

roc_auc[i] = auc(fpr[i], tpr[i])

# Compute micro-average ROC curve and ROC area

fpr["micro"], tpr["micro"], _ = roc_curve(y_test.ravel(), y_score.ravel())

roc_auc["micro"] = auc(fpr["micro"], tpr["micro"])

plt.figure()

lw = 2

plt.plot(fpr[2], tpr[2], color='darkorange',

lw=lw, label='ROC curve (area = %0.2f)' % roc_auc[2])

plt.plot([0, 1], [0, 1], color='navy', lw=lw, linestyle='--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Receiver operating characteristic example')

plt.legend(loc="lower right")

plt.show()

# First aggregate all false positive rates

all_fpr = np.unique(np.concatenate([fpr[i] for i in range(n_classes)]))

# Then interpolate all ROC curves at this points

mean_tpr = np.zeros_like(all_fpr)

for i in range(n_classes):

mean_tpr += interp(all_fpr, fpr[i], tpr[i])

# Finally average it and compute AUC

mean_tpr /= n_classes

fpr["macro"] = all_fpr

tpr["macro"] = mean_tpr

roc_auc["macro"] = auc(fpr["macro"], tpr["macro"])

# Plot all ROC curves

plt.figure()

plt.plot(fpr["micro"], tpr["micro"],

label='micro-average ROC curve (area = {0:0.2f})'

''.format(roc_auc["micro"]),

color='deeppink', linestyle=':', linewidth=4)

plt.plot(fpr["macro"], tpr["macro"],

label='macro-average ROC curve (area = {0:0.2f})'

''.format(roc_auc["macro"]),

color='navy', linestyle=':', linewidth=4)

colors = cycle(['aqua', 'darkorange', 'cornflowerblue'])

for i, color in zip(range(n_classes), colors):

plt.plot(fpr[i], tpr[i], color=color, lw=lw,

label='ROC curve of class {0} (area = {1:0.2f})'

''.format(i, roc_auc[i]))

plt.plot([0, 1], [0, 1], 'k--', lw=lw)

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Some extension of Receiver operating characteristic to multi-class')

plt.legend(loc="lower right")

plt.show()

y_prob = classifier.predict_proba(X_test)

macro_roc_auc_ovo = roc_auc_score(y_test, y_prob, multi_class="ovo",

average="macro")

weighted_roc_auc_ovo = roc_auc_score(y_test, y_prob, multi_class="ovo",

average="weighted")

macro_roc_auc_ovr = roc_auc_score(y_test, y_prob, multi_class="ovr",

average="macro")

weighted_roc_auc_ovr = roc_auc_score(y_test, y_prob, multi_class="ovr",

average="weighted")

print("One-vs-One ROC AUC scores:\n{:.6f} (macro),\n{:.6f} "

"(weighted by prevalence)"

.format(macro_roc_auc_ovo, weighted_roc_auc_ovo))

print("One-vs-Rest ROC AUC scores:\n{:.6f} (macro),\n{:.6f} "

"(weighted by prevalence)"

.format(macro_roc_auc_ovr, weighted_roc_auc_ovr))