If you rephrase the return in fastSin as

return (1-P) * y + P * (y * abs(y))

And rewrite y as (for x>0 )

y = 4 * x * (pi-x) / (pi * pi)

you can see that y is a parabolic first-order approximation to sin(x) chosen so that it passes through (0,0), (pi/2,1) and (pi,0), and is symmetrical about x=pi/2.

Thus we can only expect our function to be a good approximation from 0 to pi. If we want values outside that range we can use the 2-pi periodicity of sin(x) and that sin(x+pi) = -sin(x).

The y*abs(y) is a "correction term" which also passes through those three points. (I'm not sure why y*abs(y) is used rather than just y*y since y is positive in the 0-pi range).

This form of overall approximation function guarantees that a linear blend of the two functions y and y*y, (1-P)*y + P * y*y will also pass through (0,0), (pi/2,1) and (pi,0).

We might expect y to be a decent approximation to sin(x), but the hope is that by picking a good value for P we get a better approximation.

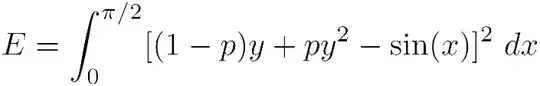

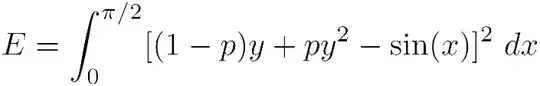

One question is "How was P chosen?". Personally, I'd chose the P that produced the least RMS error over the 0,pi/2 interval. (I'm not sure that's how this P was chosen though)

Minimizing this wrt. P gives

This can be rearranged and solved for p

Wolfram alpha evaluates the initial integral to be the quadratic

E = (16 π^5 p^2 - (96 π^5 + 100800 π^2 - 967680)p + 651 π^5 - 20160 π^2)/(1260 π^4)

which has a minimum of

min(E) = -11612160/π^9 + 2419200/π^7 - 126000/π^5 - 2304/π^4 + 224/π^2 + (169 π)/420

≈ 5.582129689596371e-07

at

p = 3 + 30240/π^5 - 3150/π^3

≈ 0.2248391013559825

Which is pretty close to the specified P=0.225.

You can raise the accuracy of the approximation by adding an additional correction term. giving a form something like return (1-a-b)*y + a y * abs(y) + b y * y * abs(y). I would find a and b by in the same way as above, this time giving a system of two linear equations in a and b to solve, rather than a single equation in p. I'm not going to do the derivation as it is tedious and the conversion to latex images is painful... ;)

NOTE: When answering another question I thought of another valid choice for P.

The problem is that using reflection to extend the curve into (-pi,0) leaves a kink in the curve at x=0. However, I suspect we can choose P such that the kink becomes smooth.

To do this take the left and right derivatives at x=0 and ensure they are equal. This gives an equation for P.