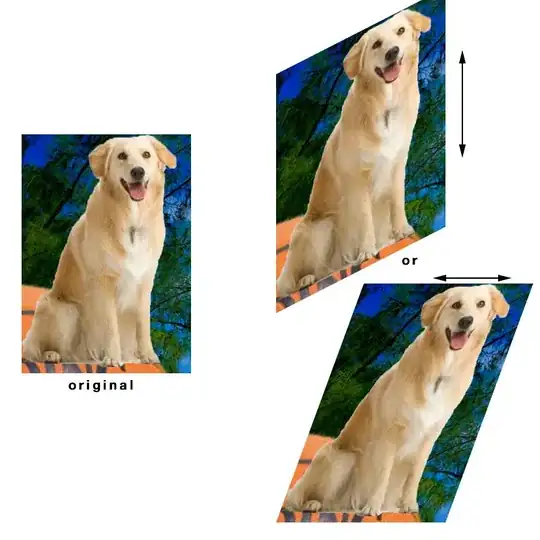

I have 40 GB of CSV files. After reading them, I need to perform a series of transformations, one of which explodes a column. After that transformation I get the shuffle spill shown in the screenshot above. I understand why this happens: the explosion is based on a broadcast variable lookup, which produces a very skewed set of results.

My question is: how can I mitigate the spill? I tried repartitioning before the explode function by tuning the `spark.sql.shuffle.partitions` configuration parameter, to ensure that the shuffle partitions are of equal size, but that didn't help.
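
To make the skew concrete, here is a minimal plain-Python sketch (not Spark code, and not the actual job). `LOOKUP`, the key names, and the fan-out of 1000 are made-up assumptions standing in for the broadcast variable. It suggests why equal row counts per partition before the explode do not necessarily give equal sizes afterwards:

```python
# Hypothetical stand-in for the broadcast lookup: key -> values to explode into.
# "hot" fans out to 1000 rows, every other key to a single row.
LOOKUP = {"hot": list(range(1000)), "a": [0], "b": [0], "c": [0]}


def explode(rows, lookup):
    """Mimic an explode: emit one output row per looked-up value."""
    return [(key, value) for key in rows for value in lookup.get(key, [])]


def round_robin(rows, num_partitions):
    """Split rows into partitions with (nearly) equal row counts."""
    parts = [[] for _ in range(num_partitions)]
    for i, row in enumerate(rows):
        parts[i % num_partitions].append(row)
    return parts


# Eight input rows, only one of which carries the hot key.
rows = ["hot", "a", "b", "c", "a", "b", "c", "a"]
partitions = round_robin(rows, 4)

sizes_before = [len(p) for p in partitions]
sizes_after = [len(explode(p, LOOKUP)) for p in partitions]

print(sizes_before)  # [2, 2, 2, 2]
print(sizes_after)   # [1001, 2, 2, 2]
```

In this toy setup the partitions are perfectly balanced going in, yet a single partition ends up holding almost all of the exploded rows.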

Any suggestions or literature on the topic would be greatly appreciated!