I'm developing a video editing app for iOS in my spare time.

I just resumed work on it after several weeks of attending to other projects and, even though I haven't made any significant changes to the code, it now crashes every time I try to export my video composition.

I checked out and built the exact same commit that I successfully uploaded to TestFlight back then (it was working on several devices without crashing), so perhaps it is an issue with the latest Xcode / iOS SDK, which I have updated since then?

The code crashes in `_xpc_api_misuse`, on the thread `com.apple.coremedia.basicvideocompositor.output`:

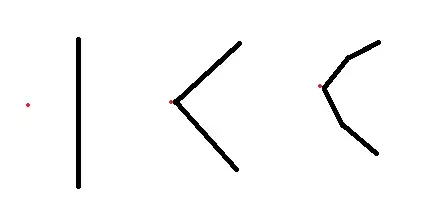

Debug Navigator:

At the time of the crash, there are 70+ threads in the debug navigator, so perhaps something is wrong and the app is using too many threads (I have never seen this many).

My app overlays a 'watermark' on the exported video using a text layer. After playing around, I discovered that the crash can be averted if I comment out the watermark code:

    guard let exporter = AVAssetExportSession(asset: composition, presetName: AVAssetExportPresetHighestQuality) else {
        return failure(ProjectError.failedToCreateExportSession)
    }

    guard let documents = try? FileManager.default.url(for: .documentDirectory, in: .userDomainMask, appropriateFor: nil, create: true) else {
        return failure(ProjectError.temporaryOutputDirectoryNotFound)
    }

    let dateFormatter = DateFormatter()
    dateFormatter.dateFormat = "yyyy-MM-dd_HHmmss"
    let fileName = dateFormatter.string(from: Date())
    let fileExtension = "mov"
    let fileURL = documents.appendingPathComponent(fileName).appendingPathExtension(fileExtension)

    exporter.outputURL = fileURL
    exporter.outputFileType = AVFileType.mov
    exporter.shouldOptimizeForNetworkUse = true // check if needed

    // OFFENDING BLOCK (commenting out averts crash)
    if addWaterMark {
        let frame = CGRect(origin: .zero, size: videoComposition.renderSize)
        let watermark = WatermarkLayer(frame: frame)
        let parentLayer = CALayer()
        let videoLayer = CALayer()
        parentLayer.frame = frame
        videoLayer.frame = frame
        parentLayer.addSublayer(videoLayer)
        parentLayer.addSublayer(watermark)
        videoComposition.animationTool = AVVideoCompositionCoreAnimationTool(postProcessingAsVideoLayer: videoLayer, in: parentLayer)
    }
    // END OF OFFENDING BLOCK

    exporter.videoComposition = videoComposition

    exporter.exportAsynchronously {
        // etc.

The code for the watermark layer is:

    class WatermarkLayer: CATextLayer {

        private let defaultFontSize: CGFloat = 48
        private let rightMargin: CGFloat = 10
        private let bottomMargin: CGFloat = 10

        init(frame: CGRect) {
            super.init()

            guard let appName = Bundle.main.infoDictionary?["CFBundleName"] as? String else {
                fatalError("!!!")
            }

            self.foregroundColor = CGColor.srgb(r: 255, g: 255, b: 255, a: 0.5)
            self.backgroundColor = CGColor.clear
            self.string = String(format: String.watermarkFormat, appName)
            self.font = CTFontCreateWithName(String.watermarkFontName as CFString, defaultFontSize, nil)
            self.fontSize = defaultFontSize
            self.shadowOpacity = 0.75
            self.alignmentMode = .right
            self.frame = frame
        }

        required init?(coder: NSCoder) {
            fatalError("init(coder:) has not been implemented. Use init(frame:) instead.")
        }

        override func draw(in ctx: CGContext) {
            let height = self.bounds.size.height
            let fontSize = self.fontSize
            let yDiff = (height - fontSize) - fontSize / 10 - bottomMargin // Bottom (minus margin)

            ctx.saveGState()
            ctx.translateBy(x: -rightMargin, y: yDiff)
            super.draw(in: ctx)
            ctx.restoreGState()
        }
    }

Any ideas what could be happening?

Perhaps my code is doing something wrong that somehow 'got a pass' in a previous SDK, due to some Apple bug that has since been fixed or an implementation 'hole' that has been plugged?

UPDATE: I downloaded Ray Wenderlich's sample project for video editing and tried to add 'subtitles' to a video (I had to tweak the outdated project so that it would compile under Xcode 11).

Lo and behold, it crashes in the exact same way.

UPDATE 2: I have now tried on a device (iPhone 8 running the latest iOS 13.5) and it works, with no crash. The iOS 13.5 Simulators still crash, however. When I originally posted the question (iOS 13.4?), I'm sure it was crashing on both device and Simulator.

I am downloading the iOS 12.0 Simulators to check, but it's still a few gigabytes away...
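
In the meantime, here is a minimal sketch of a stopgap, assuming the crash really is Simulator-only as UPDATE 2 suggests. It uses Swift's `#if targetEnvironment(simulator)` compile-time check to skip the watermark on Simulator builds. The helper name `shouldAttachWatermark(requested:)` is hypothetical and not part of my project; it only hides the symptom and does not explain the underlying crash.

```swift
// Hypothetical helper (not in the original project): decides whether the
// Core Animation watermark should be attached for the current build target.
func shouldAttachWatermark(requested: Bool) -> Bool {
    #if targetEnvironment(simulator)
    // Assumption: the export crash only reproduces on the Simulator
    // (as observed with iOS 13.5), so the animation tool is skipped there.
    return false
    #else
    // On a real device, honor whatever the caller asked for.
    return requested
    #endif
}
```

At the export call site, the existing `if addWaterMark {` condition would become `if shouldAttachWatermark(requested: addWaterMark) {`, leaving the rest of the block unchanged.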