I am trying to load some data from this web page. This is the specific part of the information I want to get:

I inspected the page and found this class and id:

So I tried this:

library(rvest)

library(stringr)

url = "http://www.aemet.es/es/eltiempo/prediccion/avisos?w=mna"

aa2 = html_nodes(read_html(url), 'div#listado-avisos.contenedor-tabla')

aa3 = data.frame(texto = str_replace_all(html_text(aa2), "[\r\n\t]", ""),
                 stringsAsFactors = FALSE)

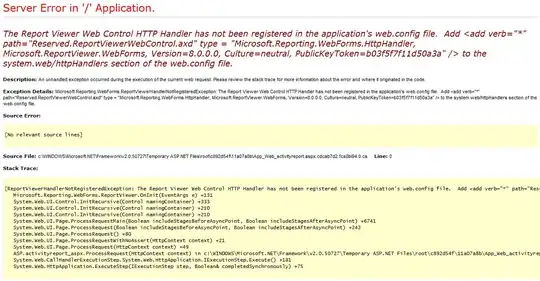

But I get a data frame with a single row and no content. What am I doing wrong?

Thanks in advance.

Update: a possible answer, thanks to QHarr:

library(httr)

library(rvest)

library(jsonlite)

url = "https://www.aemet.es/es/eltiempo/prediccion/avisos?w=mna"

download.file(url, destfile = "scrapedpage.html", quiet=TRUE)

date_value <- read_html("scrapedpage.html") %>% html_node('#fecha-seleccionada-origen') %>% html_attr('value')

url2 = paste0('https://www.aemet.es/es/api-eltiempo/resumen-avisos-geojson/PB/', date_value , '/D+1')

download.file(url2, destfile = "scrapedpage2.html", quiet=TRUE)

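# Not needed: the JSON was already saved to scrapedpage2.html above, and `headers` is never defined, so this call would fail.
# data <- httr::GET(url = "scrapedpage2.html", httr::add_headers(.headers=headers))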

avisos = jsonlite::parse_json(read_html("scrapedpage2.html") %>% html_node('p') %>% html_text())
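The last step reads the saved JSON as if it were HTML and then pulls the text back out of the `<p>` node. A possibly simpler route is to hand the JSON text straight to `jsonlite::fromJSON()`, which accepts a JSON string, a file path, or a URL. Here is a minimal sketch of that idea. The `sample_json` string and its field names (`zona`, `nivel`) are made up for illustration only; I have not checked them against what the AEMET endpoint actually returns.

```r
library(jsonlite)

# Hypothetical stand-in for the endpoint's response.
# The real payload and its field names may be different.
sample_json <- '{
  "type": "FeatureCollection",
  "features": [
    {"properties": {"zona": "A", "nivel": 1}},
    {"properties": {"zona": "B", "nivel": 2}}
  ]
}'

# fromJSON() parses the JSON text directly, with no HTML round trip
avisos <- fromJSON(sample_json)

# The array of feature objects is simplified into a data frame,
# with the nested "properties" objects as a data frame column
props <- avisos$features$properties
str(props)
```

If the downloaded file holds raw JSON, something like `fromJSON("scrapedpage2.html")` or `fromJSON(url2)` might work the same way, but that is an assumption about the response and not something verified here.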