I'm trying to perform an `isin` filter as efficiently as possible. Is there a way to broadcast `collList` using the Scala API?

Edit: I'm not looking for an alternative (I know them); I need `isin` so that my RelationProviders push the values down.

```scala
val collList = collectedDf.map(_.getAs[String]("col1")).sortWith(_ < _)
// collList.size == 200,000
val retTable = df.filter(col("col1").isin(collList: _*))
```

The list I'm passing to `isin` has up to ~200,000 unique elements.

I know this doesn't look like the best option and that a join sounds better, but I need those elements pushed down into the filters. It makes a huge difference when reading: my storage is Kudu (the same applies to HDFS + Parquet), the base data is too big, and queries touch only around 1% of it. I've already measured everything, and it saved me around 30 minutes of execution time :). My method also already handles the case where the `isin` list is larger than 200,000.
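For readers wondering what handling lists larger than 200,000 might look like, here is a minimal sketch, assuming the split is done by chunking the values and unioning one `isin` filter per chunk. The helper `isinChunked` and its `maxPerFilter` parameter are hypothetical (not the method described above), and the code assumes `spark-sql` is on the classpath.

```scala
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.functions.{col, lit}

object ChunkedIsin {
  // Hypothetical helper: one isin filter per chunk of at most maxPerFilter values,
  // combined with union. Assumes `values` are distinct, so no row matches two chunks.
  def isinChunked(df: DataFrame, colName: String, values: Seq[String],
                  maxPerFilter: Int = 200000): DataFrame = {
    require(maxPerFilter > 0, "maxPerFilter must be positive")
    if (values.isEmpty) df.filter(lit(false)) // an empty IN list matches nothing
    else values
      .grouped(maxPerFilter)
      .map(chunk => df.filter(col(colName).isin(chunk: _*))) // each chunk keeps its own IN predicate
      .reduce(_ union _)
  }
}
```

Each branch of the union scans `df` separately, so the trade-off is one scan per chunk in exchange for keeping every predicate pushdown-friendly.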

My problem is that I'm getting Spark "task is too big" warnings (~8 MB per task). Everything works fine, so it's not a big deal, but I'd like to get rid of them and optimize further.

I've tried this, which does nothing, since I still get the warning (I guess because the broadcast variable is dereferenced with `.value` on the driver and its contents are passed to the varargs anyway):
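One way to test the guess above is to count the literals Spark records in the analyzed plan with and without the broadcast. A minimal sketch, assuming Spark 3.x with `spark-sql` on the classpath; `literalsInFilter` and `compare` are hypothetical helpers, and they rely on Catalyst internals (`queryExecution`, `Filter`, `Literal`) that are not a stable public API.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.catalyst.expressions.Literal
import org.apache.spark.sql.catalyst.plans.logical.Filter
import org.apache.spark.sql.functions.col

object BroadcastIsinCheck {
  // Counts the Literal nodes inside the first Filter condition of the analyzed plan
  def literalsInFilter(filtered: DataFrame): Int =
    filtered.queryExecution.analyzed
      .collectFirst { case f: Filter => f.condition }
      .map(cond => cond.collect { case l: Literal => l }.size)
      .getOrElse(0)

  // Returns (literals without broadcast, literals with broadcast + .value)
  def compare(spark: SparkSession, df: DataFrame, values: Seq[String]): (Int, Int) = {
    val plain = df.filter(col("col1").isin(values: _*))
    // .value is evaluated right here on the driver, so isin receives the same local Seq
    val viaBroadcast = df.filter(col("col1").isin(spark.sparkContext.broadcast(values).value: _*))
    (literalsInFilter(plain), literalsInFilter(viaBroadcast))
  }
}
```

If both counts equal the number of values, the broadcast changed nothing about what the plan carries, which would be consistent with the task size staying the same.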

```scala
val collList = collectedDf.map(_.getAs[String]("col1")).sortWith(_ < _)
val retTable = df.filter(col("col1").isin(sc.broadcast(collList).value: _*))
```

And this one, which doesn't compile:

```scala
val collList = collectedDf.map(_.getAs[String]("col1")).sortWith(_ < _)
val retTable = df.filter(col("col1").isin(sc.broadcast(collList: _*).value))
```

And this one, which doesn't work either (the "task too big" warning still appears):

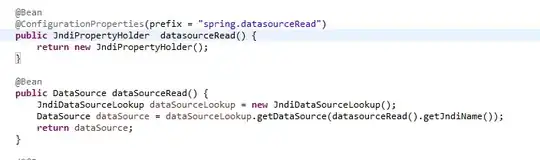

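```scala
import org.apache.spark.sql.Column
import org.apache.spark.sql.catalyst.expressions.In
import org.apache.spark.sql.functions.{col, lit}

// Broadcast the pre-built literal expressions
val broadcastedList = df.sparkSession.sparkContext.broadcast(collList.map(lit(_).expr))
// .value is read here on the driver, so the literals still end up inside the plan
val filterBroadcasted = In(col("col1").expr, broadcastedList.value.toSeq)
val retTable = df.filter(new Column(filterBroadcasted))
```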

Any ideas on how to broadcast this variable? (Hacks allowed.) Any alternative to `isin` that still allows filter pushdown is also welcome. I've seen some people doing this in PySpark, but the API is not the same.
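One hedged alternative that keeps some pushdown: derive coarse bounds from the already sorted list, push those down as a simple range predicate, and do the exact membership check with a broadcast left-semi join. This is a sketch, not a drop-in replacement; `filterByKeys` is a hypothetical name, it assumes `spark-sql` on the classpath, and it assumes the Scala sort order of the keys matches Spark's string ordering (true for plain ASCII keys).

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{broadcast, col}

object RangePlusSemiJoin {
  // Hypothetical helper: range pushdown on [head, last] of the sorted keys,
  // then exact matching via a broadcast left-semi join (no huge IN list in the plan).
  def filterByKeys(spark: SparkSession, df: DataFrame, colName: String,
                   sortedKeys: Seq[String]): DataFrame = {
    import spark.implicits._
    if (sortedKeys.isEmpty) df.limit(0) // no keys, no rows
    else {
      val keys = sortedKeys.toDF(colName)
      df.filter(col(colName).between(sortedKeys.head, sortedKeys.last)) // >= and <= predicates a source can receive
        .join(broadcast(keys), Seq(colName), "left_semi") // keeps only rows whose key is in the list
    }
  }
}
```

With truly random keys the [min, max] range may cover most of the table, so this only helps when the requested keys are clustered; an `isin` list gives the source far more precise filters.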

PS: Changes to the storage are not possible. I know partitioning (it's already partitioned, but not by that field) and similar techniques could help, but user inputs are totally random and the data is accessed and changed by many clients.