I have an existing API that runs in a loop. In each iteration it creates a connection and fetches a huge JSON payload (about 1 GB) from a service through RestTemplate, as follows:

ResponseEntity<String> response = restTemplate.exchange(uri.get().toString(), HttpMethod.POST, entity, String.class);

The response is then converted to a complex Java object with Gson. The problem with this approach is that RestTemplate converts the input stream to a String through a StringBuffer, which ends up creating lots of char[] arrays and eventually runs out of memory (OOM) when the loop runs for long enough, which is usually the case. In place of RestTemplate, I also tried HttpClient; it does the same thing (it keeps expanding a char array).

To solve the OOM issue, I refactored the API to stream the data to a file. Each iteration creates a temp file and converts its contents to objects, as follows:

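File targetFile = new File("somepath\\response.tmp");

// Stream the response body to disk instead of buffering it as a String
FileUtils.copyInputStreamToFile(response.getEntity().getContent(), targetFile);

// reader was not shown in the original snippet; assumed here to be opened over targetFile
Reader reader = new FileReader(targetFile);

List<Object> objects = gson.fromJson(reader, new TypeToken<List<Object>>(){}.getType());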
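To make the per-iteration flow concrete, here is a minimal JDK-only sketch of it. It assumes the response body is available as an InputStream and uses `Files.copy` as a stand-in for `FileUtils.copyInputStreamToFile`. The names `TempFileSpool`, `ReaderParser` and `spoolAndParse` are hypothetical, made up for this illustration, and the fake payload in `main` replaces the real service call.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.Reader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

class TempFileSpool {

    /** Hypothetical callback type: parses the spooled content from a Reader. */
    interface ReaderParser<T> {
        T parse(Reader reader) throws IOException;
    }

    /**
     * Copies the body to a fresh temp file, hands a Reader over that file
     * to the parser, and always deletes the temp file afterwards.
     */
    static <T> T spoolAndParse(InputStream body, ReaderParser<T> parser) throws IOException {
        Path tmp = Files.createTempFile("response", ".tmp");
        try {
            // Stream bytes to disk; nothing is buffered as a String here.
            try (InputStream in = body) {
                Files.copy(in, tmp, StandardCopyOption.REPLACE_EXISTING);
            }
            // Parse from the file through a Reader, closing it when done.
            try (Reader reader = Files.newBufferedReader(tmp, StandardCharsets.UTF_8)) {
                return parser.parse(reader);
            }
        } finally {
            // Remove the temp file so iterations do not pile up on disk.
            Files.deleteIfExists(tmp);
        }
    }

    public static void main(String[] args) throws IOException {
        // Fake payload standing in for the real response stream.
        InputStream fake = new ByteArrayInputStream("[1,2,3]".getBytes(StandardCharsets.UTF_8));
        // Toy parser that only counts characters.
        Long chars = spoolAndParse(fake, reader -> {
            long n = 0;
            while (reader.read() != -1) {
                n++;
            }
            return n;
        });
        System.out.println(chars);
    }
}
```

In my case the parser passed in would be something like `reader -> gson.fromJson(reader, type)`, so the Gson call stays as it is and only the file handling moves into one place.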

Is this the way to go, or is there a more effective approach to this kind of problem? Maybe pooling connections instead of creating a new one in each iteration (would this be a considerable change)?
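To show what I mean by reusing connections, here is a rough sketch using the JDK's `java.net.http.HttpClient` (Java 11+). This is a stand-in, not what the API currently uses (RestTemplate / Apache HttpClient). As far as I understand, a single shared client instance keeps connections alive and reuses them across requests. The class name `SharedClientFetcher` and the method `fetchToTempFile` are hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Files;
import java.nio.file.Path;

class SharedClientFetcher {

    // One client created once and shared by every iteration of the loop.
    private final HttpClient client = HttpClient.newHttpClient();

    /** POSTs jsonBody to uri and streams the response body into a new temp file. */
    Path fetchToTempFile(URI uri, String jsonBody) throws IOException, InterruptedException {
        Path tmp = Files.createTempFile("response", ".tmp");
        HttpRequest request = HttpRequest.newBuilder(uri)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
        // BodyHandlers.ofFile writes the body to disk as it arrives,
        // so the payload is never held in memory as a String.
        HttpResponse<Path> response = client.send(request, HttpResponse.BodyHandlers.ofFile(tmp));
        if (response.statusCode() != 200) {
            Files.deleteIfExists(tmp);
            throw new IOException("Unexpected status " + response.statusCode());
        }
        return response.body();
    }
}
```

The loop would create one `SharedClientFetcher` up front, call `fetchToTempFile` in each iteration, parse the returned file with Gson through a Reader, and delete the file afterwards.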

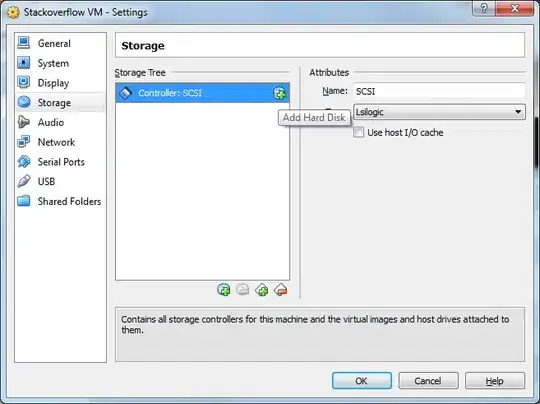

Also, when analysing the API with a 4 GB maximum heap (-Xmx) and a 2 GB initial heap (-Xms), jvisualvm shows the following:

As can be seen, a huge number of bytes has been allocated to the running thread.

Heap size during the API runtime: