I have a mono WAV file of a 'glass breaking' sound. When I plot its levels in Python using the librosa library, it shows a very large range of amplitudes, between +/- 20000 instead of +/- 1. When I open the same WAV file in Audacity, the levels are between +/- 1.

My question is: what causes this difference in the displayed amplitude levels, and how can I correct it in Python? MinMax scaling will distort the sound, and I want to avoid it if possible.

The code is:

    %matplotlib inline

    from scipy.io import wavfile
    import matplotlib.pyplot as plt
    import librosa.display

    fs1, glass_break_data = wavfile.read('test_break_glass_normalized.wav')

    sr=44100
    x = glass_break_data.astype('float')

    plt.figure(figsize=(14, 5))
    librosa.display.waveplot(x, sr=sr)

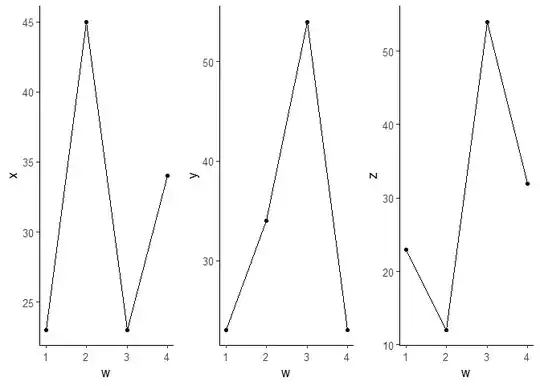
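For context, a likely explanation is that `scipy.io.wavfile.read` returns the raw integer samples for integer PCM files (for example `int16`, with values from -32768 to 32767), and `astype('float')` changes only the dtype, not the scale, whereas Audacity displays samples normalized to +/- 1. The sketch below assumes that is what is happening here; `pcm_to_float` is a hypothetical helper name, not a SciPy or librosa function. It divides by the fixed full-scale value of the integer type, which rescales the axis without changing the shape of the waveform.

```python
import numpy as np

def pcm_to_float(samples):
    """Scale integer PCM samples to floats in [-1.0, 1.0).

    Hypothetical helper: assumes `samples` came from a reader that
    returns raw integer PCM (as scipy.io.wavfile.read does for
    integer WAV files).
    """
    samples = np.asarray(samples)
    if np.issubdtype(samples.dtype, np.floating):
        # Float WAV data is assumed to be normalized already.
        return samples.astype(np.float32)
    if samples.dtype == np.uint8:
        # 8-bit WAV is unsigned with silence at 128.
        return (samples.astype(np.float32) - 128.0) / 128.0
    # Signed PCM: divide by the type's full scale, e.g. 32768 for int16.
    full_scale = float(np.iinfo(samples.dtype).max) + 1.0
    return samples.astype(np.float32) / full_scale

# Small synthetic int16 signal standing in for the data read from the file.
demo = np.array([-32768, -16384, 0, 16384, 32767], dtype=np.int16)
scaled = pcm_to_float(demo)
```

In the code above, this would mean plotting `pcm_to_float(glass_break_data)` instead of `glass_break_data.astype('float')`. Alternatively, `librosa.load(path, sr=None)` returns floating-point samples already scaled to +/- 1.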

These are the images from the notebook and Audacity: