I am implementing the nqueens min-conflict search as mentioned by

Norvig, S., & Peter, J. R. and. (2014). Artificial Intelligence A Modern Approach. In Pearson (Vol. 58, Issue 12).

The authors mention this heuristic alone is super efficient:

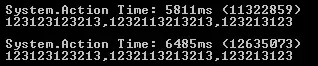

Yet when I implement it I cannot solve an over 5000 in less than a min. While the authors speed of million-queens in 50 iterations, my implementation often goes over the 1000 iterations for 5000-queens. Another question mentions similar result.

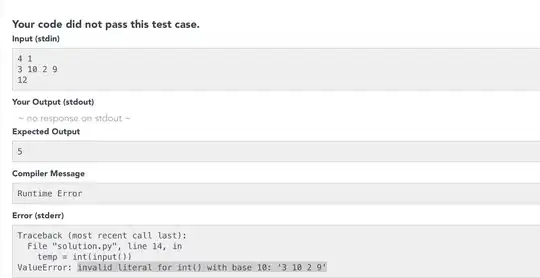

Any ideas on what I am doing wrong? Is it algorithmic or am I using a loop where I shouldn't?

update() is the main method by the way.

list<unsigned> rowsInConflict(const unsigned currentState[]) {

list<unsigned> rowsInConflict; //TODO change to map

for (unsigned row = 0; row < boardSize; row++) {

for (unsigned otherRow = 0; otherRow < boardSize; otherRow++) {

if (isConflict(row, currentState[row], otherRow, currentState[otherRow])) {

rowsInConflict.push_front(row);

debug("row in conflict " + to_string(row));

rowsInConflict.push_front(otherRow);

//return rowsInConflict;

}

}

}

return rowsInConflict;

}

bool update(unsigned currentState[]) {

unsigned randomRow, column;

list<unsigned> conflictRows = rowsInConflict(currentState);

if (conflictRows.empty()) {

return true;

}

list<unsigned>::iterator it = conflictRows.begin();

std::advance(it, rand() % conflictRows.size());

randomRow = (unsigned) *it;

column = getMinConflictColumn(currentState, randomRow);

placeQueen(currentState, randomRow, column);

return false;

}

void solve_nqueen(unsigned size, bool isVerbose) {

unsigned rowSpace[size];

while (!update(rowSpace)) {

if (iterations >= 1000) {

cout << "Random restart." << endl;

intialize(rowSpace);

iterations = 0;

}

iterations++;

}

printBoard(rowSpace);

}

};