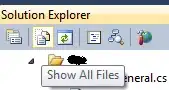

I implemented the softmax function and later discovered that it has to be stabilized to avoid numerical problems (duh). Now it is failing again: even after subtracting max(x) from my vector, the resulting values are still too large in magnitude to be used as exponents of e. The screenshot shows the code I used to pinpoint the bug; the vector in it is a sample output vector from forward propagation.

We can clearly see that the values are too big. Instead of usable probabilities, I get extremely small numbers, which lead to a small error, which in turn leads to vanishing gradients and ultimately leaves the network unable to learn.
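For reference, here is a minimal sketch of the max-subtraction approach described above, in plain Python. It is not the code from the screenshot: the function names `softmax` and `log_softmax` and the sample `logits` values are assumptions chosen only for illustration. It shows that after subtracting the maximum, every exponent is at most 0, so `exp` cannot overflow, but entries far below the maximum still underflow to 0. A log-softmax variant is included because it keeps those entries finite in log space.

```python
import math

def softmax(logits):
    """Max-shifted softmax over a list of floats (illustrative sketch)."""
    m = max(logits)
    # Every shifted value is <= 0, so exp() stays in [0, 1] and cannot overflow.
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)  # >= 1.0, because the max entry contributes exp(0) = 1
    return [e / total for e in exps]

def log_softmax(logits):
    """Log of the softmax, computed without forming tiny probabilities."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_sum for v in logits]

# Hypothetical large-magnitude outputs, standing in for the vector in the post.
logits = [1200.0, 350.0, -80.0]

probs = softmax(logits)          # entries far below the max underflow to 0.0
log_probs = log_softmax(logits)  # the same entries stay finite in log space

print(probs)
print(log_probs)
```

With inputs this far apart, the probabilities collapse to a one-hot vector no matter how the exponentials are computed, so the spread of the values themselves may be worth checking too (for example weight initialization or input scaling). That part is a guess, since the actual network is not shown.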