I am playing around with multiprocessing in Python 3 to try and understand how it works and when it's good to use it.

I am basing my examples on this question, which is really old (2012).

My computer runs Windows and has 4 physical cores (8 logical cores).

First: not segmented data

First I try to brute-force compute `numpy.sin` for a million values. The million values form a single chunk; they are not segmented.

```python
import time

import numpy

from multiprocessing import Pool

# so that iPython works
__spec__ = "ModuleSpec(name='builtins', loader=<class '_frozen_importlib.BuiltinImporter'>)"


def numpy_sin(value):
    return numpy.sin(value)


a = numpy.arange(1000000)

if __name__ == '__main__':
    pool = Pool(processes=8)

    # Whole array in one vectorised call
    start = time.time()
    result = numpy.sin(a)
    end = time.time()
    print('Single threaded {}'.format(end - start))

    # Same work distributed over the pool
    start = time.time()
    result = pool.map(numpy_sin, a)
    pool.close()
    pool.join()
    end = time.time()
    print('Multithreaded {}'.format(end - start))
```

What I get is that, no matter the number of processes, the 'multithreaded' version always takes roughly 10 times as long as the 'single-threaded' one. In Task Manager I see that not all the CPUs are maxed out, and total CPU usage fluctuates between 18% and 31%.
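A quick way to see what `pool.map(numpy_sin, a)` actually distributes in the first version: `Pool.map` calls the function once per item of the iterable it is given, and iterating over a 1-D NumPy array yields one scalar per element. So `numpy_sin` should be called a million times, each time on a single number, rather than once on the whole array. A minimal check of that, without creating a pool (`items` is just an illustrative name):

```python
import numpy

a = numpy.arange(1000000)

# Pool.map calls its function once per item of the iterable; iterating over a
# 1-D array yields its elements one at a time, exactly as list() does here
items = list(a)

print(len(items))                       # one item per element of a
print(type(items[0]))                   # a NumPy integer scalar, not an ndarray
print(numpy.ndim(items[0]))             # 0-dimensional
print(numpy.ndim(numpy.sin(items[0])))  # so each numpy_sin call works on one value
```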

So I try something else.

Second: segmented data

I try to split the original 1 million computations into 10 batches of 100,000 each.

Then I try again with 10 million computations in 10 batches of 1 million each.
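For reference, cutting a single array into 10 contiguous batches can be sketched with `numpy.array_split` and a pool that maps over the batches. Note that the code below instead builds 10 separate copies of `numpy.arange(s)`, which gives the same batch sizes. This is only a sketch under assumptions: `split_batches` and `parallel_sin` are hypothetical helper names, and `processes=3` simply mirrors `p = 3` below.

```python
import numpy
from multiprocessing import Pool


def numpy_sin(value):
    # Receives one whole batch (a 1-D array), so numpy.sin stays vectorised
    return numpy.sin(value)


def split_batches(values, n_batches):
    # Hypothetical helper: contiguous, nearly equal pieces of one array
    return numpy.array_split(values, n_batches)


def parallel_sin(values, n_batches=10, processes=3):
    batches = split_batches(values, n_batches)
    with Pool(processes=processes) as pool:
        # One call of numpy_sin per batch, not per element
        parts = pool.map(numpy_sin, batches)
    # Stitch the per-batch results back together in the original order
    return numpy.concatenate(parts)


if __name__ == '__main__':
    a = numpy.arange(1000000)
    result = parallel_sin(a)
    print(numpy.allclose(result, numpy.sin(a)))
```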

```python
import time

import numpy

from multiprocessing import Pool

# so that iPython works
__spec__ = "ModuleSpec(name='builtins', loader=<class '_frozen_importlib.BuiltinImporter'>)"


def numpy_sin(value):
    return numpy.sin(value)


p = 3        # number of worker processes
s = 1000000  # size of each batch
a = [numpy.arange(s) for _ in range(10)]  # 10 batches of s values

if __name__ == '__main__':
    print('processes = {}'.format(p))
    print('size = {}'.format(s))

    start = time.time()
    result = numpy.sin(a)
    end = time.time()
    print('Single threaded {}'.format(end - start))

    pool = Pool(processes=p)

    start = time.time()
    result = pool.map(numpy_sin, a)
    pool.close()
    pool.join()
    end = time.time()
    print('Multithreaded {}'.format(end - start))
```

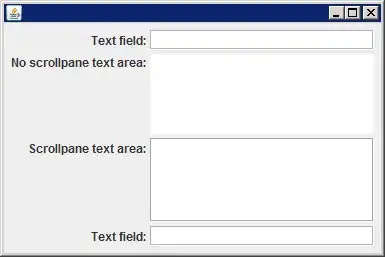

I ran this last piece of code for different processes p and different list length s, 100000and 1000000.

At least now the task Manager gives the CPU maxed out at 100% usage.

I get the following results for the elapsed times (ORANGE: multiprocess, BLUE: single):
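The runs over different `p` and `s` described above could also be automated with a small sweep. This is a sketch, not the code that produced the plot: `time_both` and `sweep` are hypothetical names, the process counts in the `__main__` block are placeholders, and it swaps `time.time` for `time.perf_counter` (the standard-library clock meant for measuring short durations). Like the second version, it creates the pool outside the timed region. The `timer` parameter is an assumption added so the sweep logic can be exercised without starting processes.

```python
import time
import numpy
from multiprocessing import Pool


def numpy_sin(value):
    return numpy.sin(value)


def time_both(p, s, n_batches=10):
    # Same workload as the second version: n_batches copies of numpy.arange(s)
    a = [numpy.arange(s) for _ in range(n_batches)]

    start = time.perf_counter()
    numpy.sin(a)
    single = time.perf_counter() - start

    # Pool creation stays outside the timed region, as in the second version
    pool = Pool(processes=p)
    start = time.perf_counter()
    pool.map(numpy_sin, a)
    pool.close()
    pool.join()
    multi = time.perf_counter() - start

    return single, multi


def sweep(process_counts, sizes, timer=time_both):
    # One row per (p, s) pair: (p, s, single-process seconds, pool seconds)
    rows = []
    for s in sizes:
        for p in process_counts:
            single, multi = timer(p, s)
            rows.append((p, s, single, multi))
    return rows


if __name__ == '__main__':
    for p, s, single, multi in sweep(process_counts=(1, 2, 4), sizes=(100000, 1000000)):
        print('p={} s={} single={:.4f}s multi={:.4f}s'.format(p, s, single, multi))
```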

So multiprocessing never wins over the single process.

Why??