I would like to perform update and insert operations using Spark. Please find below an image of the existing table:

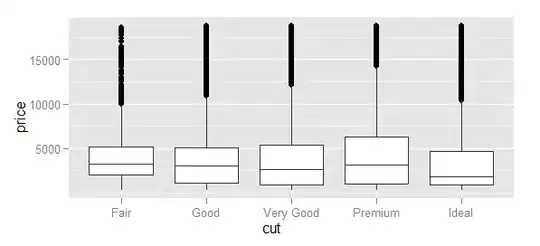

Here I am updating the location and inserttime of id 101 and inserting two more records:
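The update-and-insert described above is an upsert keyed on id. Below is a minimal Spark-free sketch of the intended result. It assumes each row has an id, a location and an insert time, and that ids within the incoming batch are unique. The real columns are only visible in the images, so `Rec`, its field names and the sample values are hypothetical.

```scala
// Hypothetical row shape; the actual table columns are only shown in the post's images.
final case class Rec(id: Int, location: String, insertTime: String)

object Upsert {
  /** Rows in `incoming` replace existing rows with the same id; rows with new ids are appended.
    * Assumes ids in `incoming` are unique. */
  def upsert(existing: Seq[Rec], incoming: Seq[Rec]): Seq[Rec] = {
    val incomingById = incoming.map(r => r.id -> r).toMap
    // Update: swap in the incoming version of any row whose id matches.
    val updated = existing.map(r => incomingById.getOrElse(r.id, r))
    // Insert: keep incoming rows whose id is not already in the table.
    val existingIds = existing.map(_.id).toSet
    updated ++ incoming.filterNot(r => existingIds.contains(r.id))
  }
}
```

Against MySQL specifically, these semantics are usually expressed with `INSERT ... ON DUPLICATE KEY UPDATE` on a table whose id is the primary key, rather than by overwriting the whole table.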

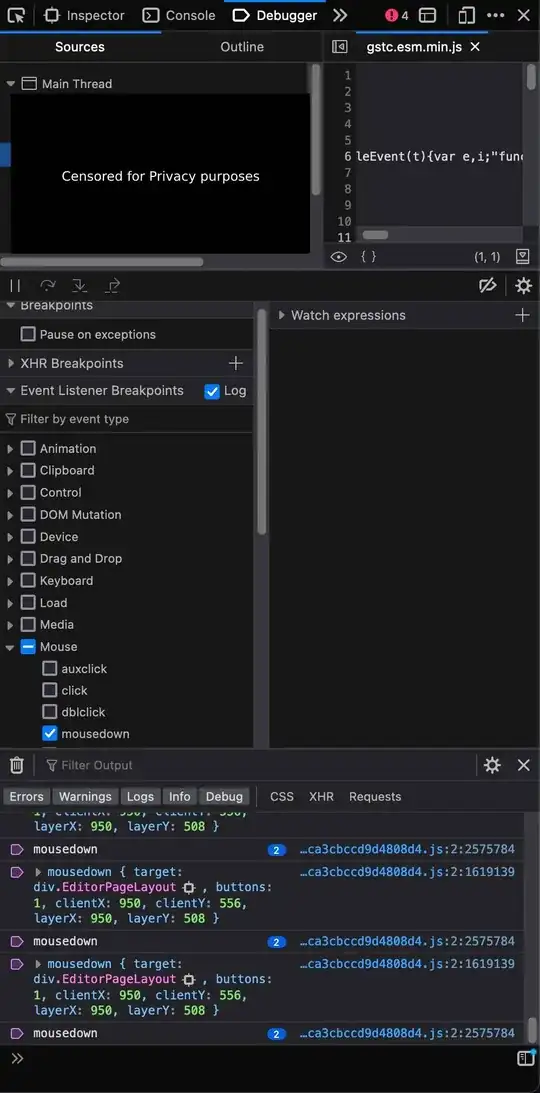

Then I write the result to the target table with mode `overwrite`:

```scala
df.write.format("jdbc")
  .option("url", "jdbc:mysql://localhost/test")
  .option("driver", "com.mysql.jdbc.Driver")
  .option("dbtable", "temptgtUpdate")
  .option("user", "root")
  .option("password", "root")
  .option("truncate", "true")
  .mode("overwrite")
  .save()
```

After executing the above command, the data inserted into the database table is corrupted.
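The post does not show how `df` was built. If it was read from `temptgtUpdate` itself (an assumption), one plausible explanation is Spark's lazy evaluation. With `mode("overwrite")` and `truncate` set to `"true"`, the target table is truncated before the job that computes `df` runs, so that job reads an already emptied table. The sketch below simulates this in plain Scala without Spark. `FakeTable`, `plan` and both overwrite helpers are hypothetical stand-ins, not Spark or JDBC APIs.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical in-memory stand-in for the MySQL table; not a Spark or JDBC API.
final class FakeTable(initial: Seq[String]) {
  private val rows = ArrayBuffer.empty[String] ++= initial
  def read(): List[String] = rows.toList
  def truncate(): Unit = rows.clear()
  def insertAll(xs: Seq[String]): Unit = rows ++= xs
}

object LazyOverwriteDemo {
  // Like a DataFrame, the "plan" is only a recipe: it reads the table when invoked,
  // then appends one new row (standing in for the inserted records).
  def plan(table: FakeTable): () => List[String] =
    () => table.read() :+ "new-row"

  // Mirrors mode("overwrite") with truncate=true: truncate first, evaluate the plan afterwards.
  def overwriteLazily(table: FakeTable): Unit = {
    val p = plan(table)
    table.truncate()
    table.insertAll(p()) // reads the emptied table, so the original rows are lost
  }

  // Materialise the result before truncating, so the read still sees the original rows.
  def overwriteMaterialised(table: FakeTable): Unit = {
    val snapshot = plan(table)()
    table.truncate()
    table.insertAll(snapshot)
  }
}
```

In Spark itself, `df.cache()` alone is not a reliable snapshot, since evicted cache blocks are recomputed from the source. Writing the merged result to a separate staging table first is the more robust analogue of `overwriteMaterialised`.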

Data in the dataframe

Could you please share your observations and possible solutions?