Just to start off, a requirement like "it must use regular expression" doesn't make much sense without a reason: for your purposes a few splits would be much quicker to work out and probably about as robust. That being said...
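For comparison, here is a minimal sketch of the split-based approach. The function name parse_with_splits is made up for illustration, and it assumes the same "date device source: message" layout as the example line used later in this answer.

```python
def parse_with_splits(line):
    """Split a syslog-like line into date, device, source and message."""
    # sep=None splits on runs of whitespace; maxsplit=4 leaves the
    # remainder ("source: message") untouched as the fifth item.
    parts = line.split(None, 4)
    if len(parts) != 5:
        return None
    month, day, time, device, rest = parts
    # The first ": " separates the source from the free-form message.
    source, sep, message = rest.partition(": ")
    if not sep:
        return None
    return {
        "date": f"{month} {day} {time}",
        "device": device,
        "source": source,
        "message": message,
    }


print(parse_with_splits(
    "Oct 23 18:08:26 syntax logrotate: ALERT exited abnormally with [1]"
))
```

Unlike the regex, this does no validation of the individual fields; it only checks that there are enough pieces.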

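If you want to use regex to parse these syslog-like messages, you need to work out a pattern for at least three of the four fields (the fourth can be a wildcard), and then stick them together with (named) groups.

We hope to end up with something like the following. The four pieces it refers to are built up one by one below and have to be assigned before this line is run: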

re_log = rf'(?P<date>{re_date}) (?P<device>{re_device}) (?P<source>{re_source}): (?P<message>{re_message})'

Note the spaces between the groups, and the colon.

Since the message is unlikely to follow any usable pattern, that will have to be our wildcard:

re_message = r'.*'

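Likewise, the device is hopefully a valid device id or hostname (no spaces, just letters, digits, underscores and dashes, which is what the class below allows; a fully qualified name containing dots would need a . added to it):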

re_device = r'[\w-]+'

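We could use datetime or time to get a formal parse of the date, but we don't really care, so let's match your format roughly. We don't know if your log format uses leading zeroes or leaves them out, so we allow either. Note that the pattern expects exactly one space between the month and the day; if your logs pad single-digit days with a second space, as traditional syslog does, allow for one or more spaces there: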

re_date = r'\w{3} \d{1,2} \d{1,2}:\d{2}:\d{2}'
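If you do end up wanting a real timestamp, the matched date string can be handed to datetime.strptime afterwards. This is only a sketch: the helper name parse_log_date is made up, the year is an assumption you have to supply yourself because the log line does not carry one, and %b relies on English month abbreviations in the current locale.

```python
from datetime import datetime


def parse_log_date(text, year):
    """Turn a matched date like 'Oct 23 18:08:26' into a datetime."""
    # The log format has no year, so we prepend one (an assumption
    # made by the caller) and parse it together with the rest.
    return datetime.strptime(f"{year} {text}", "%Y %b %d %H:%M:%S")


stamp = parse_log_date("Oct 23 18:08:26", 2023)
print(stamp.isoformat())  # 2023-10-23T18:08:26
```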

The source is a bit unstructured, but as long as it doesn't contain a space we can just match on everything that isn't a space. The colon and space that follow the group in the re_log expression mark where the source ends:

re_source = r'[^ ]+'
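To see why the colon does not end up inside the source, and why later colons in the message are harmless, here is a small standalone check. The pattern name re_source_demo and both sample strings are made up for illustration.

```python
import re

# A space-free source followed by a literal ": ", then the message.
re_source_demo = re.compile(r'(?P<source>[^ ]+): (?P<message>.*)')

# [^ ]+ first grabs "kernel:" and then backtracks one character so
# that the literal ": " can match; the later colon stays in the message.
m = re_source_demo.match("kernel: usb 1-1: new USB device")
print(m.group("source"))   # kernel
print(m.group("message"))  # usb 1-1: new USB device

# Brackets (e.g. a process id) are fine, since only spaces are excluded.
m2 = re_source_demo.match("sshd[4242]: session opened")
print(m2.group("source"))  # sshd[4242]
```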

In the end, trying it out gives us something we can apply to your messages:

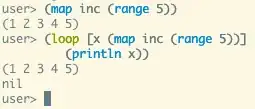

>>> import re
>>> eg = "Oct 23 18:08:26 syntax logrotate: ALERT exited abnormally with [1]"
>>> m = re.match(re_log, eg)
>>> m.groupdict()
{'date': 'Oct 23 18:08:26',
 'device': 'syntax',
 'source': 'logrotate',
 'message': 'ALERT exited abnormally with [1]'}
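Put together in the order Python needs (the pieces first, then the combined pattern), a self-contained sketch could look like this. The helper parse_lines and the second sample line are made up for illustration; lines that don't match are simply skipped here, which may or may not be what you want.

```python
import re

# The individual pieces, exactly as worked out above.
re_date = r'\w{3} \d{1,2} \d{1,2}:\d{2}:\d{2}'
re_device = r'[\w-]+'
re_source = r'[^ ]+'
re_message = r'.*'

# Combine them into one pattern with named groups and compile it once.
re_log = re.compile(
    rf'(?P<date>{re_date}) (?P<device>{re_device}) '
    rf'(?P<source>{re_source}): (?P<message>{re_message})'
)


def parse_lines(lines):
    """Yield one dict per line that matches the log pattern."""
    for line in lines:
        m = re_log.match(line)
        if m:
            yield m.groupdict()


sample = [
    "Oct 23 18:08:26 syntax logrotate: ALERT exited abnormally with [1]",
    "this line is not in the expected format",
]
records = list(parse_lines(sample))
print(records)
```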