On my laptop (12 physical cores) I have MATLAB with the Parallel Computing Toolbox, and I want to perform the following task: compute the largest eigenvalue in magnitude (the spectral radius) of 6 different large matrices using a parfor loop.

I have two possibilities:

1. 2 cores work on the first matrix (A1), 2 cores on the second matrix (A2), ..., 2 cores on the sixth matrix (A6).

2. 6 cores work on the first matrix (A1) and 6 cores on the second matrix (A2). In a second iteration of the loop, 6 cores work on the third matrix (A3) and 6 on the fourth (A4), and in a third iteration I compute the spectral radius of the last two matrices (A5 and A6) in the same way.

Let's focus on the first approach: with the Parallel Computing Toolbox, how can I enforce that the first two cores work on the first matrix A1, the third and fourth cores work on A2, and so on?

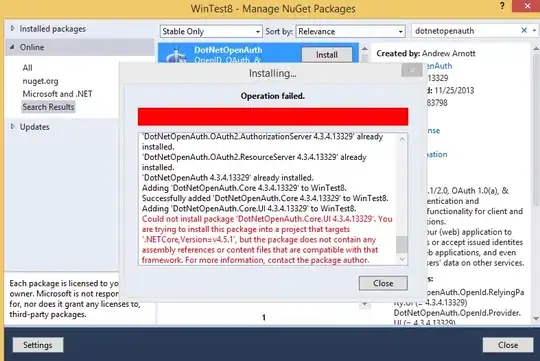

Here's what I thought: I would specify (in the toolbox), NumWorkers = 6 and NumThreads = 2, as done in the following image:

and then I would write the following parfor:

    % Initialize six large random matrices A{1}, ..., A{6} (stored in a cell array)

    x = zeros(6,1);

    parfor i = 1:6
        % Spectral radius of the i-th matrix
        x(i) = max(abs(eig(A{i})));
    end

What I would like is that, for i=1, my first two cores work on the computation of x(1), for i=2 the next two cores work on x(2), and so on. Is this the right way to achieve that?
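For reference, I believe the same settings can also be applied from code instead of through the settings dialog. Below is a minimal sketch of what I have in mind, under a few assumptions: the default cluster profile returned by `parcluster()` is the local one, the matrix size `n` is an arbitrary placeholder, and the `threads` vector is a helper I added only to check how many computational threads each worker reports. It does not show which physical cores are used.

```matlab
% Sketch: 6 workers with 2 computational threads each, set from code.
delete(gcp('nocreate'));      % close any existing pool first

c = parcluster();             % assumption: the default profile is the local cluster
c.NumWorkers = 6;             % six workers, one per matrix
c.NumThreads = 2;             % two computational threads per worker
pool = parpool(c, 6);

% Hypothetical test data: six random matrices in a cell array
n = 2000;                     % placeholder size, chosen arbitrarily
A = cell(6, 1);
for i = 1:6
    A{i} = rand(n);
end

x = zeros(6, 1);
threads = zeros(6, 1);        % helper: thread count reported inside each iteration
parfor i = 1:6
    threads(i) = maxNumCompThreads;   % threads available on the worker running iteration i
    x(i) = max(abs(eig(A{i})));       % spectral radius of the i-th matrix
end

disp(threads.');              % I would expect 2 for every entry if the setting is applied
delete(pool);
```

If every entry of `threads` is 2, the per-worker thread setting is at least being picked up, although that alone does not tell me whether iteration i is tied to a particular pair of cores.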