I'm learning about __shared__ memory in CUDA, and I'm confused about how Nsight Compute shows shared memory statistics.

I'm going through this article (code available on Nvidia's github here, but copied below for reference).

#include <stdio.h>

__global__ void staticReverse(int *d, int n)

{

__shared__ int s[64];

int t = threadIdx.x;

int tr = n-t-1;

s[t] = d[t];

__syncthreads();

d[t] = s[tr];

}

__global__ void dynamicReverse(int *d, int n)

{

extern __shared__ int s[];

int t = threadIdx.x;

int tr = n-t-1;

s[t] = d[t];

__syncthreads();

d[t] = s[tr];

}

int main(void)

{

const int n = 64;

int a[n], r[n], d[n];

for (int i = 0; i < n; i++) {

a[i] = i;

r[i] = n-i-1;

d[i] = 0;

}

int *d_d;

cudaMalloc(&d_d, n * sizeof(int));

// run version with static shared memory

cudaMemcpy(d_d, a, n*sizeof(int), cudaMemcpyHostToDevice);

staticReverse<<<1,n>>>(d_d, n);

cudaMemcpy(d, d_d, n*sizeof(int), cudaMemcpyDeviceToHost);

for (int i = 0; i < n; i++)

if (d[i] != r[i]) printf("Error: d[%d]!=r[%d] (%d, %d)\n", i, i, d[i], r[i]);

// run dynamic shared memory version

cudaMemcpy(d_d, a, n*sizeof(int), cudaMemcpyHostToDevice);

dynamicReverse<<<1,n,n*sizeof(int)>>>(d_d, n);

cudaMemcpy(d, d_d, n * sizeof(int), cudaMemcpyDeviceToHost);

for (int i = 0; i < n; i++)

if (d[i] != r[i]) printf("Error: d[%d]!=r[%d] (%d, %d)\n", i, i, d[i], r[i]);

}

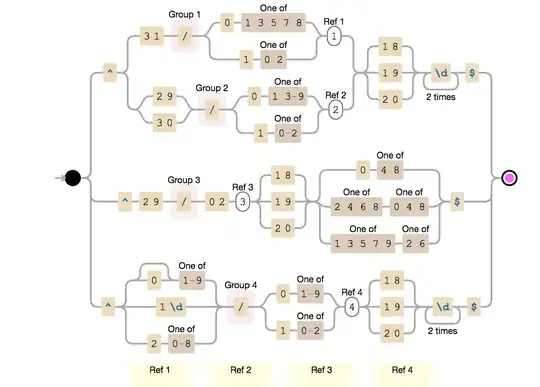

When I run Nsight Compute, I see the following chart for the staticReverse kernel (the dynamicReverse kernel is almost identical):

Question 1: The chart shows 1 request to and 1 request from shared memory, but why does it also show 0 shared memory instructions? Does a request not count as an instruction? From this chart's perspective, what counts as a shared memory instruction?

Next, in the source view, Nsight Compute shows line-by-line counts for various metrics:

Question 2: Why does "Memory L1 Transactions Shared" show 0 for lines 8 and 10? I was expecting to see:

- Line 8: an equal number of [load transactions from global memory] and [store transactions to shared memory]

- Line 10: an equal number of [load transactions from shared memory] and [store transactions to global memory]

Question 3: Why are there 8 memory transactions each for lines 8 and 10?

My system:

- Ubuntu 18.04 LTS

- GeForce 1070 (Pascal)

- CUDA Version: 10.2

- Driver Version: 440.64.00