I'm successfully running a client web page that acts as a voice message sender, using the MediaRecorder API:

- when the user presses any key, an audio recording starts,

- when the key is released, the audio recording is sent, via socket.io, to a server for further processing.

This is a sort of PTT (Push To Talk) user experience: the user just has to press a key (push) to activate the voice recording, and then release the key to stop the recording, which triggers sending the message to the server.

Here is a chunk of the JavaScript code I used:

    navigator.mediaDevices
      .getUserMedia({ audio: true })
      .then(stream => {
        const mediaRecorder = new MediaRecorder(stream)
        let audioChunks = []

        // start and stop recording: keyboard (any key) events.
        // keydown auto-repeats while a key is held, so only start when idle
        // (calling start() on an active recorder throws InvalidStateError)
        document.addEventListener('keydown', () => {
          if (mediaRecorder.state === 'inactive') mediaRecorder.start()
        })
        document.addEventListener('keyup', () => {
          if (mediaRecorder.state === 'recording') mediaRecorder.stop()
        })

        // collect each data chunk produced by the MediaRecorder
        mediaRecorder.addEventListener('dataavailable', event => {
          audioChunks.push(event.data)
        })

        // on the MediaRecorder stop event,
        // trigger the socket.io audio message emission
        mediaRecorder.addEventListener('stop', () => {
          socket.emit('audioMessage', audioChunks)
          audioChunks = []
        })
      })

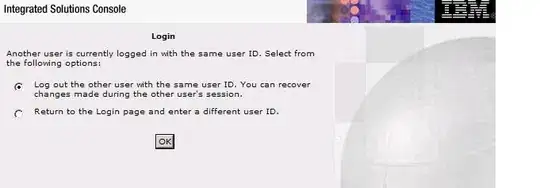

Now, What I want is to activate/deactivate the audio(speech) recording not only from a web page button/key/touch, but from an external hardware microphone (with a Push-To-Talk button). More precisely, I want to interface an industrial headset with PTT button on the ear dome, see the photo:

BTW, the PTT button is just a physical button that acts as a short-circuit toggle switch (the photo is just an example):

- By default the microphone is grounded and input signal == 0

- When the PTT button is pressed, the microphone is activated and input signal != 0.
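To make the "signal == 0" versus "signal != 0" distinction concrete, here is a minimal sketch of how the level of one buffer of samples could be measured. It assumes the samples arrive as floats in the range [-1, 1] (as in a Web Audio `Float32Array`), and it compares against a threshold rather than exactly zero, since a grounded input may still carry a little noise. The names `rmsLevel`, `isMicActive` and the value of `DELTA` are hypothetical and would need tuning against the real headset.

```javascript
// Root-mean-square level of one buffer of PCM samples in the range [-1, 1].
function rmsLevel(samples) {
  if (samples.length === 0) return 0;
  let sumOfSquares = 0;
  for (let i = 0; i < samples.length; i++) {
    sumOfSquares += samples[i] * samples[i];
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

// Hypothetical threshold: an assumption, to be tuned against the
// noise floor measured with the PTT button released.
const DELTA = 0.01;

// True when the buffer is loud enough to count as "microphone active".
function isMicActive(samples, threshold = DELTA) {
  return rmsLevel(samples) > threshold;
}
```

In the browser, `samples` would typically come from something like `e.inputBuffer.getChannelData(0)` inside an `onaudioprocess` handler.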

Now my question is: how can I use the Web Audio API to detect when the PTT button is pressed (so the audio signal is > 0) and then call mediaRecorder.start()?

Reading here: I guess I have to use the stream returned by mediaDevices.getUserMedia and create an AudioContext() processor:

    const handleSuccess = function(stream) {
      const context = new AudioContext();
      const source = context.createMediaStreamSource(stream);
      // note: createScriptProcessor is deprecated in favor of AudioWorklet
      const processor = context.createScriptProcessor(1024, 1, 1);

      source.connect(processor);
      processor.connect(context.destination);

      processor.onaudioprocess = function(e) {
        // Do something with the data,
        console.log(e.inputBuffer);
      };
    };

    // handleSuccess is a const, so it must be defined before it is referenced
    navigator.mediaDevices.getUserMedia({ audio: true, video: false })
      .then(handleSuccess);

But what must the processor.onaudioprocess function do to start (volume > DELTA) and stop (volume < DELTA) the MediaRecorder?

I guess the volume detection could be useful in two situations:

- With the PTT button, where the user explicitly decides the duration of the speech by pressing and releasing the button

- Without the PTT button, in which case the voice message is created with the so-called VOX mode (continuous audio processing)
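Both situations could be covered by the same small state machine, sketched below under some assumptions: one level value (for example an RMS value) is computed per audio buffer, the gate "opens" when the level rises above a threshold, and it "closes" only after the level has stayed at or below the threshold for a number of consecutive buffers. The function `createLevelGate` and its options `threshold`, `holdFrames`, `onOpen` and `onClose` are hypothetical names, not part of any browser API.

```javascript
// Threshold gate with a hold (hang) time, fed one level value per buffer.
// All names here are illustrative assumptions.
function createLevelGate({ threshold, holdFrames, onOpen, onClose }) {
  let open = false;
  let quietFrames = 0;

  // Call once per audio buffer; returns whether the gate is currently open.
  return function update(level) {
    if (level > threshold) {
      quietFrames = 0;
      if (!open) {
        open = true;
        onOpen();
      }
    } else if (open) {
      quietFrames += 1;
      if (quietFrames >= holdFrames) {
        open = false;
        quietFrames = 0;
        onClose();
      }
    }
    return open;
  };
}

// Small simulation with made-up level values.
const events = [];
const gate = createLevelGate({
  threshold: 0.05,
  holdFrames: 3,
  onOpen: () => events.push('open'),   // in the browser: mediaRecorder.start()
  onClose: () => events.push('close'), // in the browser: mediaRecorder.stop()
});
[0, 0.2, 0, 0.3, 0, 0, 0, 0.5].forEach(level => gate(level));
console.log(events);
```

With a PTT button, a small `holdFrames` is probably enough, because releasing the button cuts the signal cleanly. In VOX mode a larger value would bridge the short pauses between words; with 1024-sample buffers, and assuming a 44.1 kHz sample rate, each buffer lasts about 23 ms.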

Any idea?